- Home

- Security, Compliance, and Identity

- Microsoft Sentinel Blog

- Implementing Lookups in Azure Sentinel

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

In the blog post “Active Lists out; make_list() in,” @Ofer_Shezaf referred to one of the uses of Active Lists (ArcSight) or reference sets (QRadar), namely implementing correlation. But Active Lists, reference sets, and the appropriately named Lookups in Splunk have another important use: lookups. In this blog post, we will explore lookups in Azure Sentinel.

Azure Sentinel has various methods to perform lookups, enabling diverse sources for the lookup data and different ways to process it. Overall, I think you will find that Azure Sentinel capabilities offer unparalleled lookup prowess.

This post of part of a series of blog posts on writing rules in Azure Sentinel:

- Azure Sentinel correlation rules using lists

- Azure Sentinel correlation rules using the join operator

- Implementing Lookups in Azure Sentinel (this post)

- Approximate, partial, and combined lookups

- Handling sliding windows in Azure Sentinel rules

Why lookups?

Why do you need to look up information in a SIEM query, whether hunting queries or detection rules? The top reasons are:

- Allow-listing - making sure a query ignores known outliers that do not justify alerting on. For example, excluding a backup process from a query identifying access to large amounts of data.

- Watch lists - in reverse, ensure that alerts with the listed entities are promoted, whether by assigning a higher severity or by alerting only on those. For example, flagging access to sensitive finance files.

- Enrichment – extending events or alerts with additional information needed for detection or investigation. Common enrichment sources include user information, asset or resource information, geoinformation, and threat intelligence.

Those are critical capabilities of a SIEM. While each specialized security tool has a partial view of the world, a SIEM can utilize its broader outreach to enrich the event and alert data and centrally manage allow-lists and watch lists.

In this blog post, I examine implementing all those use cases in Azure Sentinel.

Lookup methods

Azure Sentinel provides four methods to reference, import, and use lookup information. The methods are:

- The built-in Watchlists feature, which enables uploading CSV files as lookup tables.

- The externaldata KQL function, which enables referencing an Azure Storage file as a lookup table.

- Custom tables, imported using a custom connector.

- A KQL function utilizing the datatable operator, which can be updated interactively or using PowerShell.

In this article, we discuss each of the latter three, leaving Watchlists to the official documentation, and finish with a comparison of them.

Note that while importing or referencing, the lookup information differs between the methods. Performing the lookup action in KQL is similar, and the examples weaved through work with any lookup method, including Watchlists.

Referencing an Azure Storage file using the KQL externaldata operator

To support a lookup from an external file, KQL offers the "exernaldata" operator. Unlike lookup implementation in other SIEM products, externaldata is not a lookup operator. Instead, externaldata enables using files as if they were Azure Sentinel tables, allowing pre-processing of the file before performing the lookup, such as filtering and parsing. I will demonstrate each in the examples below.

Creating the file

- Create an Azure storage account.

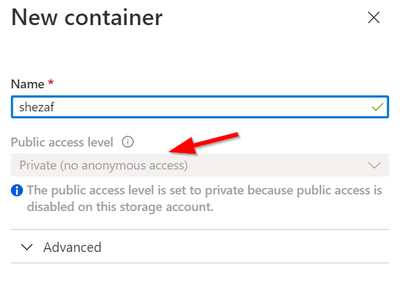

- Create a blob container. Note that the container is private by default and does not allow public access, which is probably what you want.

- As an alternative to blobs, you can also use Azure Files or Azure Tables. The main difference is how you update the files:

- Azure Files allows easy sync to a Windows computer, allowing editing of the lookup table using standard software such as Excel.

- Azure Tables allows table-style editing within the portal.

- Using blobs, you can still edit using the internal Azure Blob storage editor.

- Upload to it your lookup file

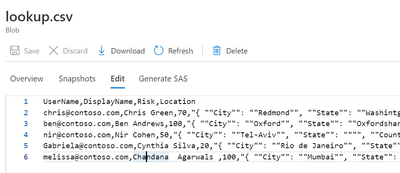

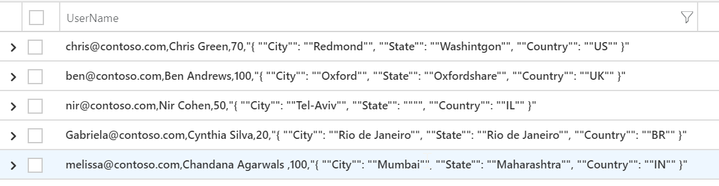

In the following sections, I will use the following CSV file, conveniently created in Excel:

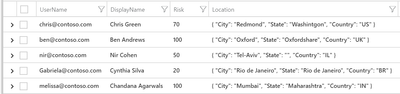

UserName,DisplayName,Risk,Location

chris@contoso.com,Chris Green,70,"{ ""City"": ""Redmond"", ""State"": ""Washintgon"", ""Country"": ""US"" }"

ben@contoso.com,Ben Andrews,100,"{ ""City"": ""Oxford"", ""State"": ""Oxfordshare"", ""Country"": ""UK"" }"

nir@contoso.com,Nir Cohen,50,"{ ""City"": ""Tel-Aviv"", ""State"": """", ""Country"": ""IL"" }"

Gabriela@contoso.com,Cynthia Silva,20,"{ ""City"": ""Rio de Janeiro"", ""State"": ""Rio de Janeiro"", ""Country"": ""BR"" }"

melissa@contoso.com,Chandana Agarwals ,100,"{ ""City"": ""Mumbai"", ""State"": ""Maharashtra"", ""Country"": ""IN"" }"

To get a secure access URL, use the “Get Shared Access Signature…” feature, which generates for you a URL similar to this one:

https://***.blob.core.windows.net/externaldata/users.csv?sp=rl&st=2019-12-23T12:49:20Z&se=2019-12-24T12:49:20Z&sv=2019-02-02&sr=b&sig=***...***

Using the reference file in KQL

Since we start with an allow-list example, we need only the first column from the lookup file and can use the following KQL command to reference the file:

externaldata (UserPrincipalName: string) [h"https://..."] with (ignoreFirstRecord=true)

Let’s examine the query.

- First, we defined the type for the first column in the CSV file. String in this case.

- Next, we provided the URL. Note the “h” added in front of the string: this ensures that we don’t log the string, including sensitive information, to our internal audit logs. For more information on query obfuscation, read here.

- Last, we use the attribute ignoreFirstRecord=true since the first line includes column names and is not relevant.

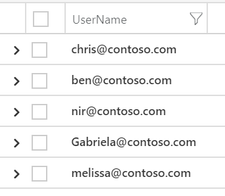

When you run this query, you get this:

Only the first column of the file is used since we defined a type only for it.

The filename extension identifies the file as CSV; however, we could have overridden the type. For example, if we force “txt” as the file type, each file line is considered a single field, and the query returns in the UserName field the entire line. Other supported formats include JSON and AVRO, as well as compressed files.

externaldata (UserPrincipalName: string) [h"https://..."] with (ignoreFirstRecord=true, format="txt")

For a full list of supported file types and other attributes, refer to this page.

Allow-listing users

To implement while listing, let’s take Azure Sentinel’s rules to detect Failed login attempts to Azure Portal. The modified (and abbreviated) query is below, with the modifications marked in yellow:

let timeRange = 1d;

let allowlist = externaldata (UserPrincipalName: string) [h"https://..."] with (ignoreFirstRecord=true);

SigninLogs

| where TimeGenerated >= ago(timeRange)

…

// Exclude non-failure types

| where ResultType !in ("0", "50125", "50140")

// Exclude allow-listed users

| where UserPrincipalName !in~ (allowlist)

…

The first modification defined the allow-list table using the externaldata command. Strictly speaking, you don’t have to define the allow-list table in advance, but it adds a lot to readability. The second modification checked that the UPN is not in the allow-list table. That’s all.

Wonder why the “~”; in the “not in” operator? It means that the comparison is case insensitive.

But what if I want to allow-list by two fields, i.e., use a table with users and apps and allow-list the users only to specific apps. You can do that using concatenation as follows:

...

let allowlist ...

// -- Add the following line, assuming that allowlist included both UserPrincipalName and AppDiscplayName

let allowlistconcat = toscalar (allowlist | summarize make_list(strcat(UserPrincipalName, "|", AppDisplayName)));

SigninLogs

...

// -- Replace the allow-list lookup line with the following

| where strcat(UserPrincipalName, "|", AppDisplayName) !in~ (allowlistconcat)

Note: the "in" operator is limited to 1,000,000 values.

If you need more, use the "join" method described later.

Using the lookup as a watch list

Let’s use the same alert rule to demonstrate a watch list. Login failures are not particularly interesting; however, there might be a few users for which we want to check each failure. Assume that those are the users for which the risk is higher in our lookup table.

Now we need additional fields in the reference table, so let’s extend the fields' mapping. By specifying the other columns in the source file, we make them all available for our queries:

externaldata (UserPrincipalName: string, DisplayName: string, Risk: int, Location: dynamic) [h"https://..."] with (ignoreFirstRecord=true)

Now, by slightly modifying the alert rule query, we implement our watch list:

let timeRange = 1d;

let watchlist = externaldata (UserPrincipalName: string , DisplayName: string, Risk: int, Location: string) [h"https://..."] with (ignoreFirstRecord=true)

| where Risk > 90;

SigninLogs

| where TimeGenerated >= ago(timeRange)

…

// Exclude non-failure types

| where ResultType !in ("0", "50125", "50140")

// Include only watched users

| where UserPrincipalName in~ (watchlist)

…

The changes are:

- In green, As discussed, we now map all the fields in the reference file. Though we still don’t need the location field, it will be handy in the next exercise.

- In pink, the logic changes: first filtering the lookup table only for users with a risk higher than 90, and then changing the selection criteria in the query itself from “!in” to “in,” as now we want to match only on users in the list rather than exclude them.

- In cyan, cosmetic changes: changing the lookup table name from allow-list to watchlist and updating the comments.

You may notice that the watchlist table has 4 columns and ask how it is used in an “in” clause. When a table is used with the “in” clause, the first column is used, which is what we need here. If we had liked to check against a different column, we could have appended a “project” operator after the “where Risk > 90” phrase to select the field we want to match against:

let watchlist = externaldata (UserPrincipalName: string, DisplayName: string, Risk: int, Location: string) [h"https://..."] with (ignoreFirstRecord=true)

| where Risk > 90 | project UserPrincipalName

Enrichment and field extraction

Let’s look at an additional piece of information available in the lookup file: the user's location. Another option for making the failed login rule better is to alert only on failed login attempts by users originating not from their home country. To do that, we need to enrich the failed login event with information about the user’s home country.

This requires two significant additions to the toolbox we used to allow lists and watch lists:

- The country information is a part of a field value. We need to extract it to use it.

- To look up a user and enrich the event with the country information, the “in” operator would not. We need a more versatile method: the “join” operator.

Extract fields

Extracting the country code from the reference table is an excellent example of the versatility that externaldata provides. By making the reference file a native table, it enables further processing it.

For example, we can extract the country code from the location string using the extract function, which applies a regex to the Location field and assigns the identified part to the user_country calculated field:

externaldata (UserPrincipalName: string, DisplayName: string, Risk: int, Location: string) [h"https://..."] with (ignoreFirstRecord=true)

| extend user_country = extract ('Country": "(.*)"', 1, Location)

However, since the Location field is in JSON format, an alternative would be to use it as such. By changing the type of the Location field to “dynamic,” we can get user_country much more directly:

externaldata (UserPrincipalName: string, DisplayName: string, Risk: int, Location: dynamic) [h"https://..."] with (ignoreFirstRecord=true)

| extend user_country = Location.Country

Detection using the country value

Once the country code is available, regardless of the method, we can apply it to the events for detection purposes using the join operator:

let timeRange = 1d;

let userinfo = externaldata

…

let failed_singins = SigninLogs

…

failed_singins | join kind= inner (userinfo) on UserPrincipalName

| where Location != user_country

The join statement works as follows:

- The query assigns the label “userinfo” to the parsed reference table from the previous section.

- It then labels the original rule query as “failed_signins.”

- The “join” operator then enriches the failed_singins by matching the relevant userinfo records and appending all its fields to the failed sign-in event.

- Lastly, the “Location” of the original event is compared to the “user_country,” which is the user's home country and was appended in the previous step and alert only if they are different.

Hunting and investigation

To make reference tables easier to use interactively by analysts, you can save the externaldata query as a function. This way, it can be used in any of the use cases above without needing to include the URL or the parsing clauses directly. So, for example, if the last externaldata query above is saved as the function "userinfo," the "join" statement could have used userinfo without explicitly defining it in the query itself.

Additional options for maintaining a reference table

Using a workspace table as a reference table

Sometimes it is more convenient to use a custom log table in the workspace as a lookup table. Any method to ingest data to a custom table would work to populate it. A simple method is using a PowerShell script, in this case, to upload the same file as a custom table, facilitating the same use cases described above:

Import-Csv .\user_list.csv | Upload-AzMonitorLog.ps1 -WorkspaceId '…' -WorkspaceKey '…' -LogTypeName 'users_lookup'

The command uploads the file to a table called user_lookup_CL (CL is always appended to custom log tables).

There are two tweaks to keep in mind when using a custom table:

First, since you cannot delete data, add to it. If you want to update records in the table, you need to upload the records again, creating duplicates. KQL arg_max operator can help:

let allowlist = users_lookup_CL | summarize arg_max (TimeGenerated, *) by UserName_s

While each user will have multiple records, the allow-list will include only the last record for each user based on the timestamp. This way, the allow-list table is a plug-in replacement for the externaldata implementation above.

Secondly, since analytic rules can inspect only data from the last 14 days in a table, you may need to refresh the custom table periodically. The simplest solution is a scheduled Logic Apps workflow that will read the table and write the data back to the same table.

Using a KQL function with the datatable operator as a reference table

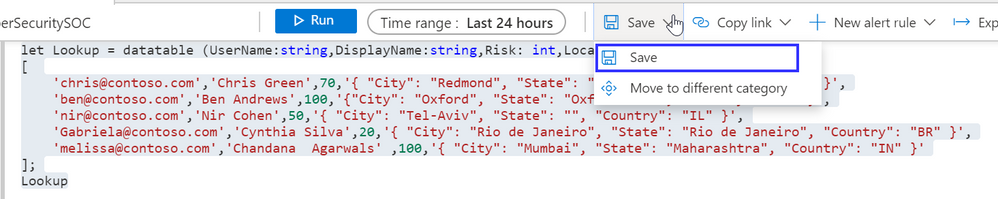

Another option is to use KQL functions utilizing the datatable operator as reference tables. To create the same lookup used throughout this article, type in the "Log" screen the following query:

let Lookup = datatable (UserName:string,DisplayName:string,Risk: int,Location:dynamic)

[

'chris@contoso.com','Chris Green',70,'{ "City": "Redmond", "State": "Washintgon", "Country": "US" }',

'ben@contoso.com','Ben Andrews',100,'{"City": "Oxford", "State": "Oxfordshare", "Country": "UK" }',

'nir@contoso.com','Nir Cohen',50,'{ "City": "Tel-Aviv", "State": "", "Country": "IL" }',

'Gabriela@contoso.com','Cynthia Silva',20,'{ "City": "Rio de Janeiro", "State": "Rio de Janeiro", "Country": "BR" }',

'melissa@contoso.com','Chandana Agarwals' ,100,'{ "City": "Mumbai", "State": "Maharashtra", "Country": "IN" }'

];

Lookup

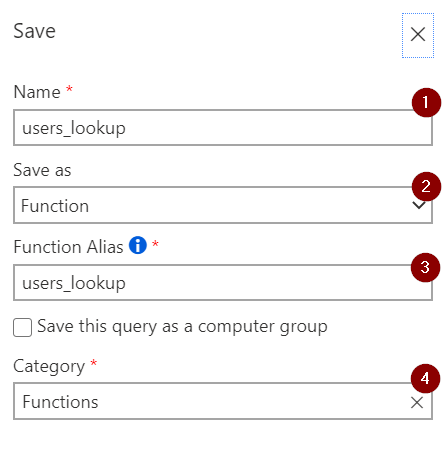

- Choose "Save as" function (2)

- Choose in "Function alias" (3) the name that will be used to refer to the table in KQL.

- The name and category (1 and 4, respectively) are less relevant and are used to specify the location in the query explorer.

You can also use an API to create and update KQL stored functions, and this way, automate lookup table management.

Now you use "users_looup" in the lookup queries presented in this article:

let allowlist = users_lookup | project UserName

let watchlist = users_lookup | where Risk > 90

Which one should I use?

We presented three methods to manage the lookup table. While the actual use in queries is essentially identical, the methods differ regarding the lookup table features: size, cost, performance, update options, and security. The following table provides a comparison:

| Attribute | Watchlists | KQL function | Custom table | Externaldata | ||||

|---|---|---|---|---|---|---|---|---|

| Table size | Small | CSV file limited to 3.8MB | Small | A query, including the function, is limited to 2MB. | Virtually unlimited | Large | ||

| Cost | Free | Free | Higher | Sentinel (and Log Analytics) ingestion cost. Note that data might need to be periodically re-ingested to allow use in Analytic Rules. | Low | Azure Storage cost. While there is both storage and read cost, the latter per lookup operation, the total is usually minimal. | ||

| Supported data | Only CSV | Multiple formats | Multiple formats | Multiple formats | ||||

| Performance | Fastest | Fastest | In memory | Fast | Log Analytics tables are cached. | Slower | Additional network latency and dependent on the choice of storage technology | |

| Automatic update | Coming soon | With the Watchlists API | Supported | Function update API | Supported | The Log Analytics Data Collector API and any custom connector option using it. | Supported | The Azure Storage API |

| Manual update | Coming soon | Limited | By editing in the "log" screen in Sentinel. | No | Yes |

Blobs - text editor in the portal Tables - table editor in the portal Files - Any desktop app using Desktop Sync |

||

| Read Access control | Any SOC user | Any SOC user | Any SOC user can read the content of a function. | Any SOC user | Lookup information is accessible to those with workspace access. | Any SOC user | Access control to the table in Azure Storage can be managed in detail. However, the table is indirectly accessible to anyone with workspace access who holds the SaaS key. | |

| Write Access control | Any SOC user | Can be fine-grained | Permissions for updating a function can be granularly set. | Any SOC user | The workspace ingestion key is needed to update the data. | Can be fine-grained | Using Azure Storage permissions | |

| Cross Workspace support | No | No | Yes | Yes | ||||

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.