Event Log - Windows Services

Jun 06 2019 01:35 AM - last edited on Apr 07 2022 05:50 PM by TechCommunityAP

Hello guys,

I'm new to Log Analytics, which is why I followed these interesting posts by @Stanislav Zhelyazkov:

- URL1

- URL2

However, they do not completely meet my need. My Azure VMs are shut down every night, at different times depending on the project. As you know, a shutdown generates Windows service stopped events.

I would like to exclude these events from my query.

I tried to join Event with Heartbeat and compare TimeGenerated with LastHeartBeat, or set value=1 when the VMs are up. But despite my efforts, it doesn't work.

Is it possible to do this with a Kusto query alone? Do you have any examples?

My last query:

- Labels: Azure Monitor, Query Language

Jun 06 2019 02:07 AM

I didn't understand why, in the Heartbeat part, you aggregate on Computer twice in a row. Consider using the extend operator instead.

Other comments:

- Prefer "has" over "contains" where possible.

- You can use "let" to set up the constants in the query.

- You might want to use "kind=leftouter" in your join to handle cases of no Heartbeat.
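
The three tips above could be combined roughly as follows. This is only a sketch: the original query is not shown in the thread, so the service name, the lookback window, and the use of event ID 7036 (the Service Control Manager state-change event) are assumptions chosen for illustration. It does not by itself separate shutdown-related stops from real ones.

```kusto
// Hypothetical constants, set once with "let" (not taken from the original query).
let lookback = 1h;
let serviceName = "Print Spooler";
Event
| where TimeGenerated > ago(lookback)
| where EventLog == "System" and EventID == 7036
// "has" matches whole terms and is generally cheaper than "contains".
| where RenderedDescription has serviceName and RenderedDescription has "stopped"
// leftouter keeps service events even when the computer sent no heartbeat.
| join kind=leftouter (
    Heartbeat
    | where TimeGenerated > ago(lookback)
    | summarize LastHeartbeat = max(TimeGenerated) by Computer
) on Computer
// One aggregation, then "extend" for derived columns instead of a second summarize.
| extend HasHeartbeat = isnotnull(LastHeartbeat)
| project TimeGenerated, Computer, RenderedDescription, LastHeartbeat, HasHeartbeat
```

With an inner join, computers that sent no heartbeat in the window would drop out of the result entirely, which is why the left outer join matters here.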

Hope it helps,

Meir :>

Jun 06 2019 04:37 AM

@Meir Mendelovich Thank you very much for all these tips. I will certainly improve my syntax.

However, I think it's more of a logic problem. The two queries (Event and Heartbeat) don't cover the same time frame. The first one filters only on my specific service events. The second one determines whether my VM is online or not.

As a result, when my VM is online, all my events are set to 1, and I can't distinguish real stopped events from the events generated by our shutdown.

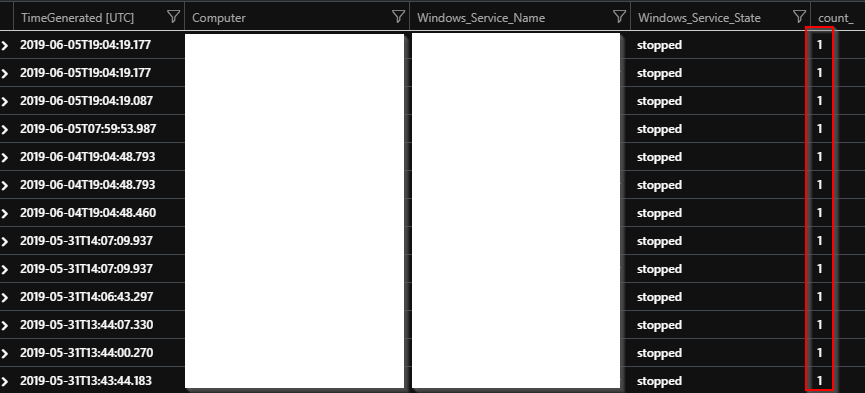

Result :

Jun 06 2019 05:10 AM

Jun 06 2019 06:28 AM

@Meir Mendelovich Sorry for the misunderstanding.

In fact, I would like to monitor stopped events for particular services, but also exclude those events when they are generated just after a shutdown, because that is normal behaviour and not due to an issue.

Jun 07 2019 02:41 AM

Hello guys,

I drew a diagram to explain my goal:

Jun 10 2019 12:37 AM

Solution

Hi,

Sorry for not being able to respond earlier here and on my blog. I do not think what you want to achieve is possible with queries alone. Even if you do manage to create the query, it might produce false positives, and if that happens regularly it will defeat the purpose.

I think the most logical approach is to have some automation that disables the alerts when the maintenance window starts and enables them again when it finishes. If you have machines in different maintenance windows, it is best to create an alert for each group, so that you can enable and disable them independently.

You could also have a function that represents a static or dynamic list of all machines, and have your alert query filter on that list. Before a maintenance window starts, you update that function's query via some automation to remove the machines that are in that window, and once the window ends you add them back by updating the function again. I haven't tested this latter scenario because, until recently, the docs for Log Analytics alerts carried a message saying that functions are not supported in alert queries. That message seems to have been removed, but it is unknown whether support was added or only the documentation was updated.

I hope this provides you with a clear path.

Also keep in mind that the article was published when the Azure Change Tracking solution tracked started and stopped services every 30 minutes at minimum. The current release allows you to lower that to 30 seconds. If you need faster data on those events, I would suggest using the Change Tracking solution.
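
As a rough illustration of the function-based approach described in this reply (which its author says was untested), the sketch below filters an alert query on a list of machines. The computer names are hypothetical, the `let` statement stands in for what would be a saved workspace function, and event ID 7036 is an assumption about which event is being monitored.

```kusto
// Stand-in for the saved function: a static list of machines currently
// outside their maintenance window. Names are hypothetical.
let MonitoredComputers = datatable(Computer: string) [
    "vm-app-01",
    "vm-app-02"
];
Event
| where TimeGenerated > ago(1h)
| where EventLog == "System" and EventID == 7036
| where RenderedDescription has "stopped"
// Only machines still in the list can raise the alert.
| where Computer in (MonitoredComputers)
```

Automation would then rewrite the list before and after each maintenance window, so the alert query itself never changes.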

Aug 13 2019 11:56 AM

Hello Stanislav,

Please excuse the delay in replying. Thank you very much for your answer. It's very clear and complete.

Now, thanks to you, I have a method to reach my goal.

Have a nice day,

Nicolas
