Azure Virtual Machine runtime calculation

May 08 2018 06:50 PM (last edited on Apr 07 2022 04:59 PM by TechCommunityAP)

Hello All,

I currently have an Azure Automation runbook that runs `Get-AzureRmVM` against all the subscriptions in our tenant. The script compiles a table that lists each VM's vmSize and status (running or deallocated), along with other pertinent information.

The output is then converted to JSON and posted to the Log Analytics REST API, where I have a custom log called RunningVMs_CL.
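For reference, the REST call described above is the HTTP Data Collector API. The runbook itself is PowerShell, but the request it has to build can be sketched in Python: the body is the JSON array of records, and the `Authorization` header is an HMAC-SHA256 signature over the method, content length, content type, `x-ms-date` and `/api/logs`, keyed with the base64-decoded workspace shared key. The `Log-Type` value `RunningVMs` is what produces the `RunningVMs_CL` table. The workspace ID, key and record fields are placeholders, and the sketch only builds the request rather than sending it. Microsoft has since announced the deprecation of this API in favour of the Logs Ingestion API, so check the current documentation before reusing it.

```python
import base64
import hashlib
import hmac
import json
from datetime import datetime, timezone
from email.utils import format_datetime

API_VERSION = "2016-04-01"


def build_signature(workspace_id: str, shared_key: str, rfc1123_date: str, content_length: int) -> str:
    """Return the SharedKey Authorization header value for one POST to /api/logs."""
    string_to_hash = (
        f"POST\n{content_length}\napplication/json\n"
        f"x-ms-date:{rfc1123_date}\n/api/logs"
    )
    decoded_key = base64.b64decode(shared_key)
    digest = hmac.new(decoded_key, string_to_hash.encode("utf-8"), hashlib.sha256).digest()
    return f"SharedKey {workspace_id}:{base64.b64encode(digest).decode('ascii')}"


def build_request(workspace_id, shared_key, records, log_type="RunningVMs", now=None):
    """Build (url, headers, body) for posting records; Log Analytics appends _CL to log_type."""
    body = json.dumps(records).encode("utf-8")
    now = now or datetime.now(timezone.utc)
    rfc1123_date = format_datetime(now, usegmt=True)  # e.g. "Mon, 14 May 2018 07:36:00 GMT"
    url = f"https://{workspace_id}.ods.opinsights.azure.com/api/logs?api-version={API_VERSION}"
    headers = {
        "Content-Type": "application/json",
        "Authorization": build_signature(workspace_id, shared_key, rfc1123_date, len(body)),
        "Log-Type": log_type,
        "x-ms-date": rfc1123_date,
    }
    return url, headers, body
```

The signed content length is the byte length of the encoded body, so the same `body` bytes must be the ones actually sent.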

What I would like to do is calculate each VM's runtime and alert when a VM runs more than 8 hours in a day, and again when it reaches 40 hours of total runtime. The 40-hour check might be difficult, because logs are only kept for 31 days at most.

Being new to the Log Analytics query language, I'm struggling to find the right commands for even the 8-hour calculation. Any tips on how I should approach this query?

Thanks

John

Labels: Azure Monitor, Query Language

May 08 2018 06:58 PM

The fields I have to work with are:

- Location_s: string, region name
- State_s: string, status of the VM (running or deallocated)
- type_s: string, hardwareprofile.vmsize (e.g. nv24, f2)
- timegenerated: created automatically during ingestion of the log
- vmname_s: string, VM name

There are other fields, but they are not relevant to the query needed.
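With only periodic snapshots in this table, runtime has to be inferred from how many "running" rows each VM has. Here is a small Python sketch of the per-day 8-hour check using the field names above. It assumes (hypothetically) that the runbook posts one snapshot per VM every hour, so each running row counts as one hour; `SNAPSHOT_INTERVAL_HOURS`, `Snapshot` and the function names are illustrative, not part of any Azure API.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime

# Assumption: the runbook posts one snapshot per VM every hour, so each
# "running" row stands for one hour of runtime. Adjust to your schedule.
SNAPSHOT_INTERVAL_HOURS = 1.0


@dataclass
class Snapshot:
    timegenerated: datetime
    vmname_s: str
    State_s: str


def is_running(state: str) -> bool:
    # Matches "running" and e.g. "VM running"; "deallocated" does not match.
    return "running" in state.lower()


def running_hours_per_day(rows):
    """Sum running time per (VM, calendar day) from periodic snapshots."""
    totals = defaultdict(float)
    for row in rows:
        if is_running(row.State_s):
            totals[(row.vmname_s, row.timegenerated.date())] += SNAPSHOT_INTERVAL_HOURS
    return dict(totals)


def over_daily_limit(rows, limit_hours=8.0):
    """Return sorted (VM, day) pairs whose runtime exceeds limit_hours."""
    return sorted(key for key, hours in running_hours_per_day(rows).items() if hours > limit_hours)
```

If the runbook runs less often, the estimate gets coarser: a VM started and stopped between two snapshots would not be counted at all.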

May 13 2018 09:12 AM

My organization is just starting with Log Analytics, and I've been looking at how we can use it to measure Azure VM utilization. In particular, we'd like to find any machines that are heavily underutilized.

I started by looking at the usual metrics: processor, memory, and disk usage. Comparing those to the VM's hardware profile might be interesting.

Maybe some other folks can share what they have done in this space?

Also, is there an easy way to get the schema of virtual machines, or of any of the Log Analytics namespaces?

Thanks

May 14 2018 07:36 AM

Hi John,

The exact query depends on your specific table structure, which I don't have access to (you provided the field names, but I need the actual table to create a working example).

I've created an example query based on the Heartbeat table; you can adjust it to fit your custom log:

```kusto
Heartbeat
| where TimeGenerated > ago(7d)
| summarize heartbeats_per_hour=count() by bin(TimeGenerated, 1h), Computer
| extend state_per_hour=iff(heartbeats_per_hour>0, true, false)
| summarize total_running_hours=countif(state_per_hour==true) by Computer
| where total_running_hours > 8
```
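To check the binning logic offline, here is a Python sketch that mirrors Noa's query step by step: records older than the lookback window are dropped, the rest are floored to the hour like `bin(TimeGenerated, 1h)`, and each computer's distinct hour bins are counted. The `(time, computer)` tuple input and the function names are assumptions for illustration. One difference when adapting this to RunningVMs_CL: the runbook presumably writes rows for deallocated VMs as well, so only rows whose State_s is running should be fed in; otherwise every hour with a snapshot would count as runtime.

```python
from collections import defaultdict
from datetime import timedelta


def running_hours_by_computer(records, now, lookback=timedelta(days=7)):
    """Count distinct 1-hour bins with at least one record, per computer.

    Mirrors: where TimeGenerated > ago(7d)
             | summarize count() by bin(TimeGenerated, 1h), Computer
             | summarize countif(...) by Computer
    """
    cutoff = now - lookback
    bins = defaultdict(set)
    for time_generated, computer in records:
        if time_generated > cutoff:
            # Floor to the start of the hour, like bin(TimeGenerated, 1h).
            hour_bin = time_generated.replace(minute=0, second=0, microsecond=0)
            bins[computer].add(hour_bin)
    return {computer: len(hours) for computer, hours in bins.items()}


def computers_over(records, now, threshold_hours=8):
    """Computers with more than threshold_hours distinct hours, like `where total_running_hours > 8`."""
    counts = running_hours_by_computer(records, now)
    return sorted(c for c, hours in counts.items() if hours > threshold_hours)
```

Because `summarize ... by bin(...)` only emits bins that contain at least one record, the `iff(heartbeats_per_hour>0, ...)` step in the query is always true; counting the bins directly gives the same result.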

You can also run it in our demo environment.

Basically, this query finds computers that have been running for more than 8 hours in total over the last 7 days. I'm not sure why 40 hours would be more complicated; can you explain what you meant?

HTH,

Noa

May 14 2018 07:45 AM

Noa,

Thanks for this, I'll adjust it for my table.

The 40-hour requirement is a lifetime calculation of the VM runtime. The customer I'm working with is providing a SaaS app "evaluation" to their own customer, who should be allowed NO more than 40 hours of total use. They would likely reach that within a week, but they could also take 40 days if they only use the VMs for an hour a day. Log Analytics keeps data for 31 days, right? So if I calculated from the retained data, there could be a scenario where the visible usage is 31 hours and never reaches 40.
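The retention problem described above comes down to simple arithmetic: a query can only sum the days still inside the retention window. This Python sketch makes that concrete with a list of per-day running hours (most recent last); the function names and the `LIFETIME_LIMIT_HOURS` constant are illustrative assumptions, not part of Log Analytics.

```python
LIFETIME_LIMIT_HOURS = 40


def lifetime_hours(daily_hours):
    """Total runtime over the whole evaluation."""
    return sum(daily_hours)


def visible_hours(daily_hours, retention_days):
    """Runtime a query can still see: only the most recent retention_days entries."""
    if retention_days <= 0:
        return 0
    return sum(daily_hours[-retention_days:])


usage = [1] * 40  # one hour a day for 40 days
print(lifetime_hours(usage), visible_hours(usage, 31))  # 40 31
```

With a 31-day window the visible total stays at 31 hours and never crosses the 40-hour limit, so either retention has to cover the whole evaluation period or the running total has to be kept somewhere that does not expire.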

John

May 14 2018 08:33 AM

Hey John,

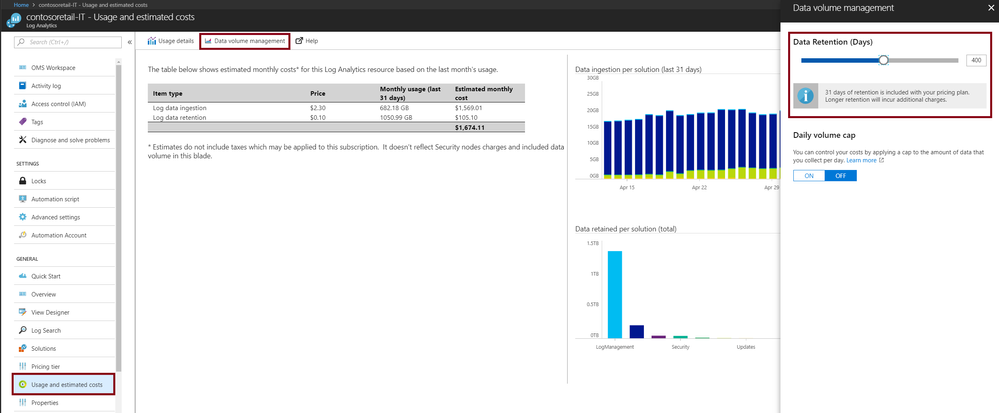

Retention is up to you. To configure it, open "Usage and estimated costs" in the Log Analytics workspace menu, and there select "Data volume management":

May 14 2018 10:19 AM

Thanks Noa! I didn't know that functionality had been added to the portal. Greatly appreciated!

May 15 2018 01:26 AM

Great! You can read about changing retention here, so you can manage your costs without concerns.

Aug 09 2023 08:58 PM

I am new to queries. Can you please help me get the running hours for an entire month or a selected time period?

Basically, I would like to know how many hours a VM was running in a given month, day, or selected time period.
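Assuming the same kind of periodic snapshot rows as in RunningVMs_CL above, running hours for any period are the number of running snapshots inside that period multiplied by the snapshot interval. This Python sketch shows both a month-by-month breakdown and an arbitrary `[start, end)` range; the hourly interval, the tuple layout and the function names are assumptions for illustration. In KQL the grouping would typically be done with `bin(TimeGenerated, 1d)` for days or `startofmonth(TimeGenerated)` for months.

```python
from collections import defaultdict
from datetime import datetime

# Assumption: one snapshot per VM per hour; each "running" row = 1 hour.
SNAPSHOT_INTERVAL_HOURS = 1.0


def is_running(state: str) -> bool:
    return "running" in state.lower()


def running_hours_in_period(rows, start: datetime, end: datetime):
    """Running hours per VM for snapshots with start <= time < end.

    rows: iterable of (timegenerated, vmname, state) tuples.
    """
    totals = defaultdict(float)
    for time_generated, vm, state in rows:
        if start <= time_generated < end and is_running(state):
            totals[vm] += SNAPSHOT_INTERVAL_HOURS
    return dict(totals)


def running_hours_per_month(rows):
    """Running hours per (VM, "YYYY-MM") calendar month."""
    totals = defaultdict(float)
    for time_generated, vm, state in rows:
        if is_running(state):
            totals[(vm, time_generated.strftime("%Y-%m"))] += SNAPSHOT_INTERVAL_HOURS
    return dict(totals)
```

The half-open `[start, end)` range means consecutive periods (for example, one month followed by the next) never count the same snapshot twice.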