Migrate Kubernetes workloads running on VMs using Azure Migrate – Planning & Execution

This article explains the steps for a lift-and-shift migration of Kubernetes workloads running on virtual machines (from any location, whether on-premises or a third-party cloud provider) to an Azure public region. The approach was tested at a customer site, where there was a specific requirement to migrate the Kubernetes workloads as-is while retaining their IP addresses.

As services such as Docker and Weave were installed manually on the VMs to set up the Kubernetes cluster, different IP addresses were used at different layers of the application (within the VM). Migrating them manually to an Azure public region would have been a huge task, estimated at 6-8 months of effort.

We decided to leverage the Azure Migrate service with a P2A (Physical to Azure) approach, keeping in mind that this migration was unique for two reasons:

- The virtual machines were running Kubernetes workloads and a variety of databases such as Postgres, MySQL and MongoDB.

- IP retention was a prime requirement for the customer as they had certificates bound to the Load Balancer IP.

Of course, there was an option to modernize the application by moving the workloads to AKS, but due to a strict migration timeline we decided to migrate as-is and focus on modernization later. This article is therefore intended to help with effective planning and migration of Kubernetes workloads running on VMs, as-is, to Azure.

Abstract:

The customer had approximately 700 virtual machines running Kubernetes workloads in two environments (Prod and Non-prod) on two separate networks. As IP retention was the key requirement, we could not migrate the workloads in phases the way a normal workload migration is planned.

Due to IP overlap issues, two networks with the same CIDR range cannot be attached to the same network. Hence, one entire on-premises network (prod or non-prod) must be migrated (failed over) completely at the same time, so that once the failover is performed, the on-premises network can be disconnected and the new network in Azure can function without any DNS conflicts.
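The overlap constraint can be checked up front, before any connectivity change is attempted. The following is a minimal sketch using Python's standard `ipaddress` module; the network names and CIDR ranges are hypothetical placeholders, not the customer's actual values.

```python
import ipaddress


def overlapping_pairs(cidrs):
    """Return every pair of named address ranges that overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in cidrs.items()}
    names = sorted(nets)
    return [
        (a, b)
        for i, a in enumerate(names)
        for b in names[i + 1:]
        if nets[a].overlaps(nets[b])
    ]


# Hypothetical address ranges, for illustration only.
ranges = {
    "hub": "10.0.0.0/16",
    "onprem-dev": "10.10.0.0/16",
    "azure-dev-target": "10.10.0.0/16",
}

# The source network and the target VNET share a range, so only one of
# them can be connected to the hub at any given time.
print(overlapping_pairs(ranges))  # [('azure-dev-target', 'onprem-dev')]
```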

We migrated each environment in just a day and ensured that all servers retained their IP addresses and that their Kubernetes workloads worked exactly as they had on-premises or at the source location. Planning (including replication time) took 3 weeks, and each environment was migrated over a weekend, which included functional testing as well.

Current Architecture

- The customer has two environments, Dev and QA. Each environment is placed on a separate network and has approximately 350 VMs.

- The on-prem networks are connected to a hub network in Azure through an ExpressRoute gateway.

- The inventory contains a combination of databases (MySQL, Kafka, MongoDB, Redis), manually built Kubernetes VMs, app servers etc.

- The Kubernetes VMs have a primary NIC and also carry additional internal IPs associated with load balancers, the Docker service etc.

- The customer wanted to retain the VM IPs post migration for the following reasons:

- If the Kubernetes load balancer IP changes, all certificates bound to the load balancer IP address need to be regenerated and updated.

- Back-end services need to be reconfigured due to the change of IPs at the enterprise LB.

- New port-opening requests must be raised on the firewall for each VM, which is a huge effort.

- Mock tests are needed with every individual application team since IPs are changing.

- DNS changes may be needed for all hosted intranet sites if the load balancer IP changes.

- Azure DevOps pipelines need to be modified, since IPs are changing.

Steps followed in Migration and Planning:

The important aspect of this migration is how it is planned and executed. Since the IPs had to be retained, the critical factor is that on the day of migration, all 350 servers belonging to various application categories must complete replication without any sync issues. If even a few machines show sync issues, the entire migration takes a hit. Hence, planning is very important for a successful migration. There must be proper coordination between the migration engineers, application team, database team and network engineers.

We followed a P2A migration approach by deploying configuration servers and process servers on-premises.

Planning:

- We used Azure Migrate to perform this migration. As the volume of servers was large, we had to build at least 4 migration projects and followed a P2A approach for one environment of 350 servers (e.g. the Dev environment).

Eg: We built 4 migration projects in Azure Migrate (Project 1, 2, 3, 4) in the target subscription.

- Deployed a config server for each project (Config Server A, B, C, D) on-premises as per Microsoft recommendations.

- Deployed a scale-out process server for each config server (Process Server A, B, C, D) to support the migration. This is optional.

- Our idea was to replicate approximately 80 servers per project, i.e. per config/process server.

- Created 4 storage accounts in the target subscription and Azure region, one for each project.

- Installed the Unified agent on all config servers and the process server agent on all process servers. Following best practices, we set up replication in batches for specific sets of applications.

- Monitored the replication progress daily and reported any errors proactively.

- We discussed with the customer and fixed a downtime date approximately 3 weeks from the start of replication. Even though replication did not take that long, we ensured we had enough buffer time to fix any unforeseen challenges.

- IMPORTANT: The customer created their own application test plan and took all relevant screenshots (number of pods etc.) before failover so that they could compare them post failover.

- While setting up target attributes in Azure Migrate, where you enter the information related to the target VNET, subnet, resource group etc., Azure Migrate picks up the Kubernetes workload VMs and automatically pulls up the details of the additional IP addresses associated with each VM.

Eg: As part of building Kubernetes workloads on VMs, you configure multiple IP address ranges that are consumed by Docker services, Weave services, LBs configured inside the server etc. As this is a lift-and-shift migration, all IP addresses configured as part of the Kubernetes setup are migrated as they are. During replication, Azure Migrate detects that multiple IP addresses are configured inside the OS and hence asks you to configure the same in the Azure Migrate tool for the target side.

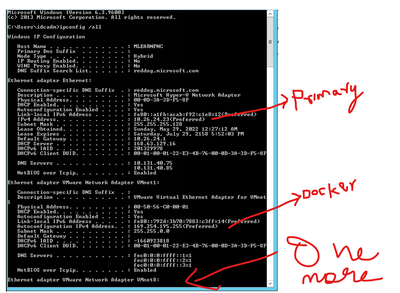

The other IPs configured as part of the Kubernetes build are configured inside the VM and belong to Docker services, Weave services, LBs etc. This is the prime reason why the customer wanted a lift-and-shift migration, so that the IP addresses configured inside the OS would also be migrated as they are. For example, if you run “ipconfig /all” inside the OS of a Kubernetes VM on the source side, you will see a primary IP address and a few additional IPs belonging to different ranges.

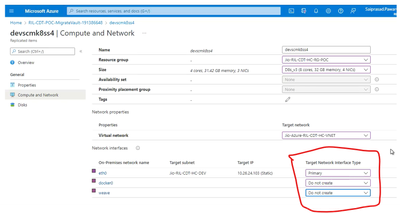

When you configure the target attributes, set the primary NIC, give it the same IP address as the source and make it static. For the remaining IPs related to Docker and Weave, select “Do not create” so that no new NICs are attached to the server as secondary NICs. As mentioned above, these additional IP addresses belong to the various services deployed as part of Kubernetes; they are configured inside the OS and are migrated as they are during lift and shift. Hence, do not disturb these additional IP address settings while migrating Kubernetes VM workloads, and leave them as “Do not create” so that new IPs are not attached to the server. Refer to the screenshot below.
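As a rough illustration of the NIC settings described above, the per-VM decision can be written down as a small Python sketch. The dictionary keys, the function name and the sample addresses are hypothetical and exist only for this example; they are not Azure Migrate API fields, and in practice these values are entered in the Azure Migrate portal.

```python
def plan_target_nics(primary_ip, in_guest_ips):
    """Build the intended target setting for each address seen on a source VM.

    The primary NIC keeps the source IP as a static address. Addresses that
    only exist inside the guest OS (Docker, Weave, in-VM load balancers) are
    marked so that no extra NIC is created for them in Azure.
    """
    plan = [{"ip": primary_ip, "role": "primary",
             "action": "create", "allocation": "static"}]
    for ip in in_guest_ips:
        plan.append({"ip": ip, "role": "in-guest",
                     "action": "do-not-create", "allocation": None})
    return plan


# Hypothetical addresses for one Kubernetes VM.
for entry in plan_target_nics("10.10.4.21", ["172.17.0.1", "10.32.0.1"]):
    print(entry)
```

Keeping such a plan per VM in the migration worksheet makes it easier to review the settings for all servers before the failover day.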

- Ensure the servers are assigned a static IP address matching the source in the Azure "Compute and Network" properties, since IP retention is the requirement.

- We agreed with the customer on an efficient migration/failover plan and grouped the servers by priority.

Eg: Priority 1, 2, 3, where 1 means high priority and 3 means low priority.

As the Kubernetes workloads depend on a common image server, this server was considered high priority (Priority 1) and hence was failed over last.

- We agreed to fail over servers in the priority sequence 3, 2, 1 and came up with a schedule. Eg: Start the failover for Priority 3 servers at one time and then, after a few hours, start the next priority set.

- During every migration schedule as defined above, the customer stopped all application and database related services on the respective servers.

- Each priority set contained roughly 50-75 servers.

- We instructed the customer to disable the services on the servers so that they would not start automatically post failover.

Eg: On Windows, the services were left in a stopped state with automatic startup disabled.

On Linux, we disabled auto start (upon reboot).

- The Priority 1 servers are the ones most depended upon and are used by many teams across the organization. They were therefore considered critical, and we failed them over in the last phase.

- Post failover, when the servers came online, the services were started first on the Priority 1 servers, followed by 2 and 3. This is because some of the Kubernetes workloads share the common image server, which belongs to Priority 1 and was migrated in the last phase. For the workloads to pull their images correctly, the services on the image server must be started first.

- Until all the priority sets (3, 2, 1) are migrated, you cannot log in to the VMs through the VPN, because the target network is not yet peered to the hub. You can still use Azure Bastion to connect to the VMs that have been failed over and do a baseline check if you want. The reason for using Azure Bastion for initial tests is explained under Network Change Planning.
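The two orderings described above (fail over in the sequence 3, 2, 1 and start services in the sequence 1, 2, 3) can be derived from a single inventory list. This is a minimal Python sketch; the server names and the `priority` field are hypothetical.

```python
from itertools import groupby


def waves(servers, reverse):
    """Group server names into waves ordered by priority.

    reverse=True gives the failover order (3, 2, 1);
    reverse=False gives the service start order (1, 2, 3).
    """
    ordered = sorted(servers, key=lambda s: s["priority"], reverse=reverse)
    return [
        (priority, [s["name"] for s in group])
        for priority, group in groupby(ordered, key=lambda s: s["priority"])
    ]


# Hypothetical inventory; priority 1 is the shared image server.
servers = [
    {"name": "image-server", "priority": 1},
    {"name": "k8s-node-01", "priority": 2},
    {"name": "app-01", "priority": 3},
    {"name": "k8s-node-02", "priority": 2},
]

print(waves(servers, reverse=True))
# [(3, ['app-01']), (2, ['k8s-node-01', 'k8s-node-02']), (1, ['image-server'])]
print(waves(servers, reverse=False))
# [(1, ['image-server']), (2, ['k8s-node-01', 'k8s-node-02']), (3, ['app-01'])]
```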

Network Change Planning:

One of the customer's prime requirements was that the IP addresses of the servers (Kubernetes workload VMs) must be retained post migration. As the volume of servers was large (~350 servers per environment) and all of them belonged to the same network, this network shift had to be carefully planned.

Since multiple VNETs with the same IP address range cannot be peered to the same hub (IP overlap issues), we must first disable the existing connectivity from on-premises to the hub network in Azure so that the new VNET (target VNET) in the Azure region can be peered to it. It was decided that on the day of migration, as soon as sync was complete across all priority sets (1, 2 and 3), we would fail over all 350 servers, then terminate the connectivity of the source network to the hub and initiate a new peering from the new VNET in Azure where the servers were failed over.

NOTE: We cannot switch the network before the entire set of servers is failed over, because sync is a continuous, ongoing process. If the connectivity is terminated, the configuration server becomes unreachable, and you may not be able to log in to it again and perform the failover. Hence, the peering should be disabled only after the entire set of servers has been migrated or failed over to the new region.

NOTE: If you want to log in to the migrated servers temporarily, you can set up Azure Bastion, because connections via the VPN will not work until the target VNET is peered with the hub network. The customer uses a VPN to connect to the servers, and by default only the IP address range of the source network was allowed. Even though the target VNET contains the same IP address range, it is not yet peered to the hub after just one set of servers has been migrated. Hence, direct connection via VPN to the new servers will not work until the on-prem connection is disabled and the new peering connection is established. We do not have to add the IP address range to the VPN for RDP, as the address range does not change post migration.

Hence, RDP or SSH login to all the VMs was possible only after the entire set of servers had been migrated (or failed over) and the peering connection was restored from the new region. We therefore used a combination of Azure Bastion and the customer VPN to connect to the VMs post migration. Azure Bastion was primarily used to check the health of VMs immediately after failover, and the VPN was used after the servers from all priority sets had been migrated.
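The cutover rule above amounts to a simple gate: the network switch is allowed only once every server reports a completed failover, and the access path depends on whether the target VNET is already peered. The sketch below only illustrates that logic in Python; the state strings and function names are hypothetical, and the real status would be read from the Azure Migrate portal.

```python
def ready_for_network_cutover(states):
    """Return True only when every server reports a completed failover.

    `states` maps a server name to a status string. An empty inventory is
    treated as not ready, to avoid cutting over by accident.
    """
    return bool(states) and all(s == "failed_over" for s in states.values())


def access_method(target_vnet_peered):
    """Pick the login path: Bastion before peering, customer VPN after."""
    return "vpn" if target_vnet_peered else "bastion"


# Hypothetical snapshot taken midway through the migration day.
snapshot = {"app-01": "failed_over", "k8s-node-01": "replicating"}
print(ready_for_network_cutover(snapshot))  # False
print(access_method(target_vnet_peered=False))  # bastion
```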

On the Migration Day:

- Create recovery plans in batches, with each batch containing only 10-15 servers. A recovery plan automates the failover of the set of VMs configured in it, so that failover need not be triggered individually on each server and the VMs fail over concurrently. NOTE: A recovery plan created in the vault fails over all its VMs asynchronously; they do not wait for one another to complete. If you need a failover sequence, create multiple recovery plans and trigger them one by one.

- Ensure the application and database services are in a stopped state on all source VMs and that auto start is disabled on them prior to failover.

- Ensure that the configuration is set properly on each VM so that it fails over to the right RG, the right VNET and the right IP address, and that secondary IP addresses are set to “Do not create” in the Azure Migrate portal.

- Once the failover of a particular set of VMs is done and shows as successful, immediately stop the corresponding servers in the source region.
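Splitting a priority set into recovery plans of at most 15 servers is simple arithmetic, sketched here in Python. The function name, the server names and the choice to balance batch sizes (rather than leave one small remainder batch) are assumptions made for this example.

```python
import math


def recovery_plan_batches(servers, max_size=15):
    """Split servers into evenly sized batches of at most max_size each."""
    if not servers:
        return []
    count = math.ceil(len(servers) / max_size)
    base, extra = divmod(len(servers), count)
    batches, start = [], 0
    for i in range(count):
        # The first `extra` batches take one additional server.
        size = base + (1 if i < extra else 0)
        batches.append(servers[start:start + size])
        start += size
    return batches


# Hypothetical priority set of 62 servers.
priority_3 = [f"server-{n:03d}" for n in range(1, 63)]
print([len(b) for b in recovery_plan_batches(priority_3)])  # [13, 13, 12, 12, 12]
```

Each resulting batch corresponds to one recovery plan; triggering the plans one after another gives a sequence, while the VMs inside a plan fail over concurrently.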

Post Failover

- Terminate the connectivity from the source network to the hub, and peer the new VNET in the target region (with the same IP range) to the hub network.

- Log in to the VMs (RDP/SSH) via the customer VPN and ensure the login works smoothly.

- Turn on the application and DB services on all servers in priority order.

- Ensure that the servers are reachable as before. Eg: The Kubernetes workload VMs were able to fetch images from the image server.
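A basic reachability check for the RDP/SSH step can be scripted once the new peering is in place. This is a minimal sketch using Python's standard `socket` module; it only tests whether a TCP port accepts connections, not whether the application behind it is healthy, and the addresses in the usage comment are hypothetical.

```python
import socket


def is_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def unreachable(targets, timeout=3.0):
    """Return the (host, port) pairs that did not accept a connection."""
    return [(h, p) for h, p in targets if not is_reachable(h, p, timeout)]


# Example usage with hypothetical addresses (22 = SSH, 3389 = RDP):
# failures = unreachable([("10.10.4.21", 22), ("10.10.4.30", 3389)])
# print(failures)
```

An empty result means every listed port accepted a connection; application-level checks, such as comparing pod counts against the pre-failover screenshots, still need to follow.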

In a workload migration project, effective planning always helps minimize post-migration issues. Having a general understanding of the network architecture of the target platform also helps. Happy journey to the cloud!
