Using BeeGFS storage pools to provide flexible performance and data persistence

Introduction

On Azure, deploying a BeeGFS parallel filesystem on NVMe SSDs for both storage and metadata (e.g. the L8s_v2 SKU) has performance and cost advantages. NVMe SSDs provide superior throughput and IOPS, and using the NVMe SSDs that come with the SKU (e.g. Lsv2 SKUs) can significantly reduce cost and complexity because there are no additional data disks to configure, install and pay for.

The primary disadvantage of using NVMe SSDs in your BeeGFS deployment is that these disks are not persistent, so you will lose your data once you stop/deallocate your BeeGFS filesystem. One approach to overcome this limitation is to use the storage pools feature of BeeGFS, which allows you to have mixed disks as part of your BeeGFS filesystem. The idea is to add cheap HDD managed disks to an NVMe SSD-based BeeGFS parallel filesystem to provide data persistence. The details of how to deploy BeeGFS with NVMe and HDD using storage pools are described below.

BeeGFS Storage pools architecture (NVMe SSD and HDD)

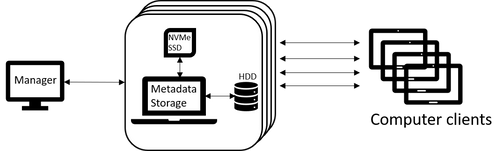

Fig. 1 BeeGFS storage pools architecture. BeeGFS storage and metadata share an NVMe SSD on each VM, and each VM has an HDD data disk attached. There are two storage pools: the NVMe SSD storage pool for performance (I/O processing) and the HDD storage pool for data persistence/backup. The BeeGFS manager is only used for BeeGFS administration and can be deployed on a smaller VM.

Deployment

The AzureCAT HPC azurehpc repository will be used to deploy the BeeGFS storage pools architecture.

git clone git@github.com:Azure/azurehpc.git

We will be following closely the azurehpc beegfs_pools example (in azurehpc/examples/beegfs_pools).

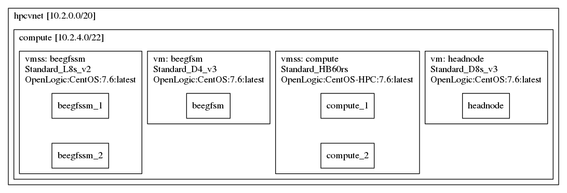

Fig. 3 Details of the BeeGFS storage pools configuration in the config.json file of the azurehpc beegfs_pools example.

- Initialize a new azurehpc project using the azhpc-init command (this creates a working config.json file).

$ azhpc-init -c $azhpc_dir/examples/beegfs_pools -d beegfs_pools -s

Fri Jun 28 08:50:25 UTC 2019 : variables to set: "-v location=,resource_group="

- Set the config.json template with your desired values.

azhpc-init -c $azhpc_dir/examples/beegfs_pools -d beegfs_pools -v location=westus2,resource_group=azhpc-cluster

- Create the BeeGFS storage pool parallel filesystem (NVMe SSD and HDD).

azhpc-build

- Connect to the BeeGFS master/manager node (beegfsm).

azhpc-connect -u hpcuser beegfsm

- Check that the BeeGFS storage pools are set up and available for use.

$ beegfs-ctl --liststoragepools

Pool ID   Pool Description   Targets   Buddy Groups

=======   ================   =======   ============

1         Default            2,4

2         hdd_pool           1,3

- The HDD disks can be accessed at the /beegfs/hdd_pools mount point (i.e. storage pool 2) and the NVMe SSD disks at /beegfs (i.e. storage pool 1).
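The pool check above can also be scripted. The following is a minimal sketch, assuming the output of beegfs-ctl --liststoragepools keeps the column layout shown above (numeric pool ID, a description without spaces, then the target list). The function names pool_targets and pool_has_targets are hypothetical helpers, not part of BeeGFS or azurehpc.

```shell
# Print the target list of a named storage pool.
# Reads `beegfs-ctl --liststoragepools` output on stdin.
# Assumption: columns are "ID  Description  Targets", and the
# description contains no spaces (true for "Default" and "hdd_pool").
pool_targets() {
    awk -v name="$1" '$1 ~ /^[0-9]+$/ && $2 == name { print $3 }'
}

# Succeed only if the named pool is listed with at least one target.
pool_has_targets() {
    [ -n "$(pool_targets "$1")" ]
}
```

On the manager node this could be used as `beegfs-ctl --liststoragepools | pool_has_targets hdd_pool || echo "hdd_pool missing"`.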

Data Migration

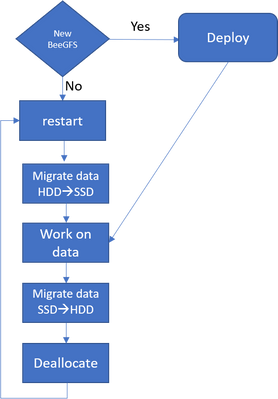

Fig. 2 BeeGFS storage pools data workflow. If the BeeGFS data is already available on HDD, migrate it to the NVMe SSDs before doing any I/O processing.

After I/O processing is complete, the data can be migrated to permanent storage (HDD) using the following procedure.

- Copy the data to HDD permanent storage.

cp -R /beegfs/data /beegfs/hdd_pools

or

beegfs-ctl --migrate --storagepoolid=1 --destinationpoolid=2 /beegfs/data

- Save/copy the BeeGFS metadata to the permanent home directories of the BeeGFS storage/metadata VMs.

WCOLL=beegfssm pdsh "cd /mnt/beegfs; sudo tar czvf /home/${user}/beegfs_meta.tar.gz meta/ --xattrs"

- The BeeGFS parallel filesystem can now be stopped.
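Because the NVMe SSD contents are lost when the filesystem is stopped, it may be worth confirming that the copy on HDD is complete first. The sketch below is one way to do that for the cp-based copy, under stated assumptions: tree_manifest and verify_copy are hypothetical helper names, and cksum (a CRC, not a cryptographic hash) is used only to detect missing or truncated files. It reads every file back, so it can be slow on large datasets.

```shell
# List "checksum size path" for every regular file under a directory,
# with paths relative to that directory and in a stable order.
tree_manifest() {
    (cd "$1" && find . -type f -exec cksum {} + | sort)
}

# Compare two directory trees by their manifests.
# Returns 0 if identical, 1 if they differ, 2 if a directory is missing.
verify_copy() {
    vc_src="$1"
    vc_dst="$2"
    if [ ! -d "$vc_src" ] || [ ! -d "$vc_dst" ]; then
        return 2
    fi
    vc_src_list="$(mktemp)"
    vc_dst_list="$(mktemp)"
    tree_manifest "$vc_src" > "$vc_src_list"
    tree_manifest "$vc_dst" > "$vc_dst_list"
    if diff "$vc_src_list" "$vc_dst_list" > /dev/null; then
        vc_status=0
    else
        vc_status=1
    fi
    rm -f "$vc_src_list" "$vc_dst_list"
    return "$vc_status"
}
```

With the paths used in this post, the check would be `verify_copy /beegfs/data /beegfs/hdd_pools/data`, run before the filesystem is stopped.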

After the BeeGFS parallel filesystem is restarted, the BeeGFS data can be migrated back to the BeeGFS NVMe SSDs with the following procedure.

- Restart the BeeGFS parallel filesystem

- Restore the BeeGFS metadata.

WCOLL=beegfssm pdsh "cd /mnt/beegfs; sudo tar xvf /home/${user}/beegfs_meta.tar.gz --xattrs"

- Restore the BeeGFS storage data (to the NVMe SSDs).

cp -R /beegfs/hdd_pools/data /beegfs/data

or

beegfs-ctl --migrate --storagepoolid=2 --destinationpoolid=1 /beegfs/data
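The checkpoint and restore migrations differ only in which pool is the source and which is the destination, so it is easy to swap the IDs by mistake. The sketch below keeps both directions in one place. It only prints the command rather than running it. The pool IDs are those reported for this deployment (1 for the NVMe SSD pool, 2 for hdd_pool), and migrate_cmd is a hypothetical helper name.

```shell
# Pool IDs as reported by `beegfs-ctl --liststoragepools` in this deployment.
NVME_POOL_ID=1   # "Default" pool (NVMe SSD)
HDD_POOL_ID=2    # "hdd_pool" (HDD managed disks)

# Print (do not run) the migrate command for a direction and a path.
#   to_hdd  : checkpoint, NVMe SSD pool -> HDD pool
#   to_nvme : restore,    HDD pool -> NVMe SSD pool
migrate_cmd() {
    case "$1" in
        to_hdd)
            echo "beegfs-ctl --migrate --storagepoolid=$NVME_POOL_ID --destinationpoolid=$HDD_POOL_ID $2"
            ;;
        to_nvme)
            echo "beegfs-ctl --migrate --storagepoolid=$HDD_POOL_ID --destinationpoolid=$NVME_POOL_ID $2"
            ;;
        *)
            echo "usage: migrate_cmd to_hdd|to_nvme <path>" >&2
            return 1
            ;;
    esac
}
```

For example, `migrate_cmd to_nvme /beegfs/data` prints the restore command shown above, which can be reviewed before it is run.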

Testing BeeGFS

The azurehpc repository contains a number of scripts to measure storage throughput and IOPS using the IOR and FIO benchmark codes; see azurehpc/apps/ior and azurehpc/apps/fio.

Conclusion

BeeGFS has a built-in feature called storage pools, which allows a parallel filesystem to be deployed with different types of disks, creating pools of storage resources with different performance characteristics for flexibility. This feature can be used to add data persistence to a BeeGFS parallel filesystem built on ephemeral NVMe SSDs by attaching low-cost HDD data disks.

The deployment of BeeGFS storage pools has been automated in the AzureCAT HPC azurehpc repository. The checkpoint/restart of a BeeGFS parallel filesystem has also been discussed, including how the data can be migrated between the NVMe SSDs and the HDDs.

This solution provides the best of both worlds: the fast performance of NVMe SSDs with the low cost of persistent HDDs.
