- Home

- Azure Data

- Azure Database for PostgreSQL Blog

- Azure Database for PostgreSQL : Logical Replication

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

With Azure Database for PostgreSQL Flexible Server (Shortened to Flex for this article), customers now have the option of using the standard PostgreSQL Native Logical Replication feature to implement data replication solutions. These solutions can be in either direction, for example, on-prem to Azure or vice versa. There are many scenarios that can take advantage of this feature, for example:

- One-time data migration from practically anywhere to Azure

- Ongoing Change Data Capture

- Replication between two separate Flex instances in different Azure regions for reporting, analytics or even disaster recovery purposes

- Customer managed multi-master replication between separate Flex instances or even on-prem to azure or vice-versa. Please note that data conflicts can happen, and you will need to manage these – be sure to read the docs (link below)

To get the most from this article it is probably a pre-requisite for you to have an intermediate to advanced understanding of PostgreSQL and knowledge of networking and security concepts. Here is a high-level logical diagram showing the PostgreSQL Logical Replication components:

One or more ‘Publications’ can be setup on a source database that wishes to be a publisher for tables. These can then be consumed by a subscriber database, by configuring a ‘Subscription’.

The official documentation link PostgreSQL Native Logical Replication provides details on the following;

- Architecture

- Configuration Settings

- Publications and Subscriptions

- Replication Slot Management

- Conflicts

- Restrictions

- Monitoring

- Security

This article will hopefully provide you with enough information on the core concepts involved in logical replication and should then allow you to adapt those learnings to your own environment, where you may already have the source database and existing tables with data. In this article I will focus on using logical replication to setup a selective one-way replication from an on-prem instance of a PostgreSQL database to a Flex instance on the Azure cloud in line with the diagram below:

Our Flex instance has been configured to use the Public Access networking option. We won’t cover the option of Private Access, however the same principles would apply to the database configuration to use PostgreSQL Logical Replication and all that would change would be the underlying networking configuration.

We are going to set this up so that all 4 tables in public schema of the the On-Premises server are replicated to the Flex instance but into the postgres database into its public schema. The source tables to be replicated are:

- public.pgbench_accounts

- public.pgbench_branches

- public.pgbench_history

- public.pgbench_tellers

You might notice these table names if you have ever used the standard PostgreSQL benchmarking utility pgbench. I am using that tool here to simplify the creation of tables and data.

At a high level these are the configuration steps necessary to tie this all together to produce a working example. Configuration of:

- Required source database settings

- Firewall – allow access to and from both source and target databases

- Schema objects and data

- Publications

- Subscriptions

Let’s get started….

PostgreSQL Settings

For logical replication to work, the source PostgreSQL instance must have the parameter ‘wal_level’ set to ‘logical’. You can check this by issuing the following command in psql or pgadmin:

show wal_level;

If it responds with anything other than ‘logical’ you will need to make that change in your PostgreSQL instance’s configuration file, typically postgresql.conf. On my Debian source system that file is located in /etc/postgresql/14/main directory. Other items to check when you are there are:

- listen_addresses (defaults to ‘localhost’ but you will need to change that and update pg_hba.conf to allow connections from Azure)

- port (defaults to 5432, but on hosts where you have many PostgreSQL clusters your specific database might be listening on a non-default port)

After making any necessary changes, you will need to restart your PostgreSQL cluster, on Debian I use the helper script like so:

- pg_ctlcluster 14 main restart

Once you have confirmed that your wal_level is now set to ‘logical’ you can proceed to the next step.

Firewall Settings

As mentioned above, I’m using public addressable networking for my Flex instance and not Private Networking with VNET. With either access method, you’ll need to allow traffic to flow in both directions between your databases:

- Azure to On-prem

- On-prem to Azure

There are certain times when configuring or modifying logical replication that the target database will initiate a connect to the source. You’ll have to ensure that your ‘on-premises’ PostgreSQL database is contactable from your Flex instance and vice-verssa when you complete the logical replication setup. Typically, this will involve setup of NAT and a firewall rule, do note that your on-prem PostgreSQL port number (default 5432) will need to be known to set this up (see the section below on logical replication ‘subscriptions’). On my on-prem server.

Setup of Database Objects & Data

As I mentioned earlier, I’m using pgbench to create both schema objects and data for this article to explain the whole process. If you already have your source tables with data, you can skip this step and simply create the same objects in the target database by either using pg_dump and pg_restore or simply running the DDL on the target database. I have pgbench installed on my on-prem database server. The steps to create the pgbench schema objects, which contains 4 tables, are straightforward for both source and destination databases.

On the source I ran this command:

postgres@deb:~$ pgbench -h 127.0.0.1 -s 10 -i -I dtpg -p 5436 -d mydb -U postgres

This tells pgbench to connect to local postgresql listening on port 5436, specifically the database ‘mydb’ and drop tables, create tables with primary keys and create data. Output should be similar to this:

dropping old tables...

creating tables...

creating primary keys...

generating data (client-side)...

1000000 of 1000000 tuples (100%) done (elapsed 0.03 s, remaining 0.00 s)

done in 0.29 s (drop tables 0.01 s, create tables 0.00 s, primary keys 0.01 s, client-side generate 0.27 s).

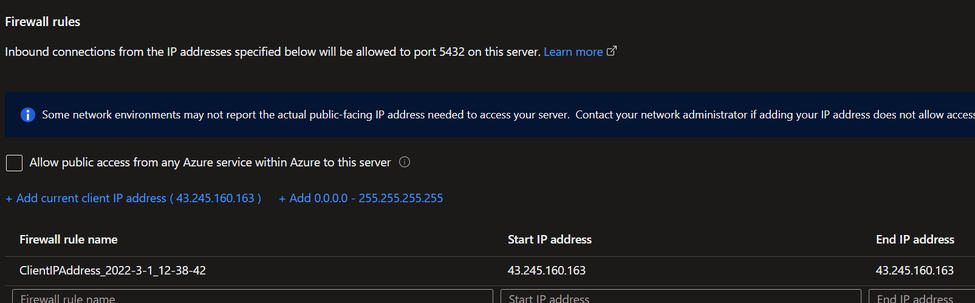

Moving on to my Azure Flex instance, it already allows access from my on-prem IP address as I added a rule for that:

This allows me to run this command from my on-prem server:

postgres@deb:~$ pgbench -h aue-flex13.postgres.database.azure.com -i -I dtp -p 5432 -d postgres -U dbadmin

This tells pgbench to connect to my flex instance, specifically the database ‘postgres’ and drop tables, create tables and primary keys but, importantly, not create any data for the tables. Output should be like this:

dropping old tables...

creating tables...

creating primary keys...

done in 0.20 s (drop tables 0.03 s, create tables 0.08 s, primary keys 0.09 s).

Now we have the tables matching between on-prem and Flex but there is data only in the on-prem ‘mydb’ database.

PostgreSQL Logical Replication - Publications

This step involves setting up a Logical Replication ‘Publication’ on your source database for the tables you want to publish. See the official doc PostgreSQL: Documentation: 30.1. Publication for more details on the options available. The creation of the publication can be performed with the individual commands below or it can be scripted if, for example, you want to publish a larger number of tables, or perhaps all tables from one or more schemas:

create publication mydbpublic;

alter publication mydbpublic add table public.pgbench_accounts;

alter publication mydbpublic add table public.pgbench_history;

alter publication mydbpublic add table public.pgbench_branches;

alter publication mydbpublic add table public.pgbench_tellers;

Note that setting up a publication does not start any replication.

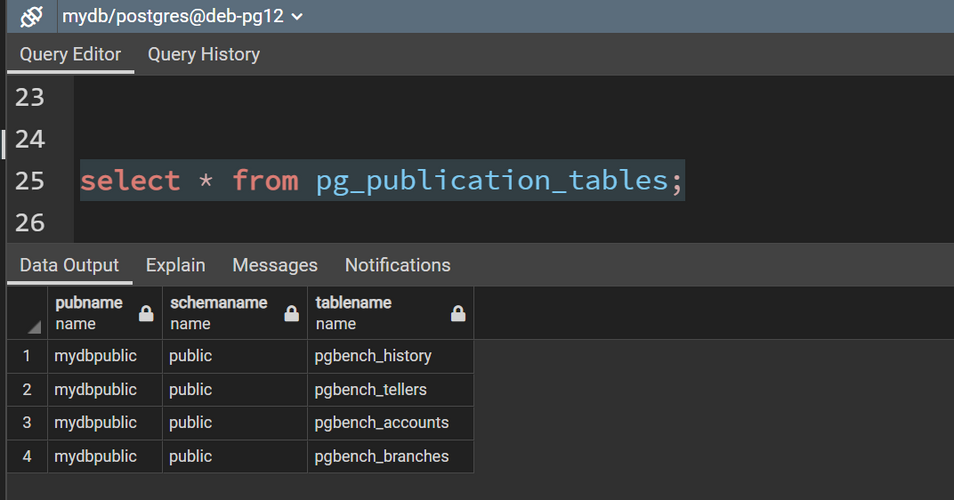

You can check on the publication configuration by using the following commands:

select * from pg_publication;

select * from pg_publication_tables;

Output should be similar the screenshots below:

PostgreSQL Logical Replication - Subscriptions

This step involves setting up a Logical Replication ‘Subscription’ on your target database for the tables you want to subscribe to. Here is the link to the official documentation PostgreSQL: Documentation:: 30.2. Subscription which explains more details and the options available.

Running this command (from psql, pgadmin or your favourite tool) will now finalize the setup and start the replication by default, it is possible to disable the initial sync:

create subscription sub_onprem_mydb_public connection 'host=dns.or.ip.of.yourserver port=yourport user=youruser dbname=postgres password=yourpassword' publication mydbpublic;

Note that executing the above command will lead to the target database (Flex in Azure) connecting to your source database with the details you provided. This means that network traffic will originate from an Azure managed IP address as described above.

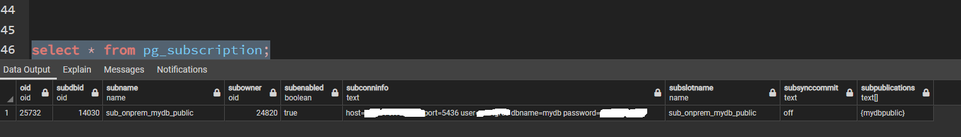

You can check on the publication configuration by using the following command:

select * from pg_subscription;

And you should see output like this for your subscription;

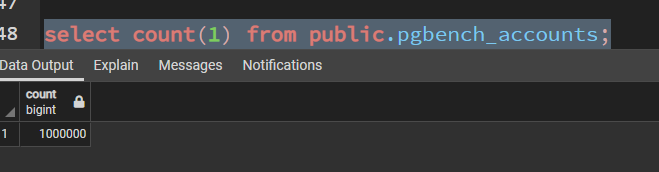

Now to the final test, did my data replicate from mydb on-prem to postgres in Azure ? Give it a couple of minutes and try running something like this on your target database:

select count(1) from public.pgbench_accounts;

Yes, all 1 million rows have now been copied from source to target as part of the initial sync that happens by default when you create the subscription.

Now that we have set it up and can see it working, how about we run some pgbench benchmarks and prove that the solution works under a bit of pressure? Running the command below on my on-prem server will execute a read/write benchmark on the source database:

postgres@deb:~$ pgbench -h 127.0.0.1 -p 5436 -j 8 -c 50 -d mydb -U postgres

…. SNIP ….

pgbench: client 12 receiving

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 10

query mode: simple

number of clients: 50

number of threads: 8

number of transactions per client: 10

number of transactions actually processed: 500/500

latency average = 40.184 ms

initial connection time = 180.443 ms

tps = 1244.288715 (without initial connection time)

How will I know the replication has worked ? Well, pgbench by default keeps a history of changes in the pgbench_history table, with a ‘delta’ column keeping a sort of transaction history. If you run something like this on both source and target databases the results should be identical:

select sum(delta) from public.pgbench_history;

First on the source;

And then on the target

Now is a good time to highlight some very important restrictions to consider. These are detailed in the PostgreSQL community docs here PostgreSQL: Documentation: 31.4. Restrictions and you should make yourself familiar with them.

Managing DDL Changes

It is probably a fairly common requirement to change some table structures at some point in time during an application’s lifecycle. It is important to note that Logical Replication will not replicate any DDL changes in either direction. For example, if you want to make a table definition change, like adding a column, this will break the replication. Perhaps the best way to manage this is to first stop the replication by using the ALTER SUBSCRIPTION command E.g.:

alter subscription sub_onprem_mydb_public disable;

This has the effect of pausing replication. Once complete you can alter the target table and add the column, then alter the source table and add the column. After that you can re-enable replication:

alter subscription sub_onprem_mydb_public enable;

Managing Conflicts

I mentioned Multi-Master replication in the intro but would like to touch on it a little more. There is nothing to stop you from designing, implementing and testing a setup like this where your application can use both databases and write to both databases, and even the same tables on these databases. It is important to note that PostgreSQL will not stop you from doing that, but should any conflict arise, replication will stop until you resolve the conflict. Details here PostgreSQL: Documentation: 31.3. Conflicts

Summary

Hopefully this article has helped you understand what PostgreSQL Logical Replication is and what it can and can’t do and provided some food for thought when either migrating to Azure Flexible Server or designing a new multi-master database for a business critical application.

References

Here is the link to the Azure documentation for Logical replication and logical decoding - Azure Database for PostgreSQL - Flexible Server | Microso...

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.