ADF Adds Support for Inline Datasets and Common Data Model to Data Flows

Azure Data Factory makes ETL even easier when working with corporate data entities by adding support for inline datasets and the Common Data Model (CDM, available as a public preview connector). With CDM, you can express common schemas and semantics across applications. With CDM now available as a source and destination format in ADF's data flow ETL engine, you can read CDM entity files using either manifest files or model.json. Likewise, you can write to CDM's manifest format by using an ADLS Gen2 sink in your ADF data flow.

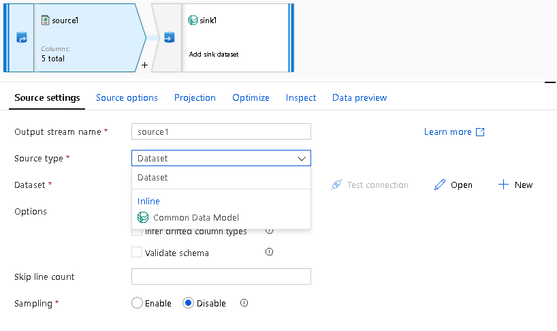

We expose the CDM format to your ADF data flow source and sink transformations through the new inline dataset option. Previously, ADF required you to create or reuse a dataset, which is a shared entity across an entire factory. Now data flows let you define your source and sink formats inline in the transformation, without requiring a shared dataset.

Data flow source and sink transformations now have a new "Type" selector. The default is "Dataset", which covers the most common use case, so you will not have to change anything in existing data flows: you follow the same workflow of creating or choosing a shared dataset for your source and sink.

CDM is the first ADF format that is available only as an inline dataset. Choose "Common Data Model" from the list of inline types, then pick the ADLS Gen2 linked service where your CDM entity files are located. All of the other data flow transformations remain fully supported, so you can use them in the same flow.
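To make the "Type" selector concrete, here is a minimal sketch that models the choice between a shared dataset and an inline format, and flags the two CDM constraints described above (inline only, ADLS Gen2 linked service). The class, field names, and values are hypothetical illustrations, not ADF's actual JSON schema or API.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical model of the "Type" selector; field names and values are
# illustrative and are NOT ADF's actual JSON property names.
INLINE_ONLY_FORMATS = {"Common Data Model"}


@dataclass
class TransformationSettings:
    type: str = "Dataset"                      # "Dataset" (default) or "Inline"
    dataset_name: Optional[str] = None         # shared dataset, used when type == "Dataset"
    format_name: Optional[str] = None          # e.g. "Common Data Model"
    linked_service_type: Optional[str] = None  # e.g. "ADLS Gen2"


def validate(settings: TransformationSettings) -> List[str]:
    """Return a list of configuration problems; an empty list means valid."""
    problems = []
    if settings.type == "Dataset":
        if not settings.dataset_name:
            problems.append("Dataset type requires a shared dataset")
        if settings.format_name in INLINE_ONLY_FORMATS:
            problems.append(f"{settings.format_name} is available only as an inline dataset")
    elif settings.type == "Inline":
        if not settings.format_name:
            problems.append("Inline type requires a format")
        if (settings.format_name == "Common Data Model"
                and settings.linked_service_type != "ADLS Gen2"):
            problems.append("CDM entity files must be on an ADLS Gen2 linked service")
    else:
        problems.append(f"Unknown type: {settings.type!r}")
    return problems
```

For example, `validate(TransformationSettings(type="Inline", format_name="Common Data Model", linked_service_type="ADLS Gen2"))` returns an empty list, while the default `"Dataset"` type with no dataset name is reported as a problem.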

NOTE: If you use a model.json source from Power BI or Power Platform dataflows and encounter "corpus path is null or empty" errors, the cause is likely a formatting issue in the partition location paths in model.json. Here is how to fix it:

1. Open the model.json file in a text editor

2. Find the partitions.Location property

3. Change "blob.core.windows.net" to "dfs.core.windows.net"

4. Replace any "%2F" encoding in the URL with "/"
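If you have several model.json files to repair, the four steps above can be scripted. This is a minimal sketch that assumes the partition URLs sit at `entities[*].partitions[*].location` (the key is matched case-insensitively because the note spells it "Location"); it also decodes lowercase `%2f`, an assumption beyond the original steps. The function names are hypothetical, and you should keep a backup copy of the file before rewriting it.

```python
import json
from pathlib import Path


def fix_partition_location(url: str) -> str:
    """Apply steps 3 and 4 to a single partition URL."""
    url = url.replace("blob.core.windows.net", "dfs.core.windows.net")
    # Decode encoded forward slashes in both upper- and lowercase hex form.
    return url.replace("%2F", "/").replace("%2f", "/")


def fix_model_json(model: dict) -> int:
    """Fix every partition location in a parsed model.json; return how many changed."""
    changed = 0
    for entity in model.get("entities", []):
        for partition in entity.get("partitions", []):
            # Copy the items so we can safely assign while iterating.
            for key, value in list(partition.items()):
                if key.lower() == "location" and isinstance(value, str):
                    fixed = fix_partition_location(value)
                    if fixed != value:
                        partition[key] = fixed
                        changed += 1
    return changed


def fix_model_json_file(path: str) -> int:
    """Open model.json, fix partition locations, and save only if anything changed."""
    p = Path(path)
    model = json.loads(p.read_text(encoding="utf-8"))
    changed = fix_model_json(model)
    if changed:
        p.write_text(json.dumps(model, indent=2), encoding="utf-8")
    return changed
```

Note that rewriting the file re-serializes the JSON with two-space indentation, so whitespace in the saved file may differ from the original even though the content is the same apart from the fixed URLs.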

You can mix and match linked service and dataset types, too. For example, you can read from an Azure SQL Database source, dedupe some of the table data with an Aggregate transformation, and then sink the transformed rows into CDM partitions. Likewise, you could take an existing set of CDM entities, transform the incoming entity data, and load it into a SQL database.
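The dedupe step in that example amounts to grouping rows by key columns and keeping one row per group. This sketch shows that logic in plain Python, keeping the first row seen for each key; it is an illustration of the idea, not ADF's Aggregate implementation, and the `dedupe_rows` name and sample columns are hypothetical.

```python
from typing import Dict, Iterable, List, Tuple


def dedupe_rows(rows: Iterable[dict], key_columns: List[str]) -> List[dict]:
    """Keep the first row seen for each combination of key column values."""
    first_by_key: Dict[Tuple, dict] = {}
    for row in rows:
        key = tuple(row[c] for c in key_columns)
        # setdefault stores the row only the first time this key appears.
        first_by_key.setdefault(key, row)
    # Dicts preserve insertion order, so output follows first occurrence.
    return list(first_by_key.values())


rows = [
    {"CustomerId": 1, "Name": "Ana"},
    {"CustomerId": 2, "Name": "Ben"},
    {"CustomerId": 1, "Name": "Ana B."},
]
print(dedupe_rows(rows, ["CustomerId"]))
# [{'CustomerId': 1, 'Name': 'Ana'}, {'CustomerId': 2, 'Name': 'Ben'}]
```

Which duplicate survives ("first" here) is a design choice; in a real flow you would decide that per column, for example keeping the latest timestamp instead.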

You'll notice that the source and sink settings are more complex when using an inline dataset. Because you are not using a shared configuration (dataset), the transformation itself must capture details such as your CDM entity file locations and corpus settings. As more formats are added to the inline dataset option, the source and sink settings will vary with the format you select. Note that with the CDM format, ADF expects the entity reference to be located in ADLS Gen2 or GitHub, so select the appropriate linked service to point to the entity files.
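As a rough illustration of the extra settings an inline CDM transformation carries, this sketch checks one hypothetical configuration against the constraints stated in this post: sources can read manifest or model.json, sinks write the manifest format, and the entity reference must live in ADLS Gen2 or GitHub. The class, field names, and option strings are assumptions for illustration; ADF's real property names differ.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical option values; the allowed sets restate constraints from the text.
SOURCE_METADATA_FORMATS = {"manifest", "model.json"}  # both readable as a source
SINK_METADATA_FORMATS = {"manifest"}                  # sinks write the manifest format
CORPUS_STORES = {"ADLS Gen2", "GitHub"}               # where the entity reference lives


@dataclass
class CdmInlineSettings:
    metadata_format: str  # "manifest" or "model.json"
    root_location: str    # container/folder holding the CDM entity files
    corpus_store: str     # store holding the entity reference
    entity: str           # entity to read or write
    is_sink: bool = False


def check_cdm_settings(s: CdmInlineSettings) -> List[str]:
    """Return the problems found in one inline CDM configuration."""
    problems = []
    allowed = SINK_METADATA_FORMATS if s.is_sink else SOURCE_METADATA_FORMATS
    if s.metadata_format not in allowed:
        role = "sink" if s.is_sink else "source"
        problems.append(f"{s.metadata_format!r} is not supported for a CDM {role}")
    if s.corpus_store not in CORPUS_STORES:
        problems.append(f"entity reference must be in ADLS Gen2 or GitHub, not {s.corpus_store!r}")
    if not s.root_location or not s.entity:
        problems.append("root location and entity name are required")
    return problems
```

Keeping the allowed values in module-level sets makes it easy to see, in one place, how the settings would change as more inline formats or stores are added.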

When using inline datasets, you may want to import the target schema, as you would with Import Schema in ADF datasets. In inline mode, this option is available directly inside the source and sink transformations.

In this example, I'm using a CDM sink format type, so the CDM entity definition includes a rich set of schema semantics that ADF can read and use for your mappings and transformations. I click Import Schema to load the columns and data types into my sink mapping, and then View Schema to enable schema drift or schema validation and to view the columns defined in my target entity schema.
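Conceptually, Import Schema reads the column names and data types from the entity definition, and schema validation compares incoming columns against them. This sketch shows both ideas using a parsed model.json, assuming the layout `entities[*].attributes[*]` with `name` and `dataType` fields. The functions are hypothetical, and the drift rule here (extra columns allowed only when drift is enabled, missing columns always reported) is a simplification, not ADF's exact behavior.

```python
from typing import Dict, List


def import_schema(model: dict, entity_name: str) -> Dict[str, str]:
    """Return {column name: data type} for one entity in a parsed model.json."""
    for entity in model.get("entities", []):
        if entity.get("name") == entity_name:
            return {a["name"]: a.get("dataType", "unknown")
                    for a in entity.get("attributes", [])}
    raise KeyError(f"entity {entity_name!r} not found")


def validate_columns(columns: List[str], schema: Dict[str, str],
                     allow_drift: bool) -> List[str]:
    """Compare incoming column names with the imported schema."""
    problems = [f"missing column: {c}" for c in schema if c not in columns]
    if not allow_drift:
        # Without drift, any column not in the target schema is an error.
        problems += [f"unexpected column: {c}" for c in columns if c not in schema]
    return problems


model = {"entities": [{"$type": "LocalEntity", "name": "Customer", "attributes": [
    {"name": "CustomerId", "dataType": "int64"},
    {"name": "Name", "dataType": "string"},
]}]}
schema = import_schema(model, "Customer")
print(schema)  # {'CustomerId': 'int64', 'Name': 'string'}
print(validate_columns(["CustomerId", "Name", "Email"], schema, allow_drift=False))
# ['unexpected column: Email']
```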
