- Home

- Azure Data

- Azure Data Factory Blog

- Load different SQL database tables based on different file types in ADF

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

A very common scenario in data lakes is that you will receive files that land in different types and shapes and you need to load those into database tables.

To accomplish this in an automated, operationalized manner, you will need an ETL process to ingest those files and load them correctly into the proper SQL database table.

With ADF, you can set either polling via scheduled or window triggers. Or you can use event-based file arrival triggers to trigger a pipeline with a data flow.

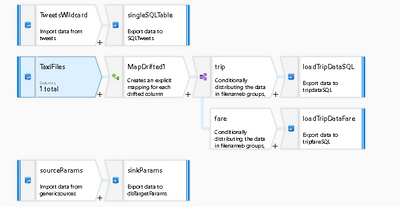

In this case, data flow is used with many different sources, reading from multiple folders with wildcards, and landing the data into different SQL tables based on rules-based criteria.

If you need to pick-up many different files in a source Blob or ADLS folder, you can use wildcards (*Tweets.csv) with no schema defined in the dataset. Set "Allow schema drift" in your source & sink and then create an on-the-fly SQL database table. That is shown in the video and diagram above in the top stream. The sink dataset has no schema defined for the SQL table. The table is defined and generated on the fly based on the source metadata and auto-mapping is set in the Sink.

The 2nd example stream in this data flow uses wildcarding to pick-up 2 files: {trip_data_1.csv, trip_fare_1.csv}. By using the "Column to store file name" property in the source, ADF will maintain the name of the file along with the row data. This lineage allows you to use a Conditional Split transformation based on the file name so that you can load the fare data into the fares SQL table and the trip data into the Trip Data SQL table using 2 different sink transformations.

Finally, the 3rd example uses parameters. In this case, I have a data flow parameter that stores the name of the incoming filename and a dataset parameter that defines the name of the table that I wish to load with no schema. I am using Auto Mapping in the schema to keep this flow generic and reusable.

In all of these cases, because I need to search and iterative many files in the source, I have simple, generic source datasets that only define the Linked Service credentials to my storage account and a blank dataset that only points to my storage container. The wildcarding handles the path and filename resolution.

When you execute this data flow as a single data flow activity in an ADF pipeline the entire set of 3 streams will all run together on a single Spark execution at scale with no extra effort on your part.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.