Accelerated Networking on HB, HC, HBv2, HBv3 and NDv2

Azure Accelerated Networking is now available on the RDMA over InfiniBand capable and SR-IOV enabled VM sizes HB, HC, HBv2, HBv3 and NDv2. Accelerated Networking enables Single Root I/O Virtualization (SR-IOV) for a VM's Ethernet SmartNIC, resulting in enhanced throughput of 30 Gbps and lower, more consistent latencies over the Azure Ethernet network.

Note that this enhanced Ethernet capability is in addition to the RDMA capabilities over the InfiniBand network. Accelerated Networking over the Ethernet network improves the performance of loading VM OS images, accessing Azure Storage resources, and communicating with other resources, including Compute VMs.

When enabling Accelerated Networking on supported Azure HPC and GPU VMs, it is important to understand the changes you will see within the VM and what those changes may mean for your workloads. Some platform changes for this capability may affect the behavior of certain MPI libraries (particularly older versions) when running jobs over InfiniBand. Specifically, the InfiniBand interface on some VMs may have a slightly different name (mlx5_1 instead of the earlier mlx5_0), and this may require adjusting MPI command lines, especially when using the UCX interface (common with OpenMPI and HPC-X). This article provides details on how to address any observed issues.

Performance with Accelerated Networking

Performance data, with guidance on optimizations to achieve higher throughput of up to 38 Gbps on some VMs, is available in this blog post (Performance impact of enabling AccelNet). The scripts used are also available on GitHub.

Enabling Accelerated Networking

New VMs with Accelerated Networking (AN) can be created for Linux and Windows. Supported operating systems are listed in the respective links. AN can be enabled on an existing VM by deallocating the VM, enabling AN, and restarting the VM (instructions).
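The deallocate, enable, restart sequence for an existing VM might look like the following with the Azure CLI. This is a minimal sketch, not the linked instructions: the function name and its three arguments (resource group, VM name, NIC name) are hypothetical placeholders, and it assumes the `az` CLI is installed and logged in.

```shell
# Sketch: enable Accelerated Networking (AN) on an existing VM.
# Arguments are hypothetical placeholders: <resource-group> <vm-name> <nic-name>
enable_accelerated_networking() {
    local resource_group="$1"
    local vm_name="$2"
    local nic_name="$3"

    # 1. Stop and deallocate the VM so its NIC can be reconfigured.
    az vm deallocate --resource-group "$resource_group" --name "$vm_name" || return 1

    # 2. Enable Accelerated Networking on the VM's network interface.
    az network nic update --resource-group "$resource_group" --name "$nic_name" \
        --accelerated-networking true || return 1

    # 3. Start the VM again.
    az vm start --resource-group "$resource_group" --name "$vm_name"
}
```

It would be called as, for example, `enable_accelerated_networking my-rg my-hb-vm my-hb-vm-nic`, where all three names are made up for illustration.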

Note that certain methods of creating or orchestrating VMs enable AN by default. For example, if the VM type is AN-enabled, the Portal creation flow checks "Accelerated Networking" by default.

Some other orchestrators may also enable AN by default, such as CycleCloud, which is the simplest way to get started with HPC on Azure.

New network interface:

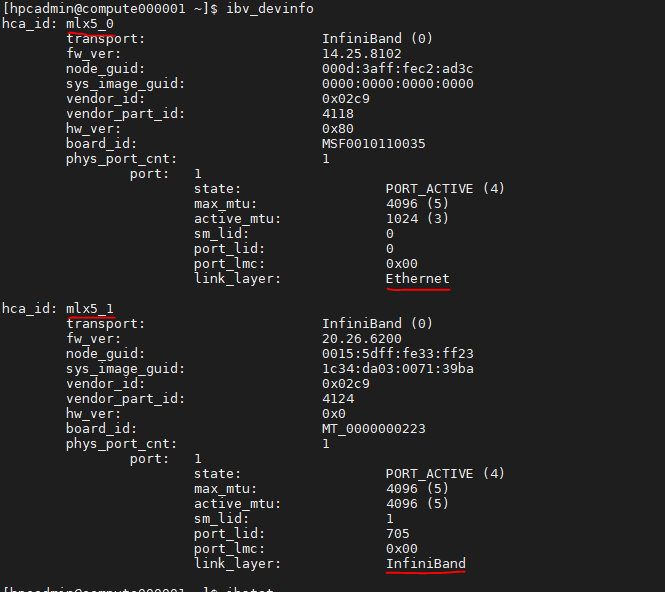

As the Ethernet NIC is now SR-IOV enabled, it shows up as a new network interface. Running the lspci command should show an additional Mellanox virtual function (VF) for Ethernet. On an InfiniBand-enabled VM, there will be two VFs (Ethernet and InfiniBand). For example, the following screenshot is from an Azure HBv2 VM instance (with OFED drivers for InfiniBand).

Figure 1: In this example, "mlx5_0" is the Ethernet interface, and "mlx5_1" is the InfiniBand interface.

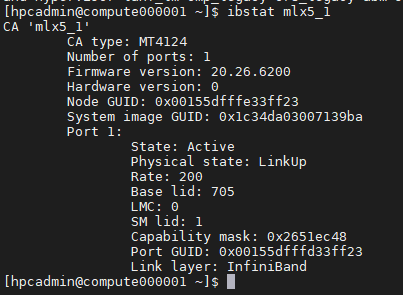

Figure 2: InfiniBand interface status (mlx5_1 in this example)
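The distinction shown in the figures can also be read from sysfs, where each RDMA device port exposes a `link_layer` file containing either `InfiniBand` or `Ethernet`. The helper below is a minimal sketch: the function name `find_ib_devices` and its optional sysfs-root argument are assumptions introduced for illustration.

```shell
# Sketch: print the RDMA devices whose port link layer is InfiniBand.
# The optional first argument overrides the sysfs root (handy for testing).
find_ib_devices() {
    local sysfs_root="${1:-/sys/class/infiniband}"
    local link_layer_file
    local dev_path

    for link_layer_file in "$sysfs_root"/*/ports/*/link_layer; do
        # Skip the unexpanded glob when no RDMA devices are present.
        [ -r "$link_layer_file" ] || continue
        if [ "$(cat "$link_layer_file")" = "InfiniBand" ]; then
            # Path is <root>/<device>/ports/<port>/link_layer; keep <device>.
            dev_path="${link_layer_file%/ports/*}"
            basename "$dev_path"
        fi
    done | sort -u
}
```

On a VM laid out like the one in Figure 1, `find_ib_devices` would be expected to print the InfiniBand device name only, skipping the Ethernet VF.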

Impact of additional network interface:

As InfiniBand device assignment is asynchronous, the device order in the VM can be random. That is, some VMs may get "mlx5_0" as the InfiniBand interface, whereas others may get "mlx5_1".

However, the naming can be made deterministic with a udev rule proposed by rdma-core.

    $ cat /etc/udev/rules.d/60-ib.rules
    # SPDX-License-Identifier: (GPL-2.0 OR Linux-OpenIB)
    # Copyright (c) 2019, Mellanox Technologies. All rights reserved. See COPYING file
    #
    # Rename modes:
    # NAME_FALLBACK - Try to name devices in the following order:
    #                 by-pci -> by-guid -> kernel
    # NAME_KERNEL - leave name as kernel provided
    # NAME_PCI - based on PCI/slot/function location
    # NAME_GUID - based on system image GUID
    #
    # The stable names are combination of device type technology and rename mode.
    # Infiniband - ib*
    # RoCE - roce*
    # iWARP - iw*
    # OPA - opa*
    # Default (unknown protocol) - rdma*
    #
    # Example:
    # * NAME_PCI
    #   pci = 0000:00:0c.4
    #   Device type = IB
    #   mlx5_0 -> ibp0s12f4
    # * NAME_GUID
    #   GUID = 5254:00c0:fe12:3455
    #   Device type = RoCE
    #   mlx5_0 -> rocex525400c0fe123455
    #
    ACTION=="add", SUBSYSTEM=="infiniband", PROGRAM="rdma_rename %k NAME_PCI"

With the above udev rule, the interfaces can be named as follows:

Note that the interface name can differ from VM to VM because the PCI ID of the InfiniBand VF is different on each VM. There is ongoing work to make the PCI ID consistent so that the interface name is the same across all VMs.

Impact on MPI libraries

Most MPI libraries do not need any changes to adapt to this new interface. However, certain MPI libraries, especially those using older UCX versions, may try to use the first available interface. If the first interface happens to be the Ethernet VF (due to asynchronous initialization), MPI jobs can fail when using such MPI libraries (and versions).
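For UCX-based libraries that pick the wrong device, one common adjustment is to name the InfiniBand device explicitly through the `UCX_NET_DEVICES` environment variable, which takes a `<device>:<port>` value. The sketch below only prints an Open MPI command line rather than running it. The helper name `build_mpirun_cmd` is hypothetical, and port 1 is an assumption.

```shell
# Sketch: print an Open MPI command line that pins UCX to one RDMA device.
# Arguments: <ib-device> <remaining mpirun arguments...>
build_mpirun_cmd() {
    local ib_dev="$1"
    shift
    # --mca pml ucx selects the UCX point-to-point layer;
    # -x exports UCX_NET_DEVICES=<device>:<port> to all ranks (port 1 assumed).
    printf '%s\n' "mpirun --mca pml ucx -x UCX_NET_DEVICES=${ib_dev}:1 $*"
}
```

For instance, `build_mpirun_cmd mlx5_1 -np 2 ./my_mpi_app` prints a command pinned to mlx5_1. The device name should be whichever one the VM actually reports as InfiniBand, and `./my_mpi_app` is a made-up binary name.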

When PKEYs are explicitly required (e.g. for Platform MPI) for communication with VMs in the same InfiniBand tenant, ensure that the PKEYs are read from the location that corresponds to the InfiniBand interface.
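Partition keys are exposed per device and port under sysfs, so the path changes with the device name. As a minimal sketch, the helper below lists the populated keys for a given device. The function name `list_pkeys`, the default port of 1, and the optional sysfs-root argument are assumptions for illustration.

```shell
# Sketch: print the populated partition keys (PKEYs) of one device port.
# Arguments: <device> [port, default 1] [sysfs root, default /sys/class/infiniband]
list_pkeys() {
    local dev="$1"
    local port="${2:-1}"
    local sysfs_root="${3:-/sys/class/infiniband}"
    local pkey_file
    local pkey

    for pkey_file in "$sysfs_root/$dev/ports/$port/pkeys"/*; do
        # Skip the unexpanded glob when the device or port does not exist.
        [ -r "$pkey_file" ] || continue
        pkey="$(cat "$pkey_file")"
        # Unused table slots read as 0x0000; skip them.
        [ "$pkey" = "0x0000" ] && continue
        echo "$pkey"
    done
}
```

Calling `list_pkeys mlx5_1` versus `list_pkeys mlx5_0` shows why the device name matters: only the InfiniBand device carries the tenant's keys.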

Resolution

The root cause has now been fixed in UCX. If you are using an MPI library that uses UCX, make sure to build against and use a UCX version that includes the fix.

HPC-X 2.7.4 for Azure, a recommended MPI implementation, includes this fix. An example of a 2.8.0 build, downloadable from NVIDIA Networking, is also available.

The Azure CentOS-HPC VM images on the Marketplace have also been updated with the above (and MOFED 5.2):

    Offer       Publisher    Sku       Urn                                           Version
    ----------  -----------  --------  --------------------------------------------  ---------------------------
    CentOS-HPC  OpenLogic    7.6       OpenLogic:CentOS-HPC:7.6:7.6.2021022200       7.6.2021022200
    CentOS-HPC  OpenLogic    7_6gen2   OpenLogic:CentOS-HPC:7_6gen2:7.6.2021022201   7.6.2021022201
    CentOS-HPC  OpenLogic    7.7       OpenLogic:CentOS-HPC:7.7:7.7.2021022400       7.7.2021022400
    CentOS-HPC  OpenLogic    7_7-gen2  OpenLogic:CentOS-HPC:7_7-gen2:7.7.2021022401  7.7.2021022401
    CentOS-HPC  OpenLogic    7_8       OpenLogic:CentOS-HPC:7_8:7.8.2021020400       7.8.2021020400
    CentOS-HPC  OpenLogic    7_8-gen2  OpenLogic:CentOS-HPC:7_8-gen2:7.8.2021020401  7.8.2021020401
    CentOS-HPC  OpenLogic    8_1       OpenLogic:CentOS-HPC:8_1:8.1.2021020400       8.1.2021020400
    CentOS-HPC  OpenLogic    8_1-gen2  OpenLogic:CentOS-HPC:8_1-gen2:8.1.2021020401  8.1.2021020401

Note that all the CentOS-HPC images in the Marketplace continue to be useful for all other scenarios.

More details on the Azure CentOS-HPC VM images are available in the blog post and on GitHub.
