AI - Azure AI services Blog

Customize a translation to make sense in a specific context


Translator is a cloud-based machine translation service you can use to translate text into 100+ languages with a few simple REST API calls. Out of the box, the Translator API supports many languages and features, but in some scenarios the translation doesn't quite fit the language of your domain. To solve this, you can use Custom Translator to train your own customized model. In this article we will dive into how Translator and Custom Translator work, discuss when to use which, and point you to the right documentation to get started.

Let’s start with the basics

First, you need to set up a Translator resource in Azure.

If you don't have an Azure account yet, you can get started with a free Azure account here.

The easiest way to set up a Translator resource is with the Azure CLI; alternatively, follow this link to create the resource in the portal.

az cognitiveservices account create --name Translator --resource-group Translator_RG --kind TextTranslation --sku S1 --location westeurope

For the pricing tier (sku) you have a few options: a free tier to get you started; a pay-as-you-go tier, where you only pay for what you use; and, if you know you are going to use the service a lot, pre-paid packages. More information on billing and costs can be found here.

Hello world with Text Translation

Text translation is a cloud-based REST API feature of the Translator service that uses neural machine translation technology to enable quick and accurate source-to-target text translation in real time across all supported languages.

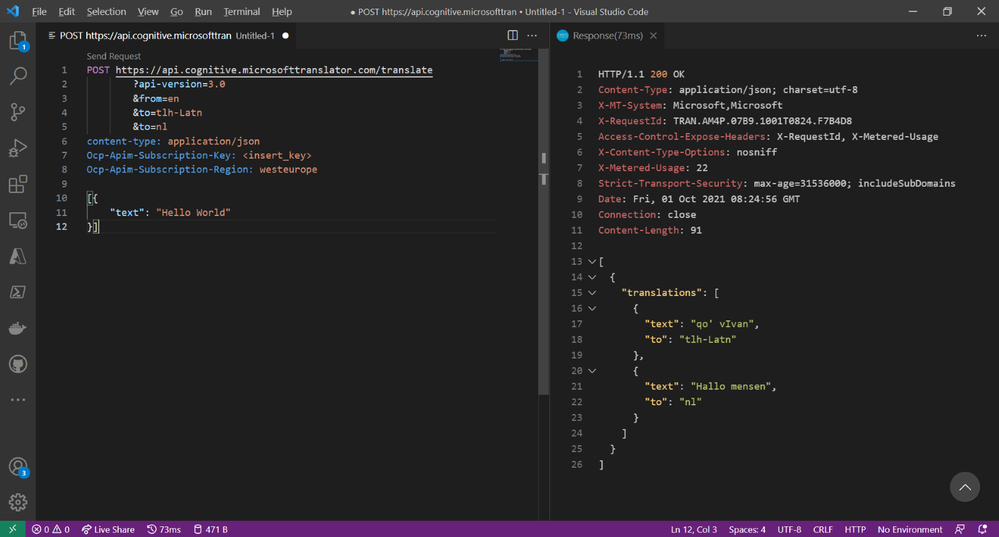

In this example we will use Visual Studio Code with the REST Client extension to call the Text Translation API.

Use the Azure CLI to retrieve the subscription keys:

az cognitiveservices account keys list --name Translator --resource-group Translator_RG

Next, POST the request to the Translator API.

In the URL you specify the from and to languages, and in the body you send the text that you want to translate. If you omit the “from” parameter, the API detects the source language automatically. You can repeat the “to” parameter to translate into several languages in one call; the request below targets both Klingon (tlh-Latn) and Dutch (nl).

The text array in the JSON body can have at most 100 elements, and the entire text included in the request cannot exceed 10,000 characters, including spaces.

POST https://api.cognitive.microsofttranslator.com/translate
?api-version=3.0
&from=en
&to=tlh-Latn
&to=nl
content-type: application/json
Ocp-Apim-Subscription-Key: <insert_subscription_key>
Ocp-Apim-Subscription-Region: westeurope

[{
    "text": "Hello World"
}]
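If you prefer scripting the call instead of using the REST Client extension, the same request can be sent with curl. The following is a minimal sketch, assuming bash and curl are installed and that one of the keys from `az cognitiveservices account keys list` is exported in a variable we call `TRANSLATOR_KEY` (a hypothetical name). The helpers `json_escape`, `build_translate_body` and `within_limits` are illustrative, not part of any SDK; `within_limits` checks the 100-element and 10,000-character limits before anything is sent.

```shell
#!/usr/bin/env bash
# Sketch: send the "Hello World" request with curl.
# TRANSLATOR_KEY is a hypothetical variable holding one of your subscription keys.

# Escape backslashes and double quotes so a string can be embedded in JSON.
# Control characters such as newlines are not handled in this sketch.
json_escape() {
  local s=$1
  s=${s//\\/\\\\}
  s=${s//\"/\\\"}
  printf '%s' "$s"
}

# Build the request body: [{"text":"..."},{"text":"..."}]
build_translate_body() {
  local body="[" sep="" t
  for t in "$@"; do
    body+="${sep}{\"text\":\"$(json_escape "$t")\"}"
    sep=","
  done
  printf '%s]' "$body"
}

# Check the limits: at most 100 array elements and at most 10,000 characters
# (including spaces) in total. ${#t} counts characters per the current locale.
within_limits() {
  local total=0 t
  (( $# <= 100 )) || return 1
  for t in "$@"; do
    total=$(( total + ${#t} ))
  done
  (( total <= 10000 ))
}

# POST the texts, translating from English into Klingon (Latin script) and Dutch.
translate() {
  within_limits "$@" || { echo "request exceeds Translator limits" >&2; return 1; }
  curl -sS -X POST \
    "https://api.cognitive.microsofttranslator.com/translate?api-version=3.0&from=en&to=tlh-Latn&to=nl" \
    -H "Content-Type: application/json" \
    -H "Ocp-Apim-Subscription-Key: ${TRANSLATOR_KEY}" \
    -H "Ocp-Apim-Subscription-Region: westeurope" \
    --data "$(build_translate_body "$@")"
}
```

For example, `translate "Hello World"` sends the same request as the REST Client version and prints the JSON response.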

What about bigger documents?

If your content exceeds the 10,000-character limit and you want to keep your original document structure, you can use the Document Translation API.

Document Translation is a cloud-based feature of the Azure Translator service and is part of the Azure Cognitive Services family of REST APIs. It can translate multiple, complex documents across all supported languages and dialects while preserving the original document structure and data format.

To use this service, you upload the source documents to Azure Blob Storage and provide a storage container for the translated target documents. Once that is in place, you can send the request to the API.

Read more about this API here.
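As a rough sketch of what such a request looks like, the bash functions below build a batch request body and POST it with curl. They assume the v1.0 batch endpoint (`/translator/text/batch/v1.0/batches`) on your resource's custom domain endpoint, and source and target container URLs that carry SAS tokens; check the Document Translation documentation for the current API version and authentication options. `TRANSLATOR_ENDPOINT`, `TRANSLATOR_KEY`, `build_batch_body` and `start_document_translation` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical placeholder: replace with your resource's custom domain endpoint.
TRANSLATOR_ENDPOINT=${TRANSLATOR_ENDPOINT:-https://<your-resource-name>.cognitiveservices.azure.com}

# Build a batch body with one source container and one target container.
#   $1: source container URL (with SAS)  $2: target container URL (with SAS)  $3: target language
build_batch_body() {
  printf '{"inputs":[{"source":{"sourceUrl":"%s"},"targets":[{"targetUrl":"%s","language":"%s"}]}]}' \
    "$1" "$2" "$3"
}

# Start the asynchronous job; -i prints response headers so you can see Operation-Location.
start_document_translation() {
  curl -sS -i -X POST "${TRANSLATOR_ENDPOINT}/translator/text/batch/v1.0/batches" \
    -H "Content-Type: application/json" \
    -H "Ocp-Apim-Subscription-Key: ${TRANSLATOR_KEY}" \
    --data "$(build_batch_body "$1" "$2" "$3")"
}
```

The service answers with 202 Accepted and an Operation-Location header; you poll that URL to follow the progress of the job.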

Customize the translation to make sense in a specific context

Now that we have covered how to get started with out-of-the-box translation, let's look at customization: in every language and context, you may need to adapt the translation to enforce domain-specific terminology.

Depending on the context, you can use the customization options built into the standard Text Translation API, or train your own domain-specific custom engines.

First, let's take a look at what can be done without training your own custom model.

Profanity filtering

By default, the Translator service keeps any profanity present in the source text in the translation. The degree of profanity and the context that makes words profane differ between cultures, so the degree of profanity in the target language may be amplified or reduced.

If you want to avoid seeing profanity in the translation, even when profanity is present in the source text, use the profanity filtering option available in the Translate() method. This option lets you choose whether you want profanity deleted, marked with appropriate tags, or left as-is (no action taken).

Read more here
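In the Translator v3 API this option is exposed as the `profanityAction` query parameter (`NoAction`, `Marked` or `Deleted`); with `Marked`, the `profanityMarker` parameter chooses between asterisks (`Asterisk`, the default) and XML tags (`Tag`). A minimal sketch of a helper that builds such a URL follows; the function name `build_profanity_url` is our own.

```shell
#!/usr/bin/env bash
# Build a Translate URL with profanity handling.
#   $1: target language, e.g. nl
#   $2: profanityAction: NoAction | Marked | Deleted
#   $3: profanityMarker, only used with Marked: Asterisk (default) | Tag
build_profanity_url() {
  local to=$1 action=$2 marker=${3:-Asterisk}
  local url="https://api.cognitive.microsofttranslator.com/translate?api-version=3.0&to=${to}&profanityAction=${action}"
  if [[ $action == "Marked" ]]; then
    url+="&profanityMarker=${marker}"
  fi
  printf '%s\n' "$url"
}
```

With `Marked` and `Tag`, profane words in the output are wrapped in `<profanity>` tags instead of being replaced by asterisks.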

Prevent translation of content

The Translator allows you to tag content so that it isn't translated. For example, you may want to tag code, a brand name, or a word/phrase that doesn't make sense when localized.
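One documented way to do this is to send the request with `textType=html` and wrap the content in an element with `class="notranslate"`. The bash function below is a minimal sketch (the name `protect_term` and the sample brand are hypothetical) that wraps every literal occurrence of a term in such a span. Because the request is then treated as HTML, any other `<`, `>` or `&` characters in the text should be escaped.

```shell
#!/usr/bin/env bash
# Wrap every literal occurrence of a term in <span class="notranslate">...</span>.
#   $1: text   $2: term that must not be translated
protect_term() {
  local rest=$1 term=$2 out=""
  local wrapped="<span class=\"notranslate\">${term}</span>"
  if [[ -z $term ]]; then
    printf '%s\n' "$rest"
    return
  fi
  while [[ $rest == *"$term"* ]]; do
    out+="${rest%%"$term"*}${wrapped}"   # text before the first occurrence, then the wrapped term
    rest=${rest#*"$term"}                # continue after that occurrence
  done
  printf '%s\n' "${out}${rest}"
}
```

For example, `protect_term "Deploy with Contoso Cloud today" "Contoso Cloud"` yields a sentence in which only the surrounding words are translated.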

Dynamic dictionary

If you already know the translation you want to apply to a word, a phrase or a sentence, you can supply it as markup within the request.
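The markup uses the `mstrans:dictionary` element with a `translation` attribute, as in `<mstrans:dictionary translation="...">phrase</mstrans:dictionary>`. A minimal sketch that builds this markup for one phrase; the helper name `dictionary_markup` and the example phrases are our own.

```shell
#!/usr/bin/env bash
# Wrap a phrase in dynamic-dictionary markup so Translator uses the supplied translation.
#   $1: phrase as it appears in the source text
#   $2: translation to force for that phrase
dictionary_markup() {
  printf '<mstrans:dictionary translation="%s">%s</mstrans:dictionary>' "$2" "$1"
}
```

Usage: `text="The word $(dictionary_markup wordomatic wordomatic) is a dictionary entry."`, then send `$text` as the text of the request.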

I need more customization

If the above features are not sufficient, you can start with Custom Translator to train a specific model based on previously translated documents in your domain.

What is Custom Translator?

Custom Translator is a feature of the Microsoft Translator service that enables enterprises, app developers, and language service providers to build customized neural machine translation (NMT) systems. These customized translation systems integrate seamlessly into existing applications, workflows, and websites.

Custom Translator supports more than three dozen languages, and maps directly to the languages available for NMT. For a complete list, see Microsoft Translator Languages.

Highlights

Collaborate with others

Collaborate with your team by sharing your work with different people.

Read more

Zero-downtime model deployments

Deploy a new version of the model without any downtime, using the built-in deployment slots.

Read more

Use a dictionary to build your models

If you don't have a training data set, you can train a model with dictionary data only.

Read more

Build systems that know your business terminology

Customize and build translation systems, using parallel documents, that understand the terminology used in your own business and industry.

Read more

Get started

To get started, set up your Translator resource (see the top of this blog) and go to the Custom Translator portal.

Once you're in, set up your workspace by selecting a region, entering your Translator subscription key, and giving the workspace a name.

A complete quick start can be found on Microsoft Docs.
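Once a custom model is deployed, you call it through the same Text Translation endpoint by adding the `category` query parameter with the category ID that the Custom Translator portal shows for your project. A minimal sketch of such a URL; `build_custom_url` and the category value in the example are placeholders.

```shell
#!/usr/bin/env bash
# Build a Translate URL that routes the request to a deployed custom model.
#   $1: source language   $2: target language   $3: category ID from the Custom Translator portal
build_custom_url() {
  printf 'https://api.cognitive.microsofttranslator.com/translate?api-version=3.0&from=%s&to=%s&category=%s\n' \
    "$1" "$2" "$3"
}
```

For example, `build_custom_url en nl my-category-id`. Requests without `category` (or with the default value `general`) keep using the standard model.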

Best practices

We will close off the blog with a few best practices and tips on how to get the best model out of Custom Translator.

Training material

| What goes in | What it does | Rules to follow |
|---|---|---|
| Tuning documents | Train the NMT parameters. | Be strict. Compose them to be optimally representative of what you are going to translate in the future. |
| Test documents | Calculate the BLEU score, just for you. | |
| Phrase dictionary | Forces the given translation with a probability of 1. | Be restrictive. Case-sensitive and safe to use only for compound nouns and named entities. Better not to use it and let the system learn. |
| Sentence dictionary | Forces the given translation with a probability of 1. | Case-insensitive and good for common, short in-domain sentences. |
| Bilingual training documents | Teach the system how to translate. | Be liberal. Any in-domain human translation is better than MT. Add and remove documents as you go and try to improve the score. |

BLEU Score

BLEU is the industry-standard method for evaluating the “precision” or accuracy of a translation model. Though other methods of evaluation exist, Microsoft Translator relies on the BLEU method to report accuracy to project owners.

Read more
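As a reminder of what the score measures, here is the standard BLEU definition. We don't know the exact variant (tokenization, smoothing) Custom Translator uses, so treat this as background rather than its implementation. Here $p_n$ is the clipped (modified) n-gram precision of the candidate against the reference, $w_n$ are weights (usually $N = 4$ and $w_n = 1/4$), $c$ is the candidate length and $r$ the reference length:

$$
\mathrm{BLEU} = \mathrm{BP}\cdot\exp\Big(\sum_{n=1}^{N} w_n \log p_n\Big),
\qquad
\mathrm{BP} =
\begin{cases}
1 & \text{if } c > r,\\
e^{\,1 - r/c} & \text{if } c \le r.
\end{cases}
$$

For example, if all four precisions are 0.5 and the candidate is longer than the reference, then $\mathrm{BP} = 1$ and $\mathrm{BLEU} = \exp(4 \cdot \tfrac{1}{4} \log 0.5) = 0.5$, often reported as 50 on a 0 to 100 scale.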

| Suitable | Unsuitable |
|---|---|
| Prose | Dictionaries/glossaries (exceptions) |
| 5-18 words in length | Social media (target side) |
| Correct spelling (target side) | Poems/literature |
| In domain (be liberal) | Fragments (OCR, ASR) |
| > 20,000 sentences (~200K words) | Subtitles (exceptions) |
| Monolingual material: Industry | |
| TAUS, LDC, Consortia | |
| Start large, then specialize by removing | |

More questions and answers

| Question | Answer |
|---|---|
| What constitutes a domain? | Documents of similar terminology, style and register. |
| Should I make a big domain with everything, or smaller domains per product or project? | Depends. |
| On what? | I don’t know. Try it out. |
| What do you recommend? | Throw all training data together, have specific tuning and test sets, and observe the delta between training results. If the delta is < 1 BLEU point, it is not worth maintaining multiple systems. |
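The recommendation boils down to a simple threshold. A sketch, assuming you have the BLEU score of the combined system and of a specialized system measured on the same test set; the function name `worth_separate_system` is hypothetical.

```shell
#!/usr/bin/env bash
# Succeed (exit status 0) when the specialized system beats the combined system
# by at least 1 BLEU point, i.e. maintaining a separate system may be worth it.
#   $1: BLEU of the combined system   $2: BLEU of the specialized system
worth_separate_system() {
  awk -v combined="$1" -v specialized="$2" \
    'BEGIN { exit !((specialized - combined) >= 1) }'
}
```

For example, `worth_separate_system 40.2 40.9` fails (a 0.7-point gain), so one combined system is enough.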

Auto or Manual Selection of Tuning and Test

Goal: Tuning and test sentences are optimally representative of what you are going to translate in the future.

| Auto selection | Manual selection |
|---|---|
| + Convenient. | + Fine-tune to your future needs. |
| + Good if you know that your training data is representative of what you are going to translate. | + Take more freedom in composing your training data. |
| + Easy to redo when you grow or shrink the domain. | + More data, better domain coverage. |
| + Remains static over repeated training runs, until you request re-generation. | + Remains static over repeated training runs. |

When you submit documents to be used for training a custom system, the documents undergo a series of processing and filtering steps to prepare them for training. These steps are explained below. Knowing how the filtering works may help you understand the sentence count displayed in Custom Translator, as well as the steps you can take yourself to prepare the documents for training with Custom Translator.

Sentence alignment

If your document isn't in XLIFF, TMX, or ALIGN format, Custom Translator aligns the sentences of your source and target documents to each other, sentence by sentence. Translator doesn't perform document alignment – it follows your naming of the documents to find the matching document of the other language. Within the document, Custom Translator tries to find the corresponding sentence in the other language. It uses document markup like embedded HTML tags to help with the alignment.

If you see a large discrepancy between the number of sentences in the source and target side documents, your document may not have been parallel in the first place, or for other reasons couldn't be aligned. The document pairs with a large difference (>10%) of sentences on each side warrant a second look to make sure they're indeed parallel. Custom Translator shows a warning next to the document if the sentence count differs suspiciously.
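To spot such pairs before uploading, you can compare the sentence counts yourself. A minimal sketch, assuming plain-text documents with one sentence per line (an assumption; Custom Translator does its own sentence splitting) and measuring the difference relative to the larger side; `counts_differ` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Succeed (exit status 0) when two documents' sentence counts differ by more
# than 10% of the larger count. Assumes one sentence per line.
#   $1: source-language document   $2: target-language document
counts_differ() {
  local ns nt
  ns=$(( $(wc -l < "$1") ))
  nt=$(( $(wc -l < "$2") ))
  awk -v a="$ns" -v b="$nt" 'BEGIN {
    max  = (a > b) ? a : b
    diff = (a > b) ? a - b : b - a
    exit !(max > 0 && diff / max > 0.10)
  }'
}
```

Usage: `counts_differ en/manual.txt nl/manual.txt && echo "check this pair"` (hypothetical file names).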

Deduplication

Custom Translator removes the sentences that are present in test and tuning documents from training data. The removal happens dynamically inside of the training run, not in the data processing step. Custom Translator also removes duplicate sentences and reports the sentence count to you in the project overview before such removal.
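Custom Translator performs this step itself; the sketch below only mimics it so you can estimate the effect on your own data. It assumes hypothetical tab-separated files with one `source<TAB>target` pair per line, and treats a training pair as overlapping with the tuning/test data when its source sentence matches (our assumption about the matching rule).

```shell
#!/usr/bin/env bash
# Mimic the deduplication step on tab-separated "source<TAB>target" files.
#   $1: tuning/test pairs (held out)   $2: training pairs
# Drops training pairs whose source sentence occurs in $1, then drops exact
# duplicate pairs, keeping the first occurrence.
dedup_training() {
  awk -F'\t' '
    FILENAME == ARGV[1] { held[$1] = 1; next }
    !($1 in held) && !seen[$0]++
  ' "$1" "$2"
}
```

Comparing `wc -l` before and after gives a rough idea of how many sentences the removal will cost.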

Length filter

- Remove sentences with only one word on either side.

- Remove sentences with more than 100 words on either side. Chinese, Japanese, Korean are exempt.

- Remove sentences with fewer than 3 characters. Chinese, Japanese, Korean are exempt.

- Remove sentences with more than 2000 characters for Chinese, Japanese, Korean.

- Remove sentences with less than 1% alpha characters.

- Remove dictionary entries containing more than 50 words.
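The rules for languages other than Chinese, Japanese and Korean can be approximated with awk. A minimal sketch on tab-separated `source<TAB>target` pairs; it counts words as whitespace-separated tokens and applies the 1% rule to each side separately, which are our interpretations, and it leaves out the dictionary rule. Character counts follow your awk implementation and locale. The function name `length_filter` is hypothetical.

```shell
#!/usr/bin/env bash
# Approximate the length filter for non-CJK language pairs.
# Input: "source<TAB>target" lines on stdin; prints only the pairs that pass.
length_filter() {
  awk -F'\t' '
    # Return 1 if one side of a pair passes all length rules, else 0.
    function passes(s,    n, words, alpha) {
      n = split(s, words, " ")               # whitespace-separated words
      if (n <= 1 || n > 100) return 0        # one word, or more than 100 words
      if (length(s) < 3) return 0            # fewer than 3 characters
      alpha = gsub(/[[:alpha:]]/, "&", s)    # count alphabetic characters
      return alpha / length(s) >= 0.01       # at least 1% alphabetic
    }
    NF == 2 && passes($1) && passes($2)
  '
}
```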

White space

Replace any sequence of white-space characters including tabs and CR/LF sequences with a single space character.

Remove leading or trailing space in the sentence.
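On line-based text (one sentence per line), the same normalization is a short sed script. A sketch, assuming GNU or BSD sed; `normalize_whitespace` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Collapse every run of whitespace (spaces, tabs, CR) into one space,
# then strip the leading and trailing space of each line.
normalize_whitespace() {
  sed -E 's/[[:space:]]+/ /g; s/^ //; s/ $//'
}
```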

Sentence end punctuation

Replace multiple sentence-end punctuation characters with a single instance.

Japanese character normalization

Convert full width letters and digits to half-width characters.

Unescaped XML tags

Filtering transforms unescaped tags into escaped tags:

< becomes &lt;

> becomes &gt;

& becomes &amp;
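A minimal sed equivalent, handy for checking your data before upload (the function name `escape_xml` is hypothetical). `&` is replaced first so the ampersands introduced by `&lt;` and `&gt;` are not escaped twice; unlike a careful XML-aware filter, this sketch would also re-escape entities that are already escaped, such as `&amp;`.

```shell
#!/usr/bin/env bash
# Escape the three characters the filter treats as unescaped markup.
# In a sed replacement, \& is a literal ampersand (plain & means "matched text").
escape_xml() {
  sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}
```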

Invalid characters

Custom Translator removes sentences that contain Unicode character U+FFFD. The character U+FFFD indicates a failed encoding conversion.
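To find and drop such lines yourself before uploading, you can filter on the UTF-8 byte sequence of U+FFFD (EF BF BD). A sketch for bash; `drop_replacement_char` is a hypothetical name. Note that grep exits with status 1 when every line is filtered out.

```shell
#!/usr/bin/env bash
# Drop every line that contains U+FFFD (UTF-8 bytes EF BF BD).
# LC_ALL=C makes grep compare raw bytes regardless of the current locale.
drop_replacement_char() {
  LC_ALL=C grep -v -F $'\xef\xbf\xbd'
}
```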

Before uploading data

Remove sentences with invalid encoding.

Remove Unicode control characters.

Bash script example:

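#!/bin/bash
# Recursively remove escaped Unicode characters, i.e. hexadecimal character
# references such as &#x1F; or &#xF0; (ð), from every file under the current
# directory. Uses GNU sed syntax (sed -i) and skips the .git directory.
find . -type f ! -path './.git/*' -exec sed -i 's/&#x[[:xdigit:]]\{1,6\};//g' {} +

# Validate the replacement: count remaining references per file (no output means none are left).
grep -r --exclude-dir=.git '&#x[[:xdigit:]]\{1,6\};' . | awk -F: '{print $1}' | sort | uniq -c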
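The script above only removes escaped references. Raw control characters stored as bytes can be deleted with tr. A minimal sketch that removes the C0 control characters except tab, line feed and carriage return, plus DEL; C1 controls (U+0080 to U+009F) are multi-byte in UTF-8 and are not handled here. `strip_control_chars` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Delete C0 control characters except tab (\011), LF (\012) and CR (\015), plus DEL (\177).
strip_control_chars() {
  LC_ALL=C tr -d '\000-\010\013\014\016-\037\177'
}
```

Usage: `strip_control_chars < corpus.txt > corpus.clean.txt` (hypothetical file names).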

