Azure AI services Blog

Build predictive maintenance, conversational user interface and powerful analytics at the edge


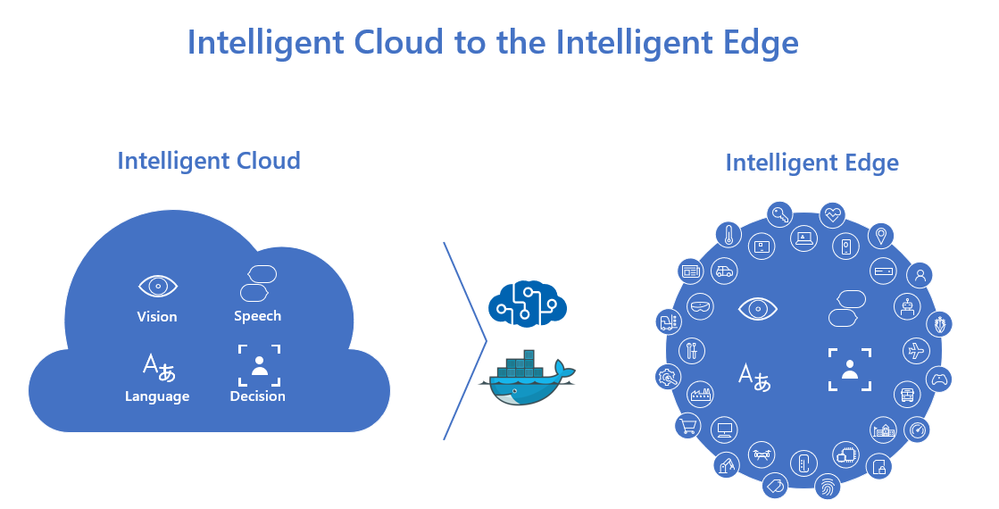

With Azure Cognitive Services in containers, businesses across industries have unlocked new productivity gains and insights. Organizations in fields ranging from manufacturing to legal to financial services have transformed their processes and customer experiences as a result. Azure is the only cloud provider that gives customers full flexibility to run AI on their own terms (on-premises, in the cloud, or at the edge).

Building, training and running models generally requires data science skills, but Cognitive Services brings AI within reach of every developer with pre-built and customizable AI that can be embedded into apps using the programming language of your choice. With container support, we took this a step further, enabling developers to deploy hybrid solutions. This addresses current challenges around data control, privacy, and network-intensive workloads.

The goal of this post is to show several examples of how enterprise applications use containers to meet AI needs at the edge, and how customers built solutions using Cognitive Services containers.

Use case #1 – Anomaly detection in factory equipment using a predictive maintenance solution

TIBCO, a strategic partner of Microsoft, offers an Anomaly Detection solution that incorporates two containerized Cognitive Services.

TIBCO unlocks the potential of data assets for making faster, smarter decisions with its Connected Intelligence Platform. The platform allows you to seamlessly connect to any application or data source; unify data for greater access, trust, and control; and confidently predict outcomes at scale in real time.

The TIBCO anomaly detection solution includes Azure Cognitive Services container deployment with text mining and root cause analysis. Anomaly detection and analysis provide value across nearly every industry, including energy, financial fraud and risk, algorithmic insurance, connected vehicles, healthcare and insurance claims, and manufacturing fault detection and yield optimization.

This post focuses on manufacturing, specifically identifying anomalies for asset management. The example uses machine learning techniques to detect anomalies, understand root causes from related text data, and alert case managers when sensor readings deviate from expected patterns. This enables operators to carry out condition-based maintenance before equipment fails and to prevent costly manufacturing shutdowns.

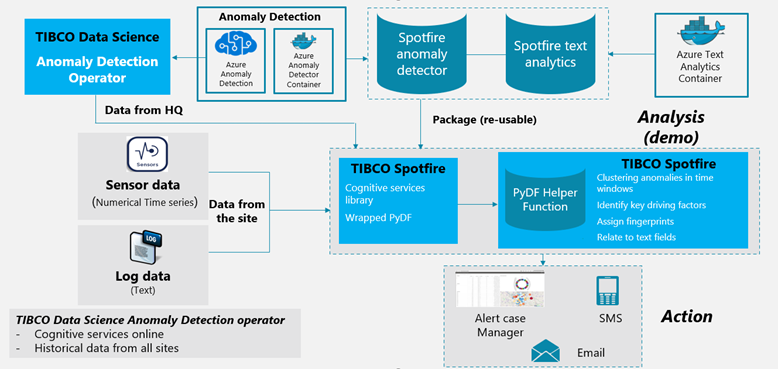

Reference Architecture:

Solution overview:

Manufacturing, energy, mining and power plant customers use TIBCO Data Science to detect anomalies at scale in historical data from various facilities. The TIBCO Data Science workflow produces a portable analytics model object that the maintenance engineer can either call remotely or take to the engineering site and invoke locally with TIBCO Spotfire.

TIBCO Spotfire calls the containerized Azure Cognitive Services through a Spotfire data function that uses a Python API. As the maintenance engineer detects anomalies on site, Spotfire's brush-linked visual analytics and data science tooling run a root cause analysis: after the anomalies are detected, the root cause factors for a specific time window are identified. These results are combined with key phrases extracted by the containerized Text Analytics service to determine the recommended actions.

Let’s see how this solution is built, in a few steps:

Phase 1 – Anomaly detection on historical data: The TIBCO Data Science platform is used to detect anomalies across all the manufacturing sites.

What you see in the image below is power plant data. The workflow consists of multiple steps, including data pre-processing, transformation of the time-series data, filtering, and finally calling the Azure services through a TIBCO Data Science operator designed to invoke them.
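
As a rough illustration of the pre-processing and transformation steps, the Python sketch below averages raw sensor readings into one point per minute and wraps them in the request body that, as we understand the Anomaly Detector v1.0 API, the entire-series detection call (`/anomalydetector/v1.0/timeseries/entire/detect` on a local container) expects: a `series` of `timestamp`/`value` points plus a `granularity`. The helper names and the `sensitivity` value are illustrative assumptions, not part of the TIBCO operator.

```python
from collections import defaultdict
from datetime import datetime, timezone
from statistics import mean


def to_minutely_series(readings):
    """Average raw (timestamp, value) readings into one point per minute.

    `readings` is an iterable of (datetime, float) pairs; timestamps are
    assumed to be timezone-aware UTC datetimes.
    """
    buckets = defaultdict(list)
    for ts, value in readings:
        if value is None:  # pre-processing: drop missing sensor values
            continue
        minute = ts.replace(second=0, microsecond=0)
        buckets[minute].append(value)
    # Emit points in time order with ISO 8601 UTC timestamps.
    return [
        {"timestamp": minute.strftime("%Y-%m-%dT%H:%M:%SZ"), "value": mean(vals)}
        for minute, vals in sorted(buckets.items())
    ]


def build_detect_request(readings, sensitivity=95):
    """Build an entire-series detection request body (illustrative sensitivity)."""
    return {
        "series": to_minutely_series(readings),
        "granularity": "minutely",
        "sensitivity": sensitivity,
    }
```

As far as we know, the service also requires a minimum number of points (12) before it runs detection, so filtering should not thin the series too aggressively.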

Phase 2 – Detecting anomalies at a remote site: Once the maintenance engineer is at a remote location, which may not be connected to the internet, the first step is to run anomaly detection in the Spotfire equipment maintenance dashboard by selecting different features, as shown below.

The user selects a response metric and granularity, and anomalies are detected based on historical data collected at that site. As shown in the image below, the upper section shows the original data readings along with the expected values provided by the containerized service, and the bottom section shows the difference between the original and expected values over time. The red markers are anomalies detected by the Anomaly Detection routine.
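
To make the relationship between the two charts concrete, here is a simplified Python sketch. It assumes the service returned per-point expected values and upper/lower margins, and it treats the margins as fixed tolerances: a point is flagged when it falls outside `[expected - lower, expected + upper]`. In practice you would read the service's own anomaly flags; the function and argument names here are hypothetical.

```python
def flag_anomalies(values, expected, upper_margins, lower_margins):
    """Return (deltas, flags) for a series.

    deltas: original minus expected value (the bottom chart).
    flags:  True where the reading falls outside the expected band
            (the red markers), using the margins as plain tolerances.
    """
    deltas = [v - e for v, e in zip(values, expected)]
    flags = [
        v > e + up or v < e - lo
        for v, e, up, lo in zip(values, expected, upper_margins, lower_margins)
    ]
    return deltas, flags
```

The indices of the red markers are then simply `[i for i, f in enumerate(flags) if f]`.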

Phase 3 – Performing root cause analysis: The site engineer investigates the anomalies in a chosen time window to perform a root cause analysis. In the image below (bottom left), the key driving factors show which factors contribute to the anomalies in production per minute for the time window the engineer selected in the TIBCO Spotfire analytics application.
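
The post does not describe how Spotfire computes its key driving factors. Purely as a stand-in to show the idea, the Python sketch below ranks candidate sensor series by the absolute Pearson correlation between each factor and the deviation from expected values inside the selected window. The technique and all names are our assumptions, not TIBCO's method.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a constant series cannot explain any variation
    return cov / (sx * sy)


def rank_driving_factors(deviation, factors, start, end):
    """Rank factors by |correlation| with the deviation in window [start, end).

    deviation: per-minute original-minus-expected values.
    factors:   dict mapping factor name -> per-minute readings (same length).
    """
    window_dev = deviation[start:end]
    scores = {
        name: abs(pearson(series[start:end], window_dev))
        for name, series in factors.items()
    }
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
```

Correlation only hints at association, so a ranking like this is a starting point for the engineer's investigation rather than a causal conclusion.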

Next, the site engineer digs deeper to understand which maintenance actions were performed before these anomalies occurred, getting insights from log data through key phrase extraction with the Text Analytics container, as shown in the top section of the image below (Get Insights).
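
A minimal Python sketch of sending maintenance log lines to a locally running Text Analytics key phrase container is shown below. The request and response shapes (`documents` with `id`/`language`/`text`, and `keyPhrases` per document) follow the Text Analytics v3 REST API as we understand it; the URL, port and API version are assumptions to adjust to your deployment (the container's Swagger page, typically at `http://localhost:5000/swagger`, lists the exact routes).

```python
import json
import urllib.request

# Assumed local container endpoint; adjust host, port and API version as needed.
KEY_PHRASES_URL = "http://localhost:5000/text/analytics/v3.1/keyPhrases"


def build_key_phrase_request(log_entries, language="en"):
    """Wrap maintenance log lines as Text Analytics documents with ids 1..n."""
    return {
        "documents": [
            {"id": str(i), "language": language, "text": text}
            for i, text in enumerate(log_entries, start=1)
        ]
    }


def parse_key_phrases(response_body):
    """Map document id -> key phrases; failed documents appear only under "errors"."""
    return {doc["id"]: doc["keyPhrases"] for doc in response_body.get("documents", [])}


def extract_key_phrases(log_entries, url=KEY_PHRASES_URL, timeout=10):
    """POST the logs to the container and return the parsed key phrases.

    Assumption: the local container bills through the settings given at
    startup, so no subscription key header is sent with each request.
    """
    payload = json.dumps(build_key_phrase_request(log_entries)).encode("utf-8")
    req = urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return parse_key_phrases(json.load(resp))
```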

From here, TIBCO Spotfire generates a recommendation for next steps. In the example shown, the ‘BaroPressure’ sensor was restarted 5 times but recommended for replacement only once, so the recommendation is to replace the equipment to avoid further shutdowns.
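
The reasoning in this example (repeated restarts outweigh a single replacement suggestion) can be expressed as a simple rule. The Python sketch below is a hypothetical illustration only: the action labels, the `restart_threshold` parameter and the "replace"/"monitor" outcomes are our assumptions, not Spotfire's actual decision logic.

```python
from collections import Counter


def recommend(actions_by_sensor, restart_threshold=3):
    """Hypothetical rule: recommend replacement when a sensor keeps being restarted.

    actions_by_sensor maps a sensor name to the maintenance actions
    (e.g. "restart", "replace") found in its logs via key phrase extraction.
    """
    recommendations = {}
    for sensor, actions in actions_by_sensor.items():
        counts = Counter(actions)  # missing actions count as 0
        if counts["restart"] >= restart_threshold and counts["restart"] > counts["replace"]:
            recommendations[sensor] = "replace"
        else:
            recommendations[sensor] = "monitor"
    return recommendations
```

For the example above, five restarts versus one replacement suggestion yields "replace" for ‘BaroPressure’.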

In summary, TIBCO Data Science first detected anomalies in historical power plant data across all sites, producing a portable analytics model object that the maintenance engineer can take to the remote site. At the site, the recommended actions were derived by combining containerized Azure Cognitive Services with the decision intelligence embedded in TIBCO Spotfire's root cause analysis.

This use case is just one illustrative example of the many ways customers use TIBCO’s analytics and data science capabilities to solve some of the most complex business problems, such as pricing and promotion optimization, supply chain analytics, fraud detection, yield enhancement, and real-time process control.

To learn more about TIBCO’s anomaly detection solution, visit Anomaly Detection at TIBCO.

Use case #2 – Organizing unstructured data and surfacing insights in a Power BI dashboard

Wilson Allen is a systems integrator with deep analytics experience serving the legal industry. Given the confidentiality of the work involved, the data must stay local. Law firms use Wilson Allen’s Proforma Tracker to manage their pre-bill processes. Wilson Allen uses Cognitive Services to recognize text, optimize legal templates and process them for billing, translate documents and ensure they comply with regulations, and enable handwritten edits that are captured digitally.

Architecturally, Wilson Allen uses containerized Cognitive Services to bring form-based data into the firm’s data ecosystem and to create metadata from text-based data. ML techniques establish patterns and causal relationships, and everything is rendered in a common data model, without a traditional data warehouse, and visualized through Power BI reporting.

They also use Form Recognizer to extract data from historical sets of HR PDF forms (intake, change, termination), building a database for master data and analytics. Additionally, they use Sentiment Analysis to combine structured and unstructured client feedback data, which is then surfaced in Power BI.
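
The "marrying" step can be pictured as a join on a shared document id. The Python sketch below assumes the unstructured feedback was sent to the Sentiment Analysis container with the same ids as the structured records, and that the response follows the Text Analytics v3 shape (`sentiment` plus `confidenceScores` per document). Column names are hypothetical, and exporting a flat CSV is just one simple way to feed Power BI, not necessarily Wilson Allen's pipeline.

```python
import csv
import io


def merge_feedback(records, sentiment_docs):
    """Join structured feedback rows with Sentiment Analysis results by id.

    records:        list of dicts with at least an "id" key (structured data).
    sentiment_docs: the "documents" array of a v3 sentiment response.
    Rows without a sentiment result keep None in the added columns.
    """
    by_id = {doc["id"]: doc for doc in sentiment_docs}
    merged = []
    for row in records:
        doc = by_id.get(row["id"])
        merged.append({
            **row,
            "sentiment": doc["sentiment"] if doc else None,
            "positive_score": doc["confidenceScores"]["positive"] if doc else None,
        })
    return merged


def to_csv(rows):
    """Serialize merged rows to CSV text (None becomes an empty cell)."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()
```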

Containers used: Form Recognizer, Text Analytics (Sentiment Analysis)

Use case #3 – Building conversational user interfaces that help retailers manage inventory better, help bankers improve the support experience, and give healthcare customers better guidance in managing appointments and tracking efficiency

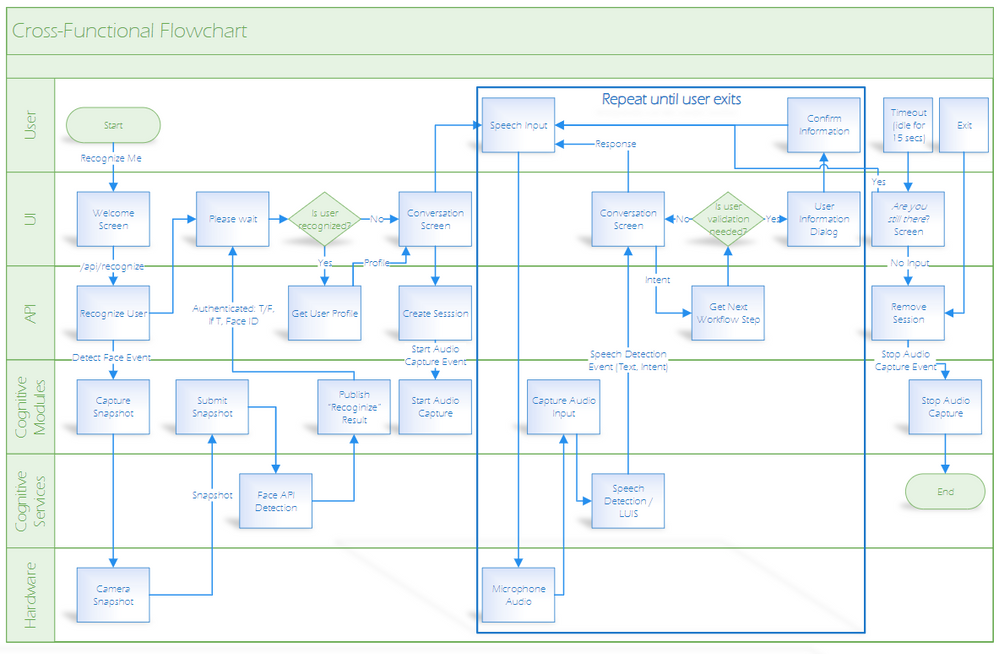

Insight’s Kiosk Framework uses Cognitive Services containers to create a functional customer experience with minimal connectivity requirements. Using the Azure IoT Edge runtime and IoT Hub, the framework can be managed remotely and securely, allowing the customer to change the “personality” of the kiosk remotely based on business requirements and constraints around image capture, responses and content. It also lets the customer deploy and manage the framework at scale across locations.
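
One common way to push remote configuration through IoT Hub is via desired properties on a device or module twin. Assuming the kiosk's "personality" lives in such properties (the post does not say how Insight models it), the Python sketch below applies a desired-properties patch with JSON-merge-patch semantics: nested objects merge, a `None` (JSON null) removes a key, and twin metadata keys starting with `$` such as `$version` are skipped. The property names in the usage example are hypothetical.

```python
import copy


def apply_desired_patch(current, patch):
    """Merge a twin-style desired-properties patch into the kiosk config.

    Returns a new dict; `current` is left unmodified.
    """
    result = copy.deepcopy(current)
    for key, value in patch.items():
        if key.startswith("$"):  # twin metadata such as "$version"
            continue
        if value is None:  # null in a patch deletes the property
            result.pop(key, None)
        elif isinstance(value, dict):
            base = result.get(key)
            result[key] = apply_desired_patch(base if isinstance(base, dict) else {}, value)
        else:
            result[key] = copy.deepcopy(value)
    return result
```

For example, patching `{"personality": {"greeting": "Welcome!", "voice": "en-US-Aria"}, "captureImages": True}` with `{"$version": 7, "personality": {"greeting": "Hi, how can I help?", "voice": None}, "captureImages": False}` changes the greeting, drops the voice setting and disables image capture.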

Containers used: Face Recognition, Speech to Text, Language Understanding

Get started: learn more and deploy AI at the edge with Cognitive Services

The guide to deploying your first container is about a 2-minute read. In short, you create a resource in the Azure portal, download the container image, and run the container with the required environment variables. Here's a guide to help you get started running containers.
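
The "run the container" step typically looks like a `docker run` command that passes the required `Eula`, `Billing` (your resource's endpoint) and `ApiKey` settings. The Python sketch below builds that command for the Text Analytics key phrase image; the memory and CPU limits are illustrative placeholders (check the recommended values for your container), and the helper names are hypothetical.

```python
import shlex
import subprocess

# Key phrase extraction image; other Cognitive Services use other images.
IMAGE = "mcr.microsoft.com/azure-cognitive-services/textanalytics/keyphrase"


def docker_run_args(billing_endpoint, api_key, port=5000, memory="4g", cpus=1):
    """Build a `docker run` command; the container listens on port 5000 inside."""
    return [
        "docker", "run", "--rm",
        "-p", f"{port}:5000",                      # host port -> container port
        "--memory", memory, "--cpus", str(cpus),   # illustrative resource limits
        IMAGE,
        "Eula=accept",                             # required settings
        f"Billing={billing_endpoint}",
        f"ApiKey={api_key}",
    ]


def run_container(billing_endpoint, api_key, **kwargs):
    """Print and run the command (requires Docker on the host)."""
    args = docker_run_args(billing_endpoint, api_key, **kwargs)
    print(shlex.join(args))
    subprocess.run(args, check=True)
```

A typical call would read the endpoint and key from your own environment, for example `run_container(os.environ["COGNITIVE_ENDPOINT"], os.environ["COGNITIVE_KEY"])`, where both variable names are placeholders.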

Best Practices

Let’s look at some best practices that might be helpful for you to run containers:

Container security

Security should be a primary focus whenever you're developing applications, and it is a key measure of success. When you're architecting a software solution that includes Cognitive Services containers, it's vital to understand the limitations and capabilities available to you. For more information about network security, see Configure Azure Cognitive Services virtual networks.

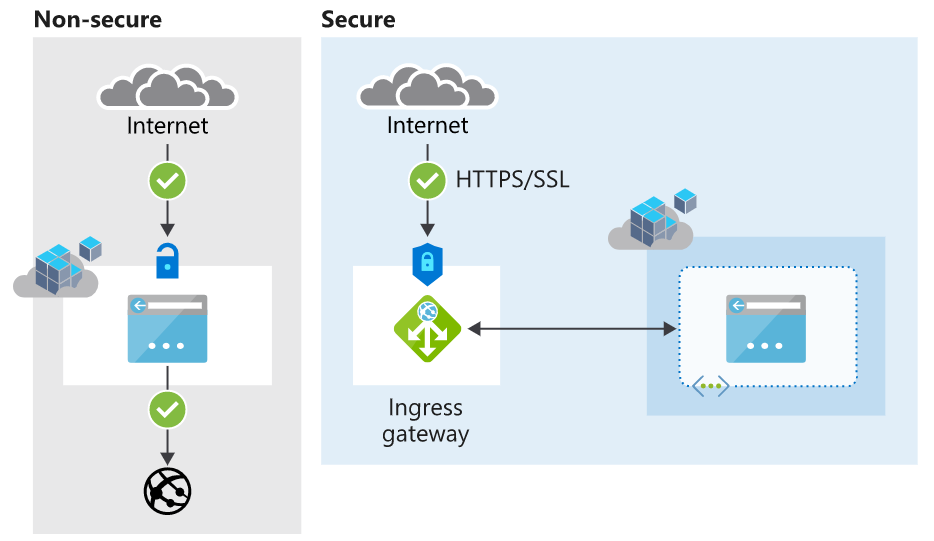

The diagram below illustrates the default and non-secure approach:

As an alternative, secure approach, consumers of Cognitive Services containers can augment a container with a front-facing component, keeping the container endpoint private. Consider a scenario where Istio is used as an ingress gateway. Istio supports HTTPS/TLS and client-certificate authentication. In this scenario, the Istio front end exposes access to the container, and clients must present a client certificate that has been allow-listed with Istio beforehand.
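
From the client's side, calling a container behind such a gateway means verifying the gateway's server certificate and presenting the allow-listed client certificate (on the Istio side this roughly corresponds to a Gateway configured with mutual TLS). The Python sketch below uses only the standard library; the file paths and URL are placeholders for your own CA bundle, client certificate and gateway address.

```python
import ssl
import urllib.request


def mtls_context(ca_file=None, client_cert=None, client_key=None):
    """Build a TLS context for calling a container through an mTLS gateway.

    The default context verifies the gateway's certificate and hostname;
    loading the client chain lets the gateway authenticate this caller.
    """
    ctx = ssl.create_default_context(cafile=ca_file)
    if client_cert:
        ctx.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return ctx


def call_through_gateway(url, body, ctx, timeout=10):
    """POST a JSON body (bytes) to the gateway and return the raw response."""
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(req, context=ctx, timeout=timeout) as resp:
        return resp.read()
```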

Nginx is another popular choice in the same category. Both Istio and Nginx can serve as this front-facing layer and offer additional features such as load balancing, routing, and rate control.

Container networking

Cognitive Services containers are required to submit metering information for billing purposes. If the network channels that the containers rely on are not allow-listed, the containers will not work.

Allow list Cognitive Services domains and ports

The host should allow list port 443 and the following domains:

- *.cognitive.microsoft.com

- *.cognitiveservices.azure.com
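
When auditing firewall or proxy rules, it can help to check hosts against this allow list and to test outbound reachability from the container host. The Python sketch below does both with the standard library; it encodes only the two domains and port listed above, and the helper names are hypothetical.

```python
import socket
from fnmatch import fnmatch

ALLOWED_DOMAINS = ["*.cognitive.microsoft.com", "*.cognitiveservices.azure.com"]
ALLOWED_PORT = 443


def is_allowed(host, port=ALLOWED_PORT):
    """True if host:port matches the allow list the containers need for metering."""
    host = host.lower().rstrip(".")  # normalize case and a trailing root dot
    return port == ALLOWED_PORT and any(fnmatch(host, p) for p in ALLOWED_DOMAINS)


def can_reach(host, port=ALLOWED_PORT, timeout=5.0):
    """Try an outbound TCP connection, e.g. to your resource's billing endpoint host."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Note that a successful TCP connection does not rule out deep packet inspection on the path, which is covered next.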

Disable deep packet inspection

Deep packet inspection (DPI) is a type of data processing that inspects in detail the data being sent over a computer network, and usually takes action by blocking, re-routing, or logging it accordingly.

Disable DPI on the secure channels that the Cognitive Services containers create to Microsoft servers. Failure to do so will prevent the container from functioning correctly.

Get started today by going to Azure Cognitive Services to build intelligent applications that span Azure and the edge. For more information, please refer to the container documentation page.
