New Product Features for Live Video Analytics on IoT Edge

We are excited to announce a public preview refresh of the Live Video Analytics (LVA) platform, adding a new set of capabilities that help you build complex workflows that capture and process video with real-time analytics. The platform lets you capture, record, and analyze live video and publish the results (video and/or video analytics) to Azure services in the cloud and/or on the edge.

The LVA platform is available as an Azure IoT Edge module via the Azure Marketplace. The module is referred to as Live Video Analytics on IoT Edge and is built to run on Linux x86-64 and arm64 edge devices at your business location.

This refresh release includes the following:

- Support for AI composability on live video – You can now use more than one HTTP extension processor and gRPC extension processor per LVA media graph, in sequential or parallel configurations.

- Support for passing a custom string to the inference server to carry out custom operations – LVA can now pass an optional string to the gRPC inference server, which takes actions as defined by the inference solution provider.

- Improved developer productivity – You can now build LVA graphs with a VS Code extension that provides a graphical representation of media graphs to help you get started quickly with the media graph concepts. We have also published C# and Python SDK samples that developers can use as guides to build advanced LVA solutions.

- Manage storage space on the edge – LVA now supports disk space management on the edge via one of its sink nodes.

- Improved observability for developers – You can now collect Live Video Analytics module metrics, such as the total number of active graphs per topology and the amount of dropped data, in the Prometheus format.

- Ability to filter output selection – LVA graphs now let you filter audio and video via configurable properties.

- Simplified frame rate management – Frame rate management is now available within the graph extension processor nodes themselves.
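
Frame rate management in an extension node comes down to deciding which incoming frames are forwarded to the inference server. The following C# sketch caps the forwarded rate using frame timestamps. The function `SampleFrames`, the 90 kHz clock, and the "at least 1/maxFps seconds since the last forwarded frame" policy are assumptions for illustration, not LVA's internal implementation or configuration schema.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: returns the timestamps of the frames that would be forwarded when the
// rate is capped at maxFramesPerSecond. Timestamps are integers in a clock of clockRate ticks
// per second (90 kHz is the usual RTP clock for video).
// A frame is forwarded only if at least 1/maxFramesPerSecond seconds have elapsed since the
// last forwarded frame, checked in integer arithmetic:
//   (t - last) / clockRate >= 1 / maxFps   <=>   (t - last) * maxFps >= clockRate
List<long> SampleFrames(IEnumerable<long> timestamps, long clockRate, long maxFramesPerSecond)
{
    if (clockRate <= 0) throw new ArgumentOutOfRangeException(nameof(clockRate));
    if (maxFramesPerSecond <= 0) throw new ArgumentOutOfRangeException(nameof(maxFramesPerSecond));

    var forwarded = new List<long>();
    long? last = null;
    foreach (long t in timestamps)
    {
        if (last is null || (t - last.Value) * maxFramesPerSecond >= clockRate)
        {
            forwarded.Add(t);
            last = t;
        }
    }
    return forwarded;
}

// Example: one second of 30 fps video (3,000 ticks per frame at 90 kHz) capped at 5 fps.
var oneSecondAt30Fps = new List<long>();
for (int i = 0; i < 30; i++) oneSecondAt30Fps.Add(i * 3000L);

var kept = SampleFrames(oneSecondAt30Fps, 90_000, 5);
Console.WriteLine($"{kept.Count} of {oneSecondAt30Fps.Count} frames forwarded"); // prints "5 of 30 frames forwarded"
```

Keeping the comparison in integer ticks avoids the rounding errors that comparing fractional seconds can introduce (in double precision, 0.6 - 0.4 is slightly less than 0.2, so a frame exactly on the boundary could be dropped).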

Details and Examples

We will now describe these exciting changes in more detail.

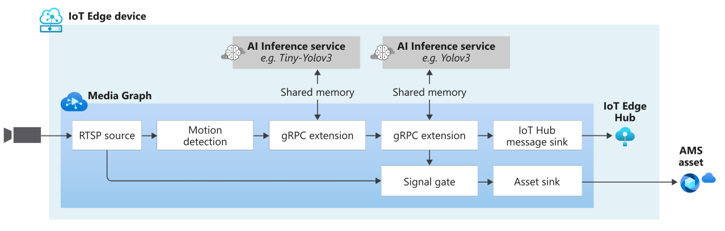

AI Composition

LVA now supports using more than one media graph extension processor within a topology. You can chain extension processors, with or without dependencies between them, in use cases where a cascade of AI inference servers from disparate sources is needed to serve the user's needs. For example, a heavy object detection model can run on sparse frames while a lighter tracker downstream operates on all frames and interpolates the results from the upstream detector. These extensions may operate at different resolutions, including a higher resolution on a downstream node.
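
The detector/tracker cascade can be illustrated with a deliberately simple stand-in for the tracker: linear interpolation of bounding boxes between the frames the detector actually processed. This is a hypothetical sketch; a real tracker would use motion or appearance cues, and the tuple-based box, frame numbers, and coordinates are illustrative assumptions rather than LVA's inference schema.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical bounding box in normalized image coordinates (Left, Top, Width, Height).
// Linearly interpolate between two detections; f = 0 gives a, f = 1 gives b.
(double L, double T, double W, double H) Lerp(
    (double L, double T, double W, double H) a,
    (double L, double T, double W, double H) b,
    double f) =>
    (a.L + (b.L - a.L) * f, a.T + (b.T - a.T) * f, a.W + (b.W - a.W) * f, a.H + (b.H - a.H) * f);

// "Heavy detector" output keyed by frame index: it only ran on frames 0 and 10.
var detections = new SortedDictionary<int, (double L, double T, double W, double H)>
{
    [0] = (0.10, 0.20, 0.30, 0.40),
    [10] = (0.30, 0.20, 0.30, 0.40),
};

// "Light tracker" stage (stand-in): produce a box for every frame between consecutive detections.
var perFrame = new Dictionary<int, (double L, double T, double W, double H)>();
int? prev = null;
foreach (var frame in detections.Keys)
{
    if (prev is int p)
        for (int i = p; i <= frame; i++)
            perFrame[i] = Lerp(detections[p], detections[frame], (double)(i - p) / (frame - p));
    prev = frame;
}

Console.WriteLine($"boxes produced for {perFrame.Count} frames from {detections.Count} detections");
```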

Below is an example of how you can use multiple AI models sequentially.

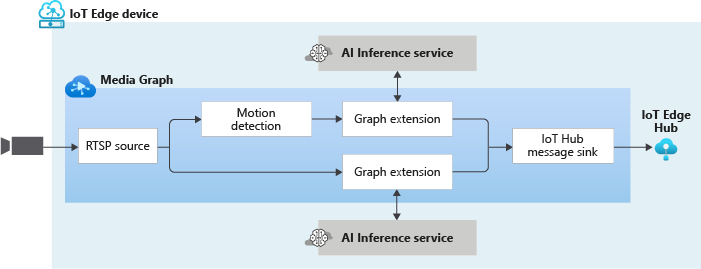

Similarly, you can run inference servers in parallel. This lets you send one video stream to multiple inference servers at once, reducing bandwidth requirements on the edge. The graph can look something like this:

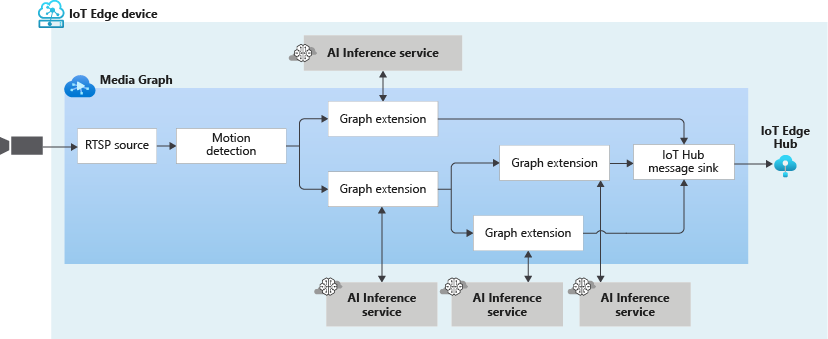

You can take it a step further and build more complex graphs that combine sequential and parallel extension processors.

You can use as many extension processors as you need, as long as your hardware can support them.
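
Whether extension processors run in sequence or in parallel follows from how each node names its inputs: a media graph is a directed acyclic graph. The C# sketch below models that wiring with hypothetical node names and uses Kahn's algorithm to derive a processing order in which every node follows its inputs, rejecting cycles and dangling references. It illustrates the graph structure only; it is not LVA's topology schema or validator.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Each node lists the nodes it takes input from (hypothetical names).
var inputs = new Dictionary<string, string[]>
{
    ["rtspSource"] = Array.Empty<string>(),
    ["detector"]   = new[] { "rtspSource" },          // heavy model, e.g. a gRPC extension
    ["tracker"]    = new[] { "detector" },            // sequential: consumes the detector's output
    ["classifier"] = new[] { "rtspSource" },          // parallel branch fed by the same source
    ["hubSink"]    = new[] { "tracker", "classifier" },
};

// Kahn's algorithm: returns the nodes in an order where every node comes after all of its
// inputs; throws if a node references an unknown node or the wiring contains a cycle.
List<string> TopologicalOrder(Dictionary<string, string[]> graph)
{
    var remaining = graph.ToDictionary(kv => kv.Key, kv => kv.Value.Length);
    var consumers = graph.Keys.ToDictionary(k => k, _ => new List<string>());
    foreach (var (node, ins) in graph)
        foreach (var upstream in ins)
        {
            if (!consumers.ContainsKey(upstream))
                throw new InvalidOperationException($"{node} references unknown node {upstream}");
            consumers[upstream].Add(node);
        }

    var ready = new Queue<string>(remaining.Where(kv => kv.Value == 0).Select(kv => kv.Key));
    var order = new List<string>();
    while (ready.Count > 0)
    {
        var node = ready.Dequeue();
        order.Add(node);
        foreach (var next in consumers[node])
            if (--remaining[next] == 0) ready.Enqueue(next);
    }
    if (order.Count != graph.Count)
        throw new InvalidOperationException("graph contains a cycle");
    return order;
}

Console.WriteLine(string.Join(" -> ", TopologicalOrder(inputs)));
```

Here `detector` and `tracker` form a sequential chain, while `classifier` is a parallel branch fed by the same source; `hubSink` waits for both.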

Passing data to your Inference Server

We have added a new property called extensionConfiguration to our gRPC data contract. It is an optional string that can carry any data to the inference server, and you, as the inference solution provider, define how the inference server uses that data.

One use case for this property is to simplify calling multiple AI models that you use with LVA. Let's say that you want to use two or more inference models with LVA. Our quickstarts currently show you how to deploy an extension named “lvaExtension”. Following those steps, you would develop and deploy two different modules, ensuring that both listen on different ports.

For example, one container named lvaExtension1 listens on port 44000, and another container named lvaExtension2 listens on port 44001. In your LVA topology, you would then instantiate two graphs with different inference URLs, as shown below, to use multiple AI models:

First instance: inference server URL = tcp://lvaExtension1:44000

Second instance: inference server URL = tcp://lvaExtension2:44001

Now, instead of configuring multiple network ports to connect to different AI models, you as the extension provider can wrap all your AI models in a single inference server. Your inference server then invokes AI models based on a string that is passed to it when LVA calls it. When you instantiate the graphs, assign a different string to the extensionConfiguration property of each instance. Each string should be one of the strings you defined in the inference server to invoke a model. During execution, LVA passes the string to the inference server, which then invokes the AI model of choice.
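
On the inference server side, this single-server approach amounts to a lookup from the extensionConfiguration string to a model. The following C# sketch shows that dispatch. The model keys, the lambdas standing in for real models, and the fallback to a default model are hypothetical choices for illustration, and the gRPC plumbing that would deliver the string and the frame is omitted.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model entry points wrapped by a single inference server.
// A real server would run actual models here; these lambdas just report what ran.
var models = new Dictionary<string, Func<byte[], string>>(StringComparer.OrdinalIgnoreCase)
{
    ["objectDetection"] = input => $"object detector ran on {input.Length} bytes",
    ["personCounting"]  = input => $"person counter ran on {input.Length} bytes",
};

// Route a frame to a model using the extensionConfiguration string set on the graph instance.
// Falling back to a default model when the string is empty is a choice of this sketch.
string Infer(string? extensionConfiguration, byte[] frameBytes)
{
    var key = string.IsNullOrEmpty(extensionConfiguration) ? "objectDetection" : extensionConfiguration;
    if (!models.TryGetValue(key, out var model))
        throw new ArgumentException($"No model registered for extensionConfiguration '{key}'");
    return model(frameBytes);
}

// Two graph instances share one server (and one port) but pass different strings.
var demoFrame = new byte[1024];
Console.WriteLine(Infer("objectDetection", demoFrame));
Console.WriteLine(Infer("personCounting", demoFrame));
```

Rejecting unknown strings, rather than silently using a default, surfaces a misconfigured graph instance immediately.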

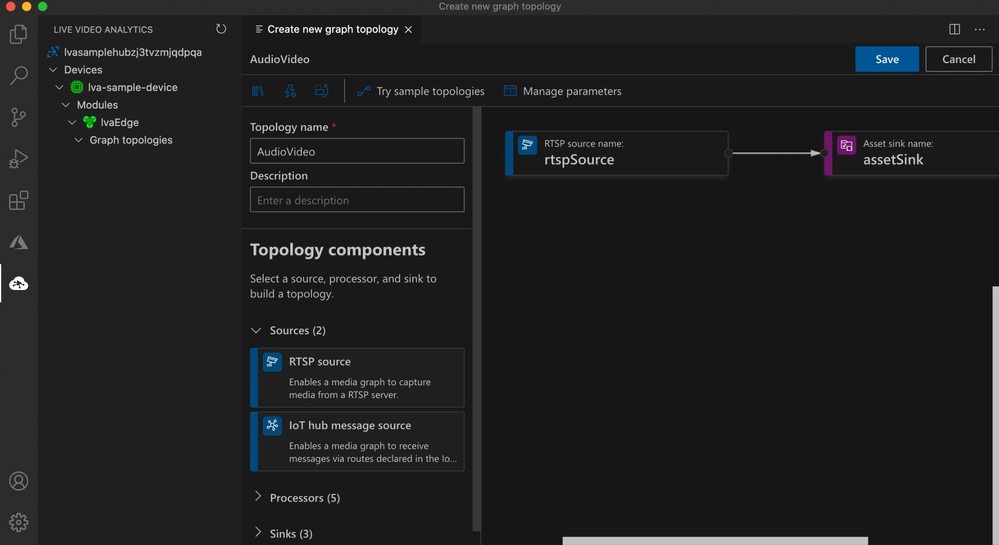

Improved Developer productivity

In December, we released a VS Code extension to help you manage LVA media graphs. The extension works with the LVA 2.0 module and lets you edit and manage media graphs through an easy-to-use graphical interface. It comes with a set of sample topologies for you to try out and get started.

Along with the VS Code extension, we have also released prerelease versions of the C# and Python SDK client libraries, which you can use to:

- Simplify interactions with the Microsoft Azure IoT SDKs

- Programmatically construct media graph topologies and instances as simply as:

```csharp
using System;
using System.IO;
using System.Threading.Tasks;
using Microsoft.Azure.Devices;             // ServiceClient (Azure IoT Hub service SDK)
using Microsoft.Extensions.Configuration;  // ConfigurationBuilder, SetBasePath, AddJsonFile

class Program
{
    // Shared state used by the sample's helper methods. BuildTopology, BuildInstance,
    // Orchestrate and PrintMessage are defined elsewhere in the sample and are not shown here.
    static string deviceId;
    static string moduleId;
    static ServiceClient edgeClient;

    static async Task Main(string[] args)
    {
        try
        {
            // Read app configuration from appsettings.json in the working directory
            IConfigurationRoot appSettings = new ConfigurationBuilder()
                .SetBasePath(Directory.GetCurrentDirectory())
                .AddJsonFile("appsettings.json")
                .Build();

            // Identify the IoT Edge device and the LVA module to talk to
            deviceId = appSettings["deviceId"];
            moduleId = appSettings["moduleId"];

            // Get the camera's RTSP parameters
            var rtspUrl = appSettings["rtspUrl"];
            var rtspUserName = appSettings["rtspUserName"];
            var rtspPassword = appSettings["rtspPassword"];

            // Create the IoT Hub service client that bridges your application to the LVA Edge module
            edgeClient = ServiceClient.CreateFromConnectionString(appSettings["IoThubConnectionString"]);

            // Build the GraphTopology
            var topology = BuildTopology();

            // Build a GraphInstance based on the selected topology and the camera parameters
            var instance = BuildInstance(topology, rtspUrl, rtspUserName, rtspPassword);

            // Run the orchestration steps (GraphTopologySet, GraphInstanceSet, GraphInstanceActivate,
            // GraphInstanceDeactivate, GraphInstanceDelete, GraphTopologyDelete)
            var steps = Orchestrate(topology, instance);
        }
        catch (Exception ex)
        {
            await PrintMessage(ex.ToString(), ConsoleColor.Red);
        }
    }
}
```

To help you get started with LVA using these client libraries, please check out our C# and Python GitHub repositories.
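
The orchestration comment in the sample lists six direct-method steps. The C# sketch below pairs each step with a JSON payload built with `System.Text.Json.Nodes`. The payload shape (an `@apiVersion` of `2.0`, a `name`, and, for GraphInstanceSet, a `properties` object with `topologyName` and `parameters`), the helper `BuildSteps`, and the topology, instance, and camera values are assumptions for illustration; check the LVA direct-method reference for the exact schema, and note that a real GraphTopologySet payload also carries the full topology.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json.Nodes;

// Hypothetical helper: pairs each orchestration step with an (assumed) JSON payload.
List<(string Method, JsonObject Payload)> BuildSteps(
    string topologyName, string instanceName, string rtspUrl, string rtspUserName, string rtspPassword)
{
    // ASSUMPTION: every payload carries "@apiVersion" and the name of the object it targets.
    JsonObject Named(string name) => new JsonObject { ["@apiVersion"] = "2.0", ["name"] = name };

    // ASSUMPTION: the instance references its topology by name and supplies parameter values.
    var instanceSet = Named(instanceName);
    instanceSet["properties"] = new JsonObject
    {
        ["topologyName"] = topologyName,
        ["parameters"] = new JsonArray(
            new JsonObject { ["name"] = "rtspUrl", ["value"] = rtspUrl },
            new JsonObject { ["name"] = "rtspUserName", ["value"] = rtspUserName },
            new JsonObject { ["name"] = "rtspPassword", ["value"] = rtspPassword }),
    };

    // Teardown mirrors setup in reverse: the instance is deactivated and deleted
    // before the topology it references is deleted.
    return new List<(string Method, JsonObject Payload)>
    {
        ("GraphTopologySet", Named(topologyName)),
        ("GraphInstanceSet", instanceSet),
        ("GraphInstanceActivate", Named(instanceName)),
        ("GraphInstanceDeactivate", Named(instanceName)),
        ("GraphInstanceDelete", Named(instanceName)),
        ("GraphTopologyDelete", Named(topologyName)),
    };
}

foreach (var (method, payload) in BuildSteps("MotionDetection", "Sample-Graph-1",
             "rtsp://camera.local:554/stream", "user", "password"))
    Console.WriteLine($"{method}: {payload.ToJsonString()}");
```

With the Azure IoT Hub service SDK, each step could then be sent to the module by creating a `CloudToDeviceMethod` with the step name, setting its payload with `SetPayloadJson`, and calling `ServiceClient.InvokeDeviceMethodAsync(deviceId, moduleId, method)`.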

Disk space management

You can now specify how much storage space LVA can use to store processed media when using sink nodes. As part of the sink node configuration, you specify the disk space, in MiB (mebibytes), that LVA may use. Once that threshold is reached, LVA starts removing the oldest media from storage to make space for new media arriving from upstream.
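
The eviction policy described above (remove the oldest media once the MiB budget would be exceeded) can be sketched as follows. The queue-based store, the `StoreSegment` function, and the segment sizes are hypothetical; this models the behavior only, not LVA's on-disk format or the sink node's property names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

const long BytesPerMiB = 1024L * 1024L; // 1 MiB (mebibyte) = 2^20 bytes

// Hypothetical model of a sink's local media store, kept oldest-first in a queue.
// Before a new segment is written, the oldest segments are evicted until it fits
// within the configured budget. Returns the names of the evicted segments.
List<string> StoreSegment(Queue<(string Name, long Bytes)> store, string name, long bytes, long budgetMiB)
{
    long budget = budgetMiB * BytesPerMiB;
    if (bytes > budget)
        throw new ArgumentException($"{name} is larger than the whole {budgetMiB} MiB budget");

    long used = store.Sum(s => s.Bytes);
    var evicted = new List<string>();
    while (used + bytes > budget)
    {
        var oldest = store.Dequeue();
        used -= oldest.Bytes;
        evicted.Add(oldest.Name);
    }
    store.Enqueue((name, bytes));
    return evicted;
}

// Example: a 10 MiB budget filled with 4 MiB segments.
var media = new Queue<(string Name, long Bytes)>();
foreach (var segment in new[] { "seg1", "seg2", "seg3" })
{
    var removed = StoreSegment(media, segment, 4 * BytesPerMiB, budgetMiB: 10);
    Console.WriteLine($"{segment} stored; evicted: [{string.Join(", ", removed)}]");
}
```

With a 10 MiB budget and 4 MiB segments, the first two segments fit, and storing the third evicts `seg1`.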

Live Video Analytics module metrics in the Prometheus format

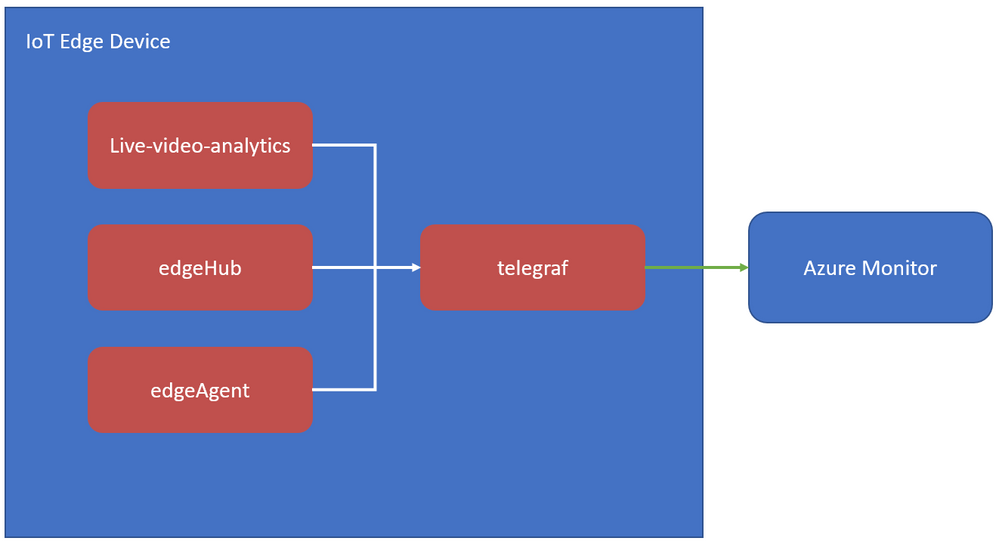

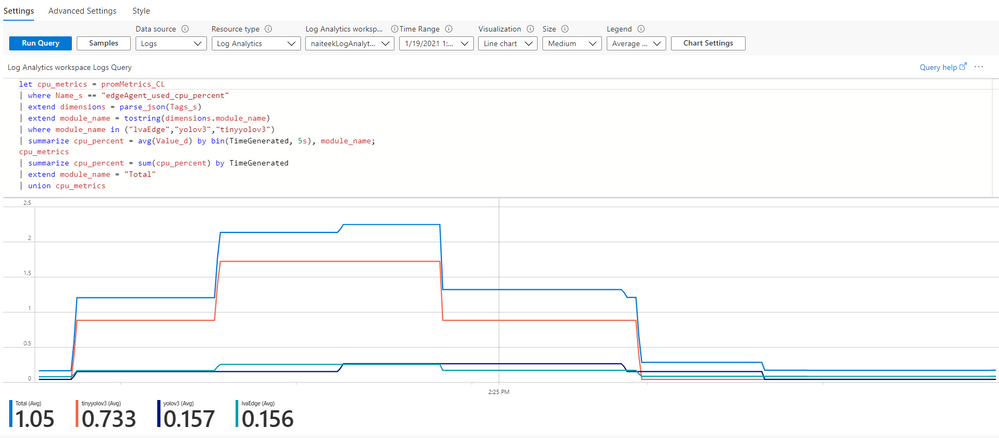

With this release, Telegraf can be used to send LVA Edge module metrics to Azure Monitor. From there, the metrics may be directed to Log Analytics or an Event Hub.

You can learn more about how to collect LVA metrics via Telegraf in our documentation. Using Prometheus along with Log Analytics, you can generate and monitor metrics such as CPUPercent and MemoryUsedPercent. Using the Kusto Query Language, you can write queries that return the CPU percentage used by the IoT Edge modules.
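
Metrics in the Prometheus format are plain-text lines of the form `name{label="value",...} value`. As an illustration of what a scraper such as Telegraf reads, the C# sketch below parses such lines and totals a gauge per label. The metric names `lva_active_graphs` and `lva_dropped_data_total` and the `topology` and `node` labels are hypothetical; consult the LVA metrics documentation for the actual names. The parser skips comment lines and does not handle escaped quotes or every value form (such as `+Inf`).

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;
using System.Text.RegularExpressions;

// Sample line: metric name, optional {labels}, whitespace, value (a trailing timestamp is ignored).
var sampleLine = new Regex(@"^(?<name>[a-zA-Z_:][a-zA-Z0-9_:]*)(\{(?<labels>[^}]*)\})?\s+(?<value>\S+)");
var labelPair = new Regex(@"(?<key>[a-zA-Z_][a-zA-Z0-9_]*)=""(?<val>[^""]*)""");

// Minimal parser for the Prometheus text exposition format (sketch, not a full parser).
IEnumerable<(string Name, Dictionary<string, string> Labels, double Value)> Parse(string text)
{
    foreach (var raw in text.Split('\n'))
    {
        var line = raw.Trim();
        if (line.Length == 0 || line.StartsWith("#")) continue;   // skip blank, HELP and TYPE lines
        var m = sampleLine.Match(line);
        if (!m.Success) continue;
        var labels = labelPair.Matches(m.Groups["labels"].Value)
            .ToDictionary(l => l.Groups["key"].Value, l => l.Groups["val"].Value);
        yield return (m.Groups["name"].Value, labels,
                      double.Parse(m.Groups["value"].Value, CultureInfo.InvariantCulture));
    }
}

// Hypothetical scrape output.
var exposition = @"# HELP lva_active_graphs Hypothetical metric: active graphs per topology
# TYPE lva_active_graphs gauge
lva_active_graphs{topology=""MotionDetection""} 2
lva_active_graphs{topology=""ObjectTracking""} 1
lva_dropped_data_total{topology=""MotionDetection"",node=""grpcExtension""} 5";

// Total active graphs per topology label.
var activeGraphsPerTopology = Parse(exposition)
    .Where(s => s.Name == "lva_active_graphs")
    .GroupBy(s => s.Labels.TryGetValue("topology", out var t) ? t : "(none)")
    .ToDictionary(g => g.Key, g => g.Sum(s => s.Value));

foreach (var (topology, count) in activeGraphsPerTopology)
    Console.WriteLine($"{topology}: {count} active graph(s)");
```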

Ability to filter output selection

LVA now lets you pass only selected media types between nodes. With this release, you can pass both audio and video from RTSP cameras.

In addition, you can specify in LVA processor nodes whether “audio”, “video”, or both need to be passed downstream for further processing.
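
Output selection can be thought of as a filter on the media type of each sample a node forwards. The following C# sketch models the choice of "audio", "video", or both; the `SelectOutput` function and the sample tuples are hypothetical and do not reflect LVA's property names for output selection.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical: each sample forwarded by a node is tagged with its media type.
// Only samples whose type is in allowedTypes are passed downstream.
IEnumerable<(string MediaType, long Timestamp)> SelectOutput(
    IEnumerable<(string MediaType, long Timestamp)> samples, params string[] allowedTypes)
{
    var allowed = new HashSet<string>(allowedTypes, StringComparer.OrdinalIgnoreCase);
    return samples.Where(s => allowed.Contains(s.MediaType));
}

// Interleaved audio and video samples as they might arrive from an RTSP camera.
var fromCamera = new List<(string MediaType, long Timestamp)>
{
    ("video", 0), ("audio", 0), ("video", 3000), ("audio", 1024),
};

var videoOnly = SelectOutput(fromCamera, "video").ToList();
var both = SelectOutput(fromCamera, "audio", "video").ToList();
Console.WriteLine($"video only: {videoOnly.Count} samples, audio+video: {both.Count} samples"); // prints "video only: 2 samples, audio+video: 4 samples"
```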

Get Started Today!

In closing, we’d like to thank everyone who is already participating in the Live Video Analytics on IoT Edge public preview. We appreciate your ongoing feedback to our engineering team as we work together to fuel your success with video analytics both in the cloud and on the edge. For those of you who are new to our technology, we’d encourage you to get started today with these helpful resources:

- Watch the Live Video Analytics intro video

- Find more information on the product details page

- Watch the Live Video Analytics demo

- Try the new Live Video Analytics features today with an Azure free trial account

- Register on the Media Services Tech Community to hear directly from the Engineering team about upcoming features, provide feedback, and discuss roadmap requests

- Download Live Video Analytics on IoT Edge from the Azure Marketplace

- Get started quickly with our C# and Python code samples

- Review our product documentation

- Search the GitHub repo for the LVA open-source project

Finally, the LVA product team is keen to hear about your experiences with LVA. Please contact us at amshelp@microsoft.com with questions and feedback, including the future scenarios you would like us to focus on.