Synthetic Accelerations in a Nutshell – Windows Server 2012

Hi folks,

Dan Cuomo here to talk about the state of synthetic accelerations (sometimes synonymous with VMQ) on Windows Server. This is the first in a series of posts covering synthetic accelerations on 2012, 2012 R2, 2016, and 2019. We recommend that you read through the progression of posts (once available) to make sure you have the proper information for each operating system you’re managing.

Some things never change. They are what they are. For-ev-er.

The sky will always be blue (unless you’re in Seattle), there will always be 60 seconds in every minute (unless there’s a leap second)…ok, well some things change. And due to the literary rule of three, technology changes too.

Virtual Machine Queues (VMQ) is no exception. This technology, which first shipped in Windows Server 2008 R2, spreads the processing of received network packets destined for virtual NICs across different cores. Once 10 GbE adapters became prevalent, network processing quickly consumed more than any single core could handle. The first thing most folks did after getting their shiny new 10 GbE adapter was to copy something over the network to see the blazing fast speed. All looked great: 9+ Gbps! So, you add a couple of VMs and repeat the experiment. Much to your chagrin, you get an underwhelming 3–5 Gbps. What happened? Hyper-V must have a regression!?

No, it’s just that you’re now pegging a single CPU core. **Enter VMQ**

I’ve seen many a blog about the correct way to poke it, prod it, and tweak VMQ into semi-willing submission. Windows Server releases have come and gone, and as they have, the best practices for VMQ have changed considerably. So, in this blog series we’ll try to dispel the myths and give you the details you need to configure VMQ properly on each of the in-market versions, starting with today’s post on Windows Server 2012. We’ll also cover the key changes in 2012 R2, 2016, and 2019 so you understand how to configure systems running each of these operating systems.

But first, some background…

Background

First, you may have noticed that this blog is not titled VMQ Deep Dive 1, 2, or 3. Those previously published articles were awesome resources in their time (in fact, I’m borrowing some of their original content for these articles). But time marches on and, coinciding with our migration to the new blogging site, there’s a need to set proper context for the operating systems available today.

VMQ used to be an independent feature in the operating system, and as such, it became synonymous with network acceleration on Hyper-V. That’s no longer the case as you’ll see in the sections on Windows Server 2016 and 2019. In fact, we now call the VMQ described in this article “Legacy VMQ” (as compared to vPort-based VMQ which will be explained in the 2016 and 2019 articles).

Credit where credit is due. The original articles that are being replaced/updated here are:

- Gabe Silva - VMQ Deep Dive, 1 of 3

- Gabe Silva - VMQ Deep Dive, 2 of 3

- Gabe Silva - VMQ Deep Dive, 3 of 3

- Marco Cancillo - Virtual Machine Queue (VMQ) CPU assignment tips and tricks

The title of this series refers to the data path that packets travel through the system to reach their intended destination: a virtual NIC in either the host or a guest.

So, what do we mean by the “synthetic” data path? It is the path taken by traffic that passes through the virtual switch. At a high level, the data path looks like this:

Packets come off the wire into the NIC and are handed to the miniport driver from the NIC vendor. The virtual switch processes the packets and sends the data across the VMBus to the vmNIC, where the network stack in the VM processes it. This is the most common data path for network communication in Hyper-V and, as you can see, the packets pass through the virtual switch, which requires the use of physical CPU cores.

Note: This does not apply to RDMA or to other technologies that bypass the virtual switch, such as SR-IOV.

Now let’s zoom into the NIC architecture and interoperability with the 2012 OS family.

NIC Architecture in 2012

Every packet flow that enters a NIC is assigned a hardware queue in that NIC, whether you’re using Hyper-V or not. How the adapter filters the packets depends on whether a virtual switch is attached to that adapter.

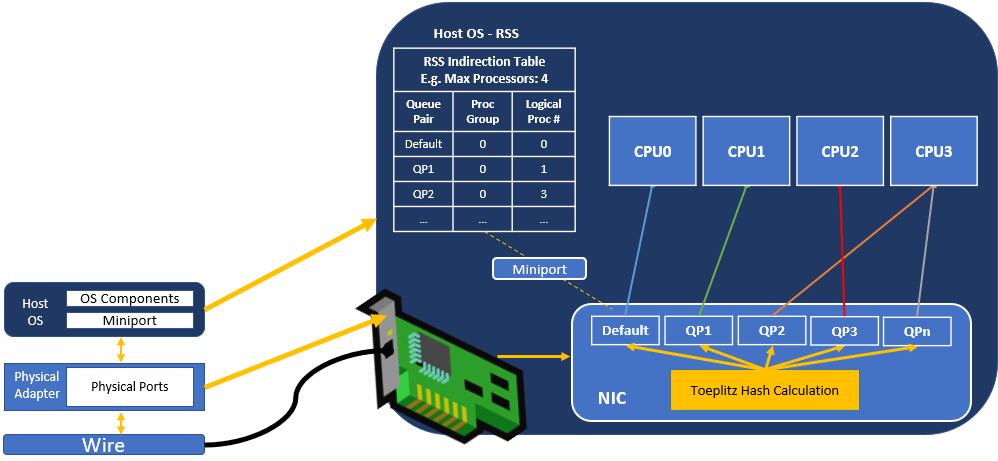

For example, if an adapter does not have a virtual switch attached, the NIC calculates a hash over each packet's headers to distribute the packets across different queues in the NIC. The miniport driver then raises an interrupt or schedules a DPC (deferred procedure call) on a CPU core so that core processes the incoming packets. This is how RSS works.

Note: Each queue is a pair of queues; one send, one receive. The queues below are denoted as “QP” or queue pair.

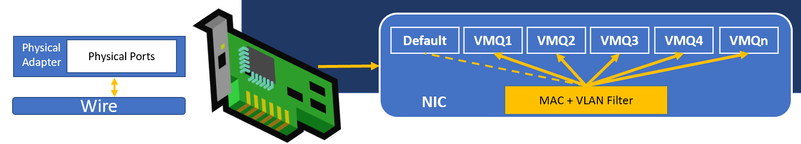

However, when a virtual switch is attached, the NIC creates a filter for each vmNIC’s MAC and VLAN combination on the Hyper-V Virtual Switch (vSwitch). When a packet arrives, the NIC checks whether its destination MAC and VLAN match one of those filters. If there is a match, the packet is sent to the queue assigned to that filter; if there is no match, it is sent to the default queue. There is always a default queue.
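To make the filtering behavior concrete, here is a small PowerShell model of the lookup described above. It is a sketch only: the real matching happens in NIC hardware and the miniport driver, and the function name `Get-VmqQueueForPacket`, the `MAC|VLAN` key format, and the MAC, VLAN, and queue values are all hypothetical. Queue 0 stands in for the default queue.

```powershell
# Simplified model of the VMQ receive filter; the real lookup happens in the NIC.
# Function name, key format, and values are hypothetical.
function Get-VmqQueueForPacket {
    param(
        [hashtable]$FilterTable,   # key "MAC|VLAN" -> queue ID assigned to a vmNIC
        [string]$DestinationMac,
        [int]$VlanId,
        [int]$DefaultQueue = 0     # stands in for the always-present default queue
    )
    $key = '{0}|{1}' -f $DestinationMac.ToUpperInvariant(), $VlanId
    if ($FilterTable.ContainsKey($key)) {
        return $FilterTable[$key]  # match: the queue assigned to that filter
    }
    return $DefaultQueue           # no match: the shared default queue
}

# One filter per vmNIC MAC/VLAN combination on the vSwitch.
$filters = @{
    '00-15-5D-00-00-01|10' = 1
    '00-15-5D-00-00-02|10' = 2
}

Get-VmqQueueForPacket -FilterTable $filters -DestinationMac '00-15-5D-00-00-02' -VlanId 10  # 2
Get-VmqQueueForPacket -FilterTable $filters -DestinationMac '00-15-5D-00-00-09' -VlanId 10  # 0 (default queue)
```

Note that a known MAC on the wrong VLAN also falls through to the default queue, because the filter matches on the combination of both values.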

Disabling RSS

In this way, RSS was functionally disabled for that adapter the moment you attached a vSwitch. Regardless of what you see in Get-NetAdapterRSS, RSS is not in use on that adapter with this version of the operating system. Due to some unfortunate support challenges, particularly with NIC drivers during the early days of VMQ, a common recommendation was to manually disable RSS when VMQ is enabled on the system.

We do not support disabling RSS for any operating system for a couple of reasons:

- Disabling RSS disables it for every adapter. Any adapter not attached to the virtual switch can still use RSS and as such would be negatively impacted by this change.

- As you’ll see in the future articles, there is a budding friendship between these features (RSS and VMQ). They’ll soon become fast friends…like peanut butter and jelly…

Important: Disabling RSS is not a supported configuration on Windows. RSS can be temporarily disabled by Microsoft Support for troubleshooting whether there is an issue with the feature. RSS should not be permanently disabled, which would leave Windows in an unsupported state.

Available Processors

Virtual NICs on Windows Server 2012 will, by default, use one, and only one, core to process all the network traffic coming into the system. That core is CPU0.

This meant that without any changes from you, with a VMQ certified NIC and driver, your overall system throughput would still be limited to what CPU0 could attain. To remedy this, you had to tell Windows that it was OK for these queues to send their packets to other CPU cores as shown in the next couple of sections.

Configuration of Legacy VMQ

Enabling / Disabling VMQ

To enable or disable VMQ use the Enable-NetAdapterVmq and Disable-NetAdapterVmq PowerShell cmdlets on the host adapters.

Note: Depending on your specific adapter, you may also need to review Get-NetAdapterAdvancedProperty and make sure the adapter is showing link up.
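A minimal sketch of the enable step, assuming the adapter names pNIC01 and pNIC02 from the example server described later in this article (substitute your own). `Disable-NetAdapterVmq` takes the same `-Name` parameter. `*VMQ` is the standardized advanced-property keyword for VMQ, but whether a given driver exposes it depends on the vendor.

```powershell
# Enable VMQ on both example physical adapters.
Enable-NetAdapterVmq -Name pNIC01, pNIC02

# Confirm the adapters report link up and that VMQ now shows as enabled.
Get-NetAdapter -Name pNIC01, pNIC02 | Format-Table Name, Status, LinkSpeed
Get-NetAdapterVmq -Name pNIC01, pNIC02 | Format-Table Name, Enabled

# Check the driver's advanced property for VMQ (keyword support varies by vendor).
Get-NetAdapterAdvancedProperty -Name pNIC01, pNIC02 -RegistryKeyword '*VMQ'
```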

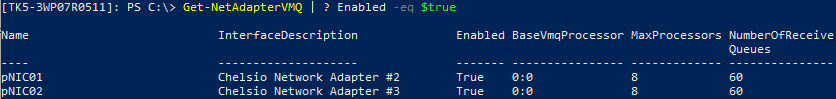

Next, identify the number of VMQs available. The number of queues available on your NIC can be found by running the PowerShell cmdlet Get-NetAdapterVmq and looking at the column NumberOfReceiveQueues as shown here.
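For example, the following lists the queue count for every adapter and then totals it for the two example adapters (pNIC01 and pNIC02 are the names used in this article's example; substitute your own):

```powershell
# Queue count reported by each physical adapter.
Get-NetAdapterVmq | Format-Table Name, InterfaceDescription, Enabled, NumberOfReceiveQueues

# Total across the two example adapters (relevant when a team runs in sum-of-queues mode).
(Get-NetAdapterVmq -Name pNIC01, pNIC02 | Measure-Object -Property NumberOfReceiveQueues -Sum).Sum
```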

If your adapters are teamed, the total number of queues available to the devices attached to the team may be either the sum-of-queues from all adapters in the team, or could be limited to the min-of-queues available on any one single adapter. This depends on the teaming options selected when the team was created. For more information, please see the NIC Teaming documentation.

For the purposes of this guide, we’ll use the recommended sum-of-queues mechanism, which requires the team to use Switch Independent teaming mode and either Hyper-V Port or Dynamic load balancing.
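The sum-versus-min rule can be expressed as a small PowerShell function. This is a simplified model, not how LBFO computes it internally: the function name `Get-TeamVmqCount` is hypothetical, and the mode strings mirror the `TeamingMode` and `LoadBalancingAlgorithm` values reported by `Get-NetLbfoTeam`.

```powershell
# Simplified model: how many VMQs an LBFO team can offer its virtual NICs.
function Get-TeamVmqCount {
    param(
        [int[]]$QueuesPerAdapter,          # NumberOfReceiveQueues of each team member
        [string]$TeamingMode,              # e.g. SwitchIndependent, Static, Lacp
        [string]$LoadBalancingAlgorithm    # e.g. HyperVPort, Dynamic, TransportPorts
    )
    # Sum-of-queues requires Switch Independent mode plus Hyper-V Port or Dynamic.
    $sumOfQueues = ($TeamingMode -eq 'SwitchIndependent') -and ($LoadBalancingAlgorithm -in @('HyperVPort', 'Dynamic'))
    $stats = $QueuesPerAdapter | Measure-Object -Sum -Minimum
    if ($sumOfQueues) { return [int]$stats.Sum }   # sum-of-queues
    return [int]$stats.Minimum                      # min-of-queues
}

Get-TeamVmqCount -QueuesPerAdapter 60, 60 -TeamingMode SwitchIndependent -LoadBalancingAlgorithm HyperVPort  # 120
```

On a live host you could feed it the `TeamingMode`, `LoadBalancingAlgorithm`, and `Members` properties from `Get-NetLbfoTeam -Name LBFO`, together with each member's `NumberOfReceiveQueues` from `Get-NetAdapterVmq`. For the example server in the next section (two adapters with 60 queues each, Switch Independent, Hyper-V Port), it returns 120.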

Note: In Windows Server 2012 and 2012 R2, only LBFO teaming was available. We do not recommend using LBFO on Windows Server 2016 and 2019 unless you are on a native host (without Hyper-V).

Engaging additional CPU Cores

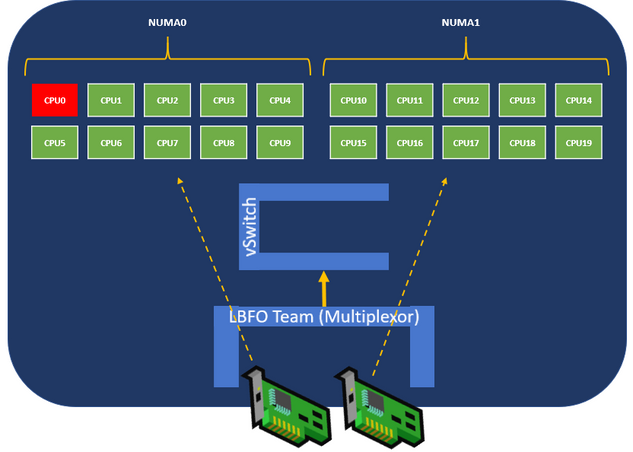

As mentioned earlier, by default Windows Server 2012 engages only CPU0 on NUMA0. To change which CPU cores VMQ uses, use the Set-NetAdapterVmq cmdlet. This cmdlet uses similar parameters to Set-NetAdapterRSS. There are many possible configurations (for more information, please see the documentation for Set-NetAdapterVmq); however, we’ll use a simple example to show how to use the cmdlets.

Consider a server that has the following hardware and software configuration:

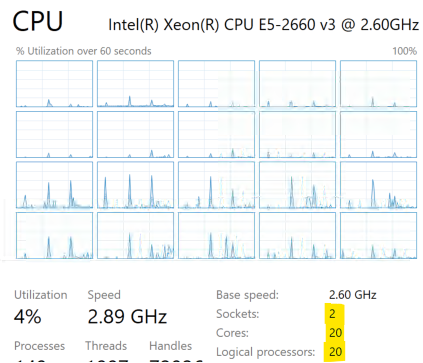

- 2 CPUs, each with 10 cores and 10 logical processors; hyperthreading is disabled

Note: Because the two hyperthreaded logical processors on a core share the same execution engine, the effect is not the same as having multiple cores. For this reason, RSS and VMQ do not use the hyperthreaded sibling processors.

- 2 physical NICs

- Names: pNIC01, pNIC02

- 60 VMQs (NumberOfReceiveQueues) per interface

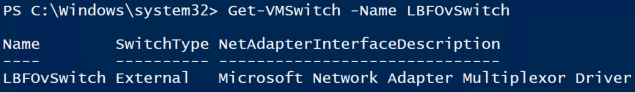

- NICs are connected to an LBFO team intuitively named: LBFO

- Teaming Mode: Switch Independent

- LoadBalancingAlgorithm: Hyper-V Port

Note: In Windows Server 2016 and 2019, we no longer recommend using LBFO with the virtual switch.

- A virtual switch named LBFOvSwitch is attached to the LBFO team

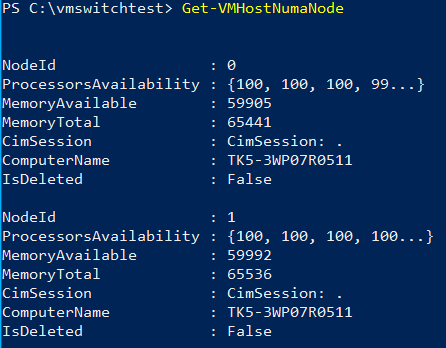
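If you are building this example yourself on Windows Server 2012, the team and virtual switch could be created roughly as follows. This is a sketch using the article's example names (pNIC01, pNIC02, LBFO, LBFOvSwitch); the team interface created by `New-NetLbfoTeam` takes the team's name, which is why the switch binds to `LBFO`.

```powershell
# Team the two example adapters: Switch Independent mode with Hyper-V Port load balancing.
New-NetLbfoTeam -Name LBFO -TeamMembers pNIC01, pNIC02 -TeamingMode SwitchIndependent -LoadBalancingAlgorithm HyperVPort

# Attach an external virtual switch to the team interface.
New-VMSwitch -Name LBFOvSwitch -NetAdapterName LBFO
```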

Before we begin modifying anything, let’s check whether there are any differences in the NUMA configuration of the adapters. First, let’s see if we have multiple NUMA nodes.

Then, let’s see how the pNICs are distributed across those NUMA nodes:
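One way to run both checks, assuming the Hyper-V PowerShell module is installed and using the example adapter names:

```powershell
# List the host's NUMA nodes (Hyper-V module).
Get-VMHostNumaNode

# Show which NUMA node each physical NIC is attached to.
Get-NetAdapterHardwareInfo -Name pNIC01, pNIC02 | Format-Table Name, NumaNode
```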

As you can see above, both pNICs are on NumaNode 1. If your adapters are attached to different NUMA nodes, we recommend assigning each NIC to processors on its own NUMA node for improved performance.

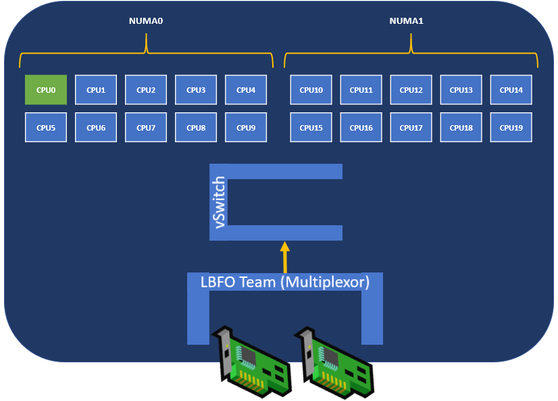

Here’s the basic structure. As you can see, the virtual switch can currently “see” only one processor: CPU0.

If you run Get-NetAdapterVmq on the system (now teamed), by default, it’ll look like this:

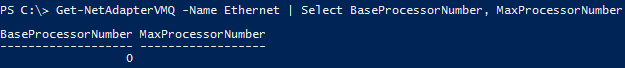

Specifically, the MaxProcessorNumber is not defined.
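To see these values directly, list the processor-related VMQ properties for each team member (example adapter names). Running this before and after making changes is a simple way to confirm what the adapters will use; on a default Windows Server 2012 system you would expect MaxProcessorNumber to be empty, as described above.

```powershell
# Processor-related VMQ settings for each physical team member.
Get-NetAdapterVmq -Name pNIC01, pNIC02 |
    Format-List Name, Enabled, BaseProcessorGroup, BaseProcessorNumber, MaxProcessorNumber, MaxProcessors, NumberOfReceiveQueues
```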

There are a couple of problems with this configuration:

- CPU0 is engaged – CPU0 is typically the most overburdened CPU on the system and so a virtual machine whose VMQ lands on this processor will compete with all other processing on that system. It’s best if we could avoid this processor.

- Only CPU0 is engaged – Currently all VMs are using this processor to service received network traffic. Since we have a bunch of other cores, we should use them so the VMs can get better performance.

- MaxProcessors is configured to 8, but there are 19 processors on the system excluding CPU0 (10 per NUMA node). This means that the adapter will only assign VMQs to 8 of the available processors.

- Overlapping of processors is not ideal - Given we’re limited to a single VMQ per virtual NIC, and VMQs cannot be reassigned to a new processor if under load, we need to minimize the chances of different adapters assigning VMQs to the same processor.
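One way to catch overlapping assignments is a simple range check. The helper below, `Find-VmqRangeOverlap`, is hypothetical: it treats each adapter's BaseProcessorNumber to MaxProcessorNumber span as an inclusive range and reports every pair of adapters whose ranges intersect. It assumes both values are set (the default configuration leaves MaxProcessorNumber undefined) and is meant for sum-of-queues mode; in min-of-queues mode the processors are supposed to be the same, as the note below explains.

```powershell
# Hypothetical helper: report adapter pairs whose VMQ processor ranges overlap.
function Find-VmqRangeOverlap {
    param(
        [object[]]$Ranges   # objects with Name, BaseProcessorNumber, MaxProcessorNumber
    )
    $overlaps = @()
    for ($i = 0; $i -lt $Ranges.Count; $i++) {
        for ($j = $i + 1; $j -lt $Ranges.Count; $j++) {
            $a = $Ranges[$i]
            $b = $Ranges[$j]
            # Two inclusive ranges overlap when each one starts at or before the other ends.
            if (($a.BaseProcessorNumber -le $b.MaxProcessorNumber) -and
                ($b.BaseProcessorNumber -le $a.MaxProcessorNumber)) {
                $overlaps += '{0}/{1}' -f $a.Name, $b.Name
            }
        }
    }
    $overlaps
}

# The layout used later in this article: no output, because the ranges do not overlap.
$example = @(
    [pscustomobject]@{ Name = 'pNIC01'; BaseProcessorNumber = 1;  MaxProcessorNumber = 9 }
    [pscustomobject]@{ Name = 'pNIC02'; BaseProcessorNumber = 10; MaxProcessorNumber = 19 }
)
Find-VmqRangeOverlap -Ranges $example
```

Because `Get-NetAdapterVmq` output carries `Name`, `BaseProcessorNumber`, and `MaxProcessorNumber`, you could also pass it in directly, for example `Find-VmqRangeOverlap -Ranges (Get-NetAdapterVmq -Name pNIC01, pNIC02)`.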

Note: If you’re in min-of-queues mode (e.g. using LACP or address hash), the processors assigned to the NICs in the team must be the same; you’ll need to run the same commands on each pNIC and cannot split the adapters in the manner shown below. We do not recommend running in min-of-queues mode. If you are misconfigured based on the defined queue mode, you will see event 106. Please see this KB article for more information.

To remedy the previously outlined issues, let’s configure the following VMQ-to-processor layout:

If you have Hyperthreading enabled, you’d visualize it like this:

![Annotation 2019-04-17 100721.jpg [EDIT] Picture updated to include all applicable processors](https://techcommunity.microsoft.com/t5/image/serverpage/image-id/109353i289FE44E5A2DDE4D/image-size/large?v=v2&px=999)

Generally, we’d recommend assigning enough processors to max out the adapter’s available throughput. For example, if you have a 10 GbE NIC, you’d be best served by assigning a minimum of 4 cores to ensure that in the worst case, where an adapter can only process about 3 Gbps on each core, you’re getting the maximum out of your NICs.
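The sizing guideline is simple arithmetic: divide the link speed by the worst-case per-core throughput and round up. A minimal sketch, with the 3 Gbps per-core figure from the paragraph above as an adjustable assumption (the function name is hypothetical):

```powershell
# Hypothetical helper: minimum cores needed to drive a link at the worst-case per-core rate.
function Get-MinVmqCoreCount {
    param(
        [double]$LinkSpeedGbps,
        [double]$GbpsPerCore = 3   # worst-case per-core figure used in this article
    )
    [int][math]::Ceiling($LinkSpeedGbps / $GbpsPerCore)
}

Get-MinVmqCoreCount -LinkSpeedGbps 10   # 4 (10 / 3 = 3.33, rounded up)
```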

Note: Some customers choose to skip the first core on the additional NUMA nodes as well. This is simpler to automate, and it guarantees similar performance to all VMs regardless of which adapter services their received network traffic. This is a customer choice and is not required, as this example shows.
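The layout above can also be generated rather than worked out by hand. The hypothetical helper below, `Get-VmqProcessorPlan`, assumes one NIC per NUMA node, logical processors numbered contiguously within each node (with hyperthreading, the two logical processors of a core are adjacent, e.g. 0 and 1), and a single processor group. It skips core 0 on the first node only, matching this example rather than the optional skip-the-first-core-on-every-node approach.

```powershell
# Hypothetical helper: per-adapter Set-NetAdapterVmq values, one NIC per NUMA node.
# Assumes contiguous numbering per node, adjacent hyperthread siblings, one processor group.
function Get-VmqProcessorPlan {
    param(
        [string[]]$AdapterNames,    # one adapter per NUMA node, in node order
        [int]$CoresPerNumaNode,
        [switch]$HyperThreading     # two logical processors per core
    )
    $lpPerCore = if ($HyperThreading) { 2 } else { 1 }
    $lpPerNode = $CoresPerNumaNode * $lpPerCore

    for ($node = 0; $node -lt $AdapterNames.Count; $node++) {
        $base  = $node * $lpPerNode              # first logical processor on this node
        $max   = $base + $lpPerNode - 1          # last logical processor on this node
        $cores = $CoresPerNumaNode
        if ($node -eq 0) {
            $base  += $lpPerCore                 # skip core 0: CPU0 (and CPU1 with hyperthreading)
            $cores -= 1
        }
        [pscustomobject]@{
            Name                = $AdapterNames[$node]
            BaseProcessorNumber = $base
            MaxProcessorNumber  = $max
            MaxProcessors       = $cores
        }
    }
}

# The example server: two NUMA nodes with 10 cores each, hyperthreading disabled.
Get-VmqProcessorPlan -AdapterNames pNIC01, pNIC02 -CoresPerNumaNode 10 | Format-Table
```

For the example server it returns BaseProcessorNumber 1, MaxProcessorNumber 9, MaxProcessors 9 for pNIC01 and 10, 19, 10 for pNIC02, the same values used in the commands below. With `-HyperThreading`, pNIC01 starts at 2 and pNIC02 spans 20 to 39. To apply a plan, you could pipe it to `ForEach-Object { Set-NetAdapterVmq -Name $_.Name -BaseProcessorNumber $_.BaseProcessorNumber -MaxProcessorNumber $_.MaxProcessorNumber -MaxProcessors $_.MaxProcessors }`.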

Next, run these commands to have pNIC01 assign VMQs to processors 1–9 and pNIC02 assign VMQs to processors 10–19:

```powershell
Set-NetAdapterVmq -Name pNIC01 -BaseProcessorNumber 1 -MaxProcessorNumber 9 -MaxProcessors 9
Set-NetAdapterVmq -Name pNIC02 -BaseProcessorNumber 10 -MaxProcessorNumber 19 -MaxProcessors 10
```

BaseProcessorNumber: Numerically the lowest CPU core that can be engaged by queues on this NIC

- pNIC01 starts at core 1

- pNIC02 starts at core 10

MaxProcessorNumber: Numerically the maximum CPU core that can be engaged by queues on this NIC

- pNIC01 ends at core 9

- pNIC02 ends at core 19

MaxProcessors: The maximum number of CPU cores from the range above that can have VMQs assigned

- pNIC01 can assign VMQs to 9 processors

- pNIC02 can assign VMQs to 10 processors

Now the configuration looks like this:

Implications of the default queue

VMQs are a finite resource in the hardware. As you’ve likely seen in some of the earlier screenshots, NICs from those time periods did not have the same amount of resources as they do today and, as a result, could not provide as many queues.

By default, every virtual machine requests a VMQ; however, if the host does not have any more to hand out, the VM lands on the default queue, which is shared by all VMs that don’t have a dedicated queue. This poses two problems:

- If VMs share a queue they’re sharing the overall bandwidth that can be generated by the processing power on the selected CPU core

- As VMs live migrate, they will land on a new host that may not be able to provide them the same level of performance as the old host

Therefore, if you have a large number of VMs, first make sure that you optimize the placement of your VMs. If you’ve “over-provisioned” the number of VMs compared to the number of queues available, make sure to allocate queues only to the VMs that need them: decide which virtual adapters are high bandwidth (e.g. 3–6 Gbps) or require consistent bandwidth, and disable VMQ on the other VMs’ adapters.

To mark a virtual adapter as ineligible for a VMQ, use the Set-VMNetworkAdapter cmdlet with the -VmqWeight 0 parameter. VmqWeight interprets the value 0 as disabled and any non-zero value as enabled; there is no difference between different non-zero values.
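For example, to opt a lower-priority VM out of VMQ and then review which virtual adapters remain eligible (the VM name is hypothetical; setting the weight back to a non-zero value, such as the default of 100, makes the adapter eligible again):

```powershell
# Opt a lower-priority VM's virtual adapters out of VMQ (hypothetical VM name).
Set-VMNetworkAdapter -VMName 'VM-LowPriority' -VmqWeight 0

# Review VMQ eligibility across all VMs: 0 = ineligible, any non-zero value = eligible.
Get-VM | Get-VMNetworkAdapter | Format-Table VMName, Name, VmqWeight
```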

Troubleshooting

Allocated Queues

To verify that a queue was allocated for a virtual machine, use Get-NetAdapterVmqQueue and review the VMFriendlyName or MAC Address in the table output.
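A sketch of that check, using the example adapter names and a hypothetical VM named VM01. If the VM does not appear with its own queue, its traffic is most likely being handled by the default queue.

```powershell
# Queues allocated on each physical adapter, including the VM that owns each one.
Get-NetAdapterVmqQueue -Name pNIC01, pNIC02 |
    Format-Table Name, QueueID, MacAddress, VlanID, Processor, VmFriendlyName

# Look for a specific VM (hypothetical name).
Get-NetAdapterVmqQueue -Name pNIC01, pNIC02 | Where-Object VmFriendlyName -eq 'VM01'
```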

Performance Monitor

Using Performance Monitor (Start > Run > perfmon), there are a few counters that help evaluate VMQ. Add the following counters under the Hyper-V Virtual Switch Processor category:

- Number of VMQs – The number of VMQs affinitized to that processor

- Packets from External – Packets indicated to a processor from any external NIC

- Packets from Internal – Packets indicated to a processor from any internal NIC, such as a vmNIC or vNIC
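These counters can also be sampled from PowerShell with `Get-Counter`. The counter paths below use the names listed above; if they differ on your build, `Get-Counter -ListSet 'Hyper-V Virtual Switch Processor'` shows the exact names. The sample interval and count are arbitrary choices.

```powershell
# Counter names as listed above, for every processor instance.
$counters = @(
    '\Hyper-V Virtual Switch Processor(*)\Number of VMQs'
    '\Hyper-V Virtual Switch Processor(*)\Packets from External'
    '\Hyper-V Virtual Switch Processor(*)\Packets from Internal'
)

# Take five samples, two seconds apart, and list the per-processor values.
Get-Counter -Counter $counters -SampleInterval 2 -MaxSamples 5 |
    ForEach-Object { $_.CounterSamples } |
    Format-Table Path, CookedValue -AutoSize
```

Processors that show packets but are outside the range you configured with Set-NetAdapterVmq point to the wrong-processor symptom described next.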

Packets processed on the wrong processor

During the 2012 timeframe, we often saw packets being processed on the wrong VMQ processors and not respecting the defined processor array. This is largely resolved through NIC driver and firmware updates.

Symptoms include a drastic, unexplained drop in throughput or simply low throughput. This is easy to troubleshoot using the counters above.

Summary of Requirements

To summarize what we’ve covered here, there are several requirements:

- Install latest drivers and firmware

- Processor Array engaged by default – CPU0

- Configure the system to avoid CPU0 on non-hyperthreaded systems, and CPU0 and CPU1 on hyperthreaded systems (e.g. BaseProcessorNumber should be 1 or 2)

- Configure the MaxProcessorNumber to establish that an adapter cannot use a processor higher than this

- Configure MaxProcessors to establish how many processors out of the available list a NIC can spread VMQs across simultaneously, and to maximize the adapter’s throughput. We recommend setting this to the number of processors in the processor array

- Test the workload – Leave no stone unturned

Other best practices

These best practices should be combined with the information in the Summary of Requirements section above.

- Use sum-of-queues to maximize the number of available VMQs

- Don’t span a single adapter’s queues across NUMA nodes

- If using teaming, specify Switch Independent and Hyper-V Port or Dynamic to ensure you have the maximum number of VMQs available; we recommend Hyper-V Port

- If you have more VMs than VMQs, make sure to define which VMs get the VMQs so you have some semblance of consistent performance

- Do NOT disable RSS unless directed by Microsoft support for troubleshooting

Summary of Advantages

- Full Utilization of the NIC – Given adequate processing resources the full speed of the physical adapter attached to a virtual switch can be utilized if properly configured

Summary of Disadvantages

- Default Queue – There is only one default queue – All VMs that do not get their own queue will share this queue and will experience lower performance than other VMs

- Static Assignment – Since queues are statically assigned and cannot be moved once established, if sharing a CPU core with other “noisy” VMs, performance can be inconsistent

- One VMQ per virtual NIC – Only one queue is assigned to each vNIC or vmNIC – a single virtual adapter can reach a maximum of 6 Gbps on a well-tuned system

As you can see, there are a number of complex configuration options that must be considered when deploying VMQ in order to reach the maximum throughput of your system. In future articles, you'll see how we improve the reliability and performance, ultimately increasing your system's VM density in Windows Server 2012 R2, 2016, and 2019.

Thanks for reading,

Dan Cuomo
