Placement of the File Share Witness (FSW) on a Geographically Dispersed CCR Cluster

Update 6/4/2008: Please note that we have posted our new guidance around this subject here: New File Share Witness and Force Quorum Guidance for Exchange 2007 Clusters.

Exchange Server 2007 introduced Cluster Continuous Replication (CCR), which combines the log shipping and replay features of Exchange 2007 with a Majority Node Set (MNS) cluster. CCR uses the Microsoft Cluster service to provide failover to the passive node. An Exchange 2007 CCR cluster can be configured with two nodes and a File Share Witness (FSW). The FSW is the recommended option because a third cluster node is then not required. It takes the place of the voter node that was used prior to update 921181. This hotfix allows a file share to be used as the quorum resource, so no shared disk is needed. The cluster nodes use the FSW for arbitration. At least two of the three voters (the two nodes and the FSW) must be up and communicating to maintain a majority; otherwise all cluster resources go offline and the cluster service shuts down cleanly.
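
The majority rule above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not how the Cluster service is implemented; the function name `has_majority` is hypothetical.

```python
def has_majority(voters_online: int, total_voters: int = 3) -> bool:
    """Return True when strictly more than half of the voters are reachable."""
    return voters_online > total_voters // 2


# Two CCR nodes plus the FSW give three voters.
for online in range(4):
    state = "cluster stays up" if has_majority(online) else "cluster service shuts down"
    print(f"{online} of 3 voters online: {state}")
```

With three voters, losing any single voter still leaves two of three, which is why a two-node CCR cluster with an FSW can tolerate one failure.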

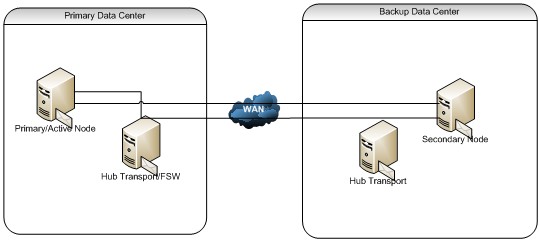

With a CCR cluster it is possible to place each node in a separate datacenter; however, the nodes must be on the same subnet. This is a Windows Clustering limitation that will be removed with Longhorn Server. With the nodes in separate datacenters, where should you place the FSW? Microsoft recommends placing the FSW in the same datacenter as the preferred owning node of the CCR cluster. This recommendation is based on manageability and on failure statistics, which show that the most common network failures are WAN related, not LAN related. This means that when the cluster is geographically dispersed, the highest risk of communication failure between 'voters' in the CCR implementation is between the Active and Passive nodes. If we place the FSW in the datacenter with the preferred owning node, a loss of communication between the datacenters will not cause the cluster service on that node to shut down. We recommend placing the FSW on a server running the Hub Transport role.
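
To see why the placement matters during a WAN outage, here is a minimal sketch that counts voters per site and reports which site still holds a majority when the sites cannot reach each other. The site names, voter names, and the function `surviving_sites` are assumptions made for illustration; this is a simplified model, not cluster code.

```python
from typing import Dict, List


def surviving_sites(voter_sites: Dict[str, str]) -> List[str]:
    """Given a voter -> site map, return the sites that still hold a
    majority of all voters when every inter-site (WAN) link is down."""
    total = len(voter_sites)
    counts: Dict[str, int] = {}
    for site in voter_sites.values():
        counts[site] = counts.get(site, 0) + 1
    return sorted(site for site, n in counts.items() if n > total // 2)


# Hypothetical layouts: FSW next to the active node vs. next to the passive node.
fsw_with_active = {"active_node": "primary", "fsw": "primary", "passive_node": "backup"}
fsw_with_passive = {"active_node": "primary", "fsw": "backup", "passive_node": "backup"}

print(surviving_sites(fsw_with_active))   # ['primary']
print(surviving_sites(fsw_with_passive))  # ['backup']
```

In this model, keeping the FSW with the active node means a WAN outage leaves the active side with two of three voters, so it keeps running. With the FSW on the passive side, the same outage would take the majority away from the active node.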

Note: We also recommend that you create a CNAME record in DNS for the server hosting the share, instead of the actual server name. When creating the share for the file share witness use the fully-qualified domain name for the CNAME record instead of the server name, as this practice assists with site resilience.

Please see article 281308 for some configuration issues with using the CNAME to access the FSW share.

The examples that follow assume this configuration:

- The FSW has been created in the primary data center on the Hub Transport Server.

- CCR Geo-Cluster has been configured.

- IP subnets have been properly configured.

- FSW share has been previously configured in the Backup Data Center.

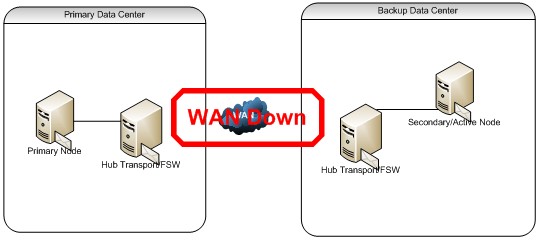

So what happens if we lose the primary datacenter? In that case there can be no automatic failover to the passive node. We would have lost the active node as well as the FSW, so the cluster would no longer have a majority of its three voters (the two nodes and the FSW), and the cluster service on the passive node would shut down. Manual intervention is necessary in this scenario to force the quorum and to redirect the FSW to a new share in the secondary datacenter:

1.) Update the CNAME to point to a Hub Transport Server in the Backup Data Center.

2.) Force the quorum to start the cluster service on the Secondary Node:

net start clussvc /forcequorum node_list

Please see article 258078, Cluster service startup options, for more details.

3.) At this point the Cluster service will start and you should be able to bring your cluster resources on-line.

The FSW share at the secondary site should already exist. The recommended location is on the Hub Transport server in the backup datacenter. You can easily script the previous steps if desired, but the Primary Data Center failure will require manual intervention.
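
As a hedged sketch of what scripting step 2 might look like, the Python below builds the force quorum command shown earlier and only prints it unless told otherwise. The helper names and the node name `NODE2` are hypothetical, and the node list is passed through unchanged because its exact format is described in article 258078, not here. Step 1 (the CNAME update) is left out because it depends on the DNS tooling in your environment.

```python
import subprocess
from typing import List


def force_quorum_command(node_list: str) -> List[str]:
    """Build the step 2 command; node_list is passed through unchanged."""
    return ["net", "start", "clussvc", "/forcequorum", node_list]


def run_recovery(node_list: str, dry_run: bool = True) -> List[str]:
    """Print the command by default; run it only when dry_run is False."""
    cmd = force_quorum_command(node_list)
    if dry_run:
        print("Would run:", " ".join(cmd))
    else:
        # Must be executed on the surviving node with administrative rights.
        subprocess.run(cmd, check=True)
    return cmd


# NODE2 is a placeholder for the surviving node's name.
run_recovery("NODE2")
```

Defaulting to a dry run keeps the decision to force quorum in the operator's hands, which matches the point that a Primary Data Center failure requires manual intervention.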

For the procedure to create and secure the file share for the FSW please refer to:

http://technet.microsoft.com/en-us/bb124922.aspx

What happens if the Primary Data Center comes back on-line, but the WAN link is down to the Backup Data Center? The following illustrates this scenario:

1.) When the Primary Node comes up in the Primary Data Center, it would not see the Secondary node.

2.) The Primary Node would still resolve the CNAME to the local FSW, because the local DNS has not yet synced with the DNS in the Backup Data Center and so does not have the updated record.

3.) The FSW in the Primary Data Center would show that the Primary Node has a current version of the cluster database, so the Primary Node would form a cluster. At this point we have hit the Split Brain Syndrome (a scenario in which an MNS cluster is partitioned because of a network communication failure, and each partition considers itself the owner and brings resources online).

To avoid hitting the Split Brain Syndrome, either delete the files in the FSW share in the primary datacenter before starting the Primary Node, or do not start the server where that FSW resides. If the WAN link has been restored and the Primary Node has not yet been started, propagate the DNS updates if possible so that the Primary Node will use the FSW in the Backup Data Center.

Note: You should ensure that your servers are not configured to power back on automatically. Most current servers have a BIOS setting that controls what happens when power returns. It can be set to remember the previous power state, turn on, or leave off. The servers should be set to "leave off", so that when power is restored to the Data Center the servers can be brought up in a controlled, managed way.

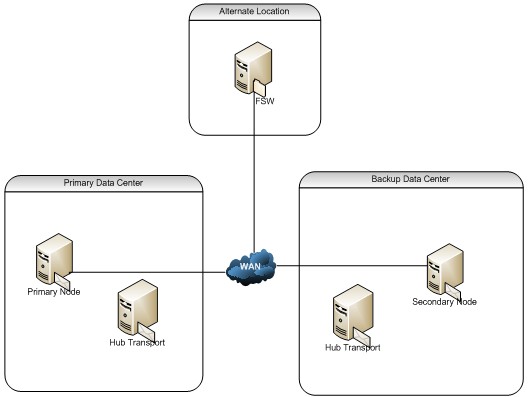

Using a 3rd Site to achieve Automatic Failover

If possible, you could use a 3rd site to host the FSW.

With the 3rd site, a failure at the Primary Data Center would allow an automatic failover to the Secondary Node, because the cluster keeps a majority of the voters (the Secondary Node and the FSW).
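
The difference between the two-site and three-site layouts can be illustrated by checking, for each layout, whether a majority of voters remains after a whole site is lost. The site names and the function `survives_site_loss` are assumptions for this sketch, and the model ignores everything except voter counts.

```python
from typing import Dict


def survives_site_loss(voter_sites: Dict[str, str], failed_site: str) -> bool:
    """Return True if the voters outside failed_site are still a majority."""
    remaining = sum(1 for site in voter_sites.values() if site != failed_site)
    return remaining > len(voter_sites) // 2


# Hypothetical layouts: FSW in the primary site vs. FSW in a 3rd site.
two_site = {"primary_node": "primary", "fsw": "primary", "secondary_node": "backup"}
three_site = {"primary_node": "primary", "fsw": "third", "secondary_node": "backup"}

for name, layout in (("two-site", two_site), ("three-site", three_site)):
    for failed in sorted(set(layout.values())):
        outcome = "majority kept" if survives_site_loss(layout, failed) else "majority lost"
        print(f"{name}: lose {failed} -> {outcome}")
```

In the two-site layout, losing the primary site removes two voters at once, which is the manual recovery case described earlier. In the three-site layout, no single site holds more than one voter, so any one site can fail without the cluster losing its majority.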
