Lessons from the Datacenter: Managed Availability

Monitoring is a key component for any successful deployment of Exchange. In previous releases, we invested heavily in developing a correlation engine and worked closely with the System Center Operations Manager (SCOM) product group to provide a comprehensive alerting solution for Exchange environments.

Traditionally, monitoring involved collecting data and if necessary performing an action based on the collected data. For example, in the context of SCOM, different mechanisms were used to collect data via the Exchange Management Pack:

| Type of Monitoring | Exchange 2010 |
| --- | --- |
| Service not running | Health manifest rule |
| Performance counter | Health manifest counter rule |
| Exchange events | Health manifest event rule |
| Non-Exchange events | Health manifest event rule |
| Scripts, cmdlets | Health manifest script rule |
| Test cmdlets | Test cmdlets |

Exchange Server 2013 Monitoring Goals

When we started the development of Exchange 2013, a key focus area was improving end-to-end monitoring for all Exchange deployments, from the smallest on-premises deployment to the largest deployment in the world, Office 365. We had three major goals in mind:

- Bring our knowledge and experience from the Office 365 service to on-premises customers. For nearly six years, the Exchange product group has been operating Exchange Online. The operations model we use is known as the developer operations model (DevOps). In this model, issues are escalated directly to the developer of a feature if that feature is performing badly within the service, or when a customer reports an unknown problem that is then escalated. Regardless of where the problem originates, escalating directly to the developer brings accountability to the development of the software, because the underlying software defects get addressed.

As a result of using this model, we have learned a lot. For example, in the Exchange 2010 Management Pack, there are almost 1100 alerts. But over the years, we found that only around 150 of those alerts are useful in Office 365 (and we disable the rest). In addition, we found that when a developer receives an escalation, they are more likely to be accountable and work toward resolving the issue (through a code fix, new recovery workflows, fine-tuning the alert, and so on) because the developer does not want to be continuously interrupted or woken up to troubleshoot the same problem over and over again. Within the DevOps model, we have a process where every week the on-calls hold a hand-off meeting to review the past week’s incidents; the result is that in-house recovery workflows are developed, such as resetting IIS application pools. Before Exchange 2013, we had our own in-house engine that handled these recovery workflows. This knowledge never made it into the on-premises products. With Exchange 2013, we have componentized the recovery workflow engine so that the learnings can be shared with our on-premises customers.

- Monitor based on the end user’s experience. We also learned that a lot of the methodologies we used for monitoring really didn’t help us to operate the environment. As a result, we are shifting to a customer-focused vision for how we approach monitoring.

In past releases, each component team would develop a health model by articulating all the various components within their system. For example, transport is made up of SMTP-IN, SMTP-OUT, transport agents, categorizer, routing engine, store driver, etc. The component team would then build alerts about each of these components. As a result, the Management Pack contains alerts that let you know that the store driver has failed. What is missing is that the alert doesn’t tell you about the end-to-end user experience, or what might be broken in that experience. So in Exchange 2013, we are attempting to turn the model upside down. We aren’t getting rid of our system-level monitoring, because that is important. But what is really important, if you want to manage a service, is what your users are seeing. So we flipped the model, and are endeavoring to monitor the user experience.

- Protect the user’s experience through recovery-oriented computing. With the previous releases of Exchange, monitoring was focused on the system and components, and not on how to recover and restore the end user experience automatically.

Monitoring Exchange Server 2013 - Managed Availability

Managed Availability is a monitoring and recovery infrastructure that is integrated with Exchange’s high availability solution. Managed Availability detects and recovers from problems as they occur and as they are discovered.

Managed Availability is user-focused. We want to measure three key aspects – the availability, the experience (which for most client protocols is measured as latency), and whether errors are occurring. To understand these three aspects, let’s look at an example, a user using Outlook Web App (OWA).

The availability aspect is simply whether or not the user can access the OWA forms-based authentication web page. If they can’t, then the experience is broken and that will generate a help desk escalation. In terms of experience, if they can log into OWA, what is the experience like: does the interface load, and can they access their mail? The last aspect is latency: the user is able to log in and access the interface, but how fast is the mail rendered in the browser? These are the areas that make up the end user experience.

One key difference between previous releases and Exchange 2013 is that in Exchange 2013 our monitoring solution is not attempting to deliver root cause (this doesn’t mean the data isn’t recorded in the logs, or dumps aren’t generated, or that root cause cannot be discovered). It is important to understand that with past releases, we were never effective at communicating root cause: sometimes we were right, but often we were wrong.

Components of Managed Availability

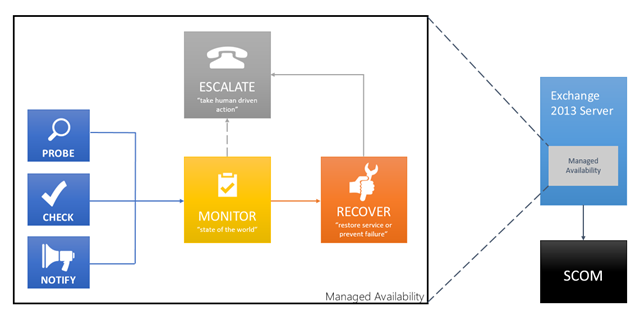

Managed Availability is built into both server roles in Exchange 2013. It includes three main asynchronous components. The first component is the probe engine. The probe engine’s responsibility is to take measurements on the server. This flows into the second component, which is the monitor. The monitor contains the business logic that encodes what we consider to be healthy. You can think of it as a pattern recognition engine; it looks for different patterns within the different measurements we have, and then decides whether something is considered healthy or not. Finally, there is the responder engine, which I have labeled as Recover in the diagram (Figure 1). When something is unhealthy, its first action is to attempt to recover that component. Managed Availability provides multi-stage recovery actions: the first attempt might be to restart the application pool, the second attempt might be to restart the service, the third attempt might be to restart the server, and the final attempt may be to take the server offline so that it no longer accepts traffic. If these attempts fail, Managed Availability then escalates the issue to a human through event log notification.

You may also notice we have decentralized a few things. In the past we had the SCOM agent on each server and we’d stream all measurements to a central SCOM server. The SCOM server would have to evaluate all the measurements to determine if something was healthy or not. In a high-scale environment, complex correlation runs hot, and we noticed that alerts would take longer to fire. Pushing everything to one central place didn’t scale. So instead we have each individual server act as an island: each server executes its own probes, monitors itself, takes action to self-recover, and, of course, escalates if needed.

Figure 1: Components of Managed Availability

Probes

The probe infrastructure is made up of three distinct frameworks:

- Probes are synthetic transactions that are built by each component team. They are similar to the test cmdlets in past releases. Probes measure the perception of the service by executing synthetic end-to-end user transactions.

- Checks are a passive monitoring mechanism. Checks measure actual customer traffic.

- The notify framework allows us to take immediate action and not wait for a probe to execute. That way, if we detect a failure, we can act on it right away. The notify framework is based on notifications. For example, when a certificate expires, a notification event is triggered, alerting operations that the certificate needs to be renewed.

Monitors

Data collected by probes are fed into monitors. There isn’t necessarily a one-to-one correlation between probes and monitors; many probes can feed data into a single monitor. Monitors look at the results of probes and come to a conclusion. The conclusion is binary – either the monitor is healthy or it is unhealthy.
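The many-to-one relationship between probes and a monitor can be sketched in a few lines of Python. This is only an illustration of the idea, not how Managed Availability is implemented: the `ProbeResult` shape, the probe names, and the `max_failures` threshold rule are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class ProbeResult:
    """One measurement taken by a probe (hypothetical shape)."""
    probe_name: str
    succeeded: bool


def monitor_is_healthy(results: Iterable[ProbeResult], max_failures: int = 0) -> bool:
    """Reduce many probe results to one binary conclusion.

    The rule used here (healthy unless more than max_failures results
    failed) is an assumption chosen for illustration.
    """
    failures = sum(1 for r in results if not r.succeeded)
    return failures <= max_failures


# Three probes (names are made up) feeding a single monitor.
results = [
    ProbeResult("OwaProtocolSelfTest", True),
    ProbeResult("OwaMailboxSelfTest", False),
    ProbeResult("OwaProxySelfTest", True),
]
print(monitor_is_healthy(results))                  # False
print(monitor_is_healthy(results, max_failures=1))  # True
```

Whatever rule a real monitor applies, the output stays binary: healthy or unhealthy.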

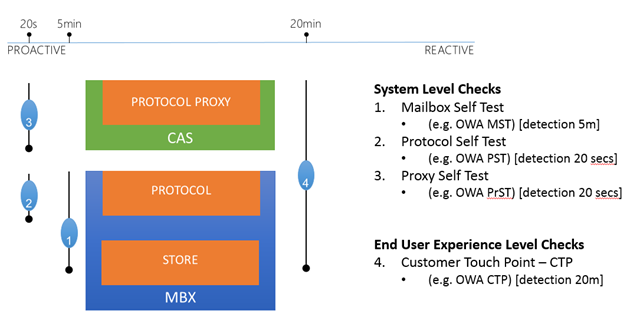

As mentioned previously, Exchange 2013 monitoring focuses on the end user experience. To accomplish that we have to monitor at different layers within the environment:

Figure 2: Monitoring at different layers to check user experience

As you can see from the above diagram, we have four different checks. The first check is the Mailbox self-test; this probe validates that the local protocol or interface can access the database. The second check is known as a protocol self-test and it validates that the local protocol on the Mailbox server is functioning. The third check is the proxy self-test and it executes on the Client Access server, validating that the proxy functionality for the protocol is functioning. The fourth and last check is the all-inclusive check that validates the end-to-end experience (protocol proxy to the store functions). Each check performs detection at different intervals.

We monitor at different layers to deal with dependencies. Because there is no correlation engine in Exchange 2013, we try to differentiate our dependencies with unique error codes that correspond to different probes and with probes that don’t touch dependencies. For example, if you see a Mailbox Self-Test and a Protocol Self-Test probe both fail at the same time, what does it tell you? Does it tell you the store is down? Not necessarily; what it does tell you is that the local protocol instance on the Mailbox server isn’t working. If you see a working Protocol Self-Test but a failed Mailbox Self-Test, what does that tell you? That scenario tells you that there is a problem in the “storage” layer, which may actually be that the store or database is offline.

What this means from a monitoring perspective is that we now have finer control over what alerts are issued. For example, if we are evaluating the health of OWA, we are more likely to delay firing an alert in the scenario where we have a failed Mailbox Self-Test but a working Protocol Self-Test; however, an alert would be fired if both the Mailbox Self-Test and Protocol Self-Test monitors are unhealthy.
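The reasoning about the two Mailbox server self-tests can be written down as a small decision function. The sketch below is an assumption-laden illustration in Python: the function name and the returned strings are invented here, and the one combination the text does not discuss is reported as such rather than guessed.

```python
def diagnose(mailbox_self_test_ok: bool, protocol_self_test_ok: bool) -> str:
    """Map two self-test outcomes to the layer the text points at."""
    if mailbox_self_test_ok and protocol_self_test_ok:
        return "healthy"
    if protocol_self_test_ok:
        # The protocol works locally but cannot reach the database.
        return "storage layer problem (store or database may be offline)"
    if not mailbox_self_test_ok:
        # Both failed: this alone does not prove the store is down.
        return "local protocol instance on the Mailbox server is not working"
    # Mailbox test passed while the protocol test failed: not discussed in the text.
    return "combination not covered by the article"


for mailbox_ok in (True, False):
    for protocol_ok in (True, False):
        print(mailbox_ok, protocol_ok, "->", diagnose(mailbox_ok, protocol_ok))
```

Separating the layers this way is what lets a monitor tell a protocol failure from a storage failure without a central correlation engine.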

Responders

Responders execute responses based on alerts generated by a monitor. Responders never execute unless a monitor is unhealthy.

There are several types of responders available:

- Restart Responder: terminates and restarts a service

- Reset AppPool Responder: cycles an IIS application pool

- Failover Responder: takes an Exchange 2013 Mailbox server out of service

- Bugcheck Responder: initiates a bugcheck of the server

- Offline Responder: takes a protocol on a machine out of service

- Escalate Responder: escalates an issue

- Specialized Component Responders

The offline responder is used to remove a protocol from use on the Client Access servers. This responder has been designed to be load balancer-agnostic. When this responder is invoked, the protocol will not acknowledge the load balancer health check, thereby enabling the load balancer to remove the server or protocol from the load balancing pool. Likewise, there is a corresponding online responder that is automatically initiated once the corresponding monitor becomes healthy again (assuming there are no other associated monitors in an unhealthy state) – the online responder simply allows the protocol to respond to the load balancer health check, which enables the load balancer to add the server or protocol back into the load balancer pool. The offline responder can be invoked manually via the Set-ServerComponentState cmdlet, as well. This enables administrators to manually put Client Access servers into maintenance mode.

When the escalate responder is invoked, it generates a Windows event that the Exchange 2013 Management Pack recognizes. It isn’t a normal Exchange event. It’s not an event that says OWA is broken or we’ve had a hard IO. It’s an Exchange event that reports that a health set is unhealthy or healthy. We use single-instance events like that to manipulate the monitors inside SCOM. And we’re doing this based on an event generated in the escalate responder, as opposed to events spread throughout the product. Another way to think about it is as a level of indirection. Managed Availability decides when we flip a monitor inside SCOM. Managed Availability makes the decision as to when an escalation should occur, or in other words, when a human should get engaged.

Responders can also be throttled to ensure that the entire service isn’t compromised. Throttling differs depending on the responder:

- Some responders take into account the minimum number of servers within the DAG or load-balanced CAS pool.

- Some responders take into account the amount of time between executions.

- Some responders take into account the number of times the responder has been initiated.

- Some may use any combination of the above.

Depending on the responder, when throttling occurs, the responder’s action may be delayed or simply skipped.
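To make the three throttling criteria concrete, here is a minimal Python sketch of a throttle that combines all of them. It is not the product's throttling logic: the class, its field names, and every number in the usage lines are hypothetical, and a throttled action is simply skipped rather than delayed.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Throttle:
    """Hypothetical throttle combining the three criteria listed above."""
    min_seconds_between_runs: float
    max_runs: int
    min_servers_in_service: int
    run_times: List[float] = field(default_factory=list)

    def allow(self, now: float, servers_in_service: int) -> bool:
        """Return True and record the run if the responder may act now."""
        # Criterion 1: acting must not leave too few servers in the pool.
        if servers_in_service - 1 < self.min_servers_in_service:
            return False
        # Criterion 3: cap on how many times the responder has been initiated.
        if len(self.run_times) >= self.max_runs:
            return False
        # Criterion 2: minimum time between executions.
        if self.run_times and now - self.run_times[-1] < self.min_seconds_between_runs:
            return False
        self.run_times.append(now)
        return True


throttle = Throttle(min_seconds_between_runs=600, max_runs=2, min_servers_in_service=2)
print(throttle.allow(now=0, servers_in_service=4))     # True
print(throttle.allow(now=300, servers_in_service=4))   # False (too soon)
print(throttle.allow(now=700, servers_in_service=4))   # True
print(throttle.allow(now=2000, servers_in_service=4))  # False (run limit reached)
```

A responder that delays instead of skipping would retry once `allow` returns True.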

Recovery Sequences

It is important to understand that the monitors define the types of responders that are executed and the timeline in which they are executed; this is what we refer to as a recovery sequence for a monitor. For example, let’s say the probe data for the OWA protocol (the Protocol Self-Test) triggers the monitor to be unhealthy. At this point the current time is saved (we’ll refer to this as T). The monitor starts a recovery pipeline that is based on current time. The monitor can define recovery actions at named time intervals within the recovery pipeline. In the case of the OWA protocol monitor on the Mailbox server, the recovery sequence is:

- At T=0, the Reset IIS Application Pool responder is executed.

- If at T=5 minutes the monitor hasn’t reverted to a healthy state, the Failover responder is initiated and databases are moved off the server.

- If at T=8 minutes the monitor hasn’t reverted to a healthy state, the Bugcheck responder is initiated and the server is forcibly rebooted.

- If at T=15 minutes the monitor still hasn’t reverted to a healthy state, the Escalate responder is triggered.

The recovery sequence pipeline will stop when the monitor becomes healthy. Note that the last named time action doesn’t have to complete before the next named time action starts. In addition, a monitor can have any number of named time intervals.
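The OWA recovery sequence above can be modeled as a list of (offset, responder) pairs that is walked until the monitor becomes healthy. The following Python sketch uses the offsets and responder names from the example; the function, its parameter, and the choice to ignore throttling and responder run time are assumptions made for illustration.

```python
from typing import List, Optional, Tuple

# (minutes after T, responder), taken from the OWA example above.
OWA_RECOVERY_SEQUENCE: List[Tuple[int, str]] = [
    (0, "Reset IIS Application Pool"),
    (5, "Failover"),
    (8, "Bugcheck"),
    (15, "Escalate"),
]


def responders_started(
    sequence: List[Tuple[int, str]],
    minutes_until_healthy: Optional[float] = None,
) -> List[str]:
    """List the responders that start before the monitor turns healthy.

    minutes_until_healthy=None means the monitor never recovers.
    Throttling and the time a responder takes to run are ignored.
    """
    started = []
    for offset, responder in sequence:
        if minutes_until_healthy is not None and offset >= minutes_until_healthy:
            break  # the pipeline stops once the monitor is healthy
        started.append(responder)
    return started


print(responders_started(OWA_RECOVERY_SEQUENCE, minutes_until_healthy=3))
# ['Reset IIS Application Pool']
print(responders_started(OWA_RECOVERY_SEQUENCE, minutes_until_healthy=6))
# ['Reset IIS Application Pool', 'Failover']
print(responders_started(OWA_RECOVERY_SEQUENCE))
# ['Reset IIS Application Pool', 'Failover', 'Bugcheck', 'Escalate']
```

Because the sequence is plain data, a monitor with more or fewer named time intervals only needs a different list.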

System Center Operations Manager (SCOM)

System Center Operations Manager (SCOM) is used as a portal to see health information related to the Exchange environment. Unhealthy states within the SCOM portal are triggered by events written to the Application log via the Escalate Responder. The SCOM dashboard has been refined and now has three key areas:

- Active Alerts

- Organization Health

- Server Health

The Exchange Server 2013 SCOM Management Pack will be supported with SCOM 2007 R2 and SCOM 2012.

Overrides

With any environment, defaults may not always be the optimum condition, or conditions may exist that require an emergency action. Managed Availability includes the ability to enable overrides for the entire environment or on an individual server. Each override can be set for a specified duration or to apply to a specific version of the server. The *-ServerMonitoringOverride and *-GlobalMonitoringOverride cmdlets enable administrators to set, remove, or view overrides.

Health Determination

Monitors that are similar or are tied to a particular component’s architecture are grouped together to form health sets. The health of a health set is always determined by the “worst of” evaluation of the monitors within the health set – this means that if you have 9 monitors within a health set and 1 monitor is unhealthy, then the health set is considered unhealthy. You can determine the collection of monitors (and associated probes and responders) in a given health set by using the Get-MonitoringItemIdentity cmdlet.
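The “worst of” rule is small enough to state as code. This Python sketch mirrors the nine-monitor example from the paragraph above; the function name, the monitor names, and the two state strings are illustrative assumptions, not output of the Exchange cmdlets.

```python
from typing import Dict


def health_set_state(monitor_healthy: Dict[str, bool]) -> str:
    """Apply the 'worst of' rule: one unhealthy monitor taints the whole set."""
    return "Healthy" if all(monitor_healthy.values()) else "Unhealthy"


# Nine monitors (names are made up), eight healthy and one unhealthy.
monitors = {f"Monitor{i}": True for i in range(1, 10)}
monitors["Monitor4"] = False

print(health_set_state(monitors))  # Unhealthy
```

Flipping `Monitor4` back to `True` makes the same call return `Healthy`.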

To view health, you use the Get-ServerHealth and Get-HealthReport cmdlets. Get-ServerHealth is used to retrieve the raw health data, while Get-HealthReport operates on the raw health data and provides a current snapshot of the health. These cmdlets can operate at several layers:

- They can show the health for a given server, breaking it down by health set.

- They can be used to dive into a particular health set and see the status of each monitor.

- They can be used to summarize the health of a given set of servers (DAG members, or load-balanced array of CAS).

Health sets are further grouped into functional units called Health Groups. There are four Health Groups and they are used for reporting within the SCOM Management Portal:

- Customer Touch Points – components with direct real-time, customer interactions (e.g., OWA).

- Service Components – components without direct, real-time, customer interaction (e.g., OAB generation).

- Server Components – physical resources of a server (e.g., disk, memory).

- Dependency Availability – server’s ability to call out to dependencies (e.g., Active Directory).

Conclusion

Managed Availability performs a variety of health assessments within each server. These regular, periodic tests determine the viability of various components on the server, which establish the health of the server (or set of servers) before and during user load. When issues are detected, multi-step corrective actions are taken to bring the server back into a functioning state; in the event that the server is not returned to a healthy state, Managed Availability can alert operators that attention is needed.

The end result is that Managed Availability focuses on the user experience and ensures that, while issues may occur, the experience is minimally impacted, if at all.

| Ross Smith IV | Greg "The All-father of Exchange HA" Thiel |
