Cloud Computing - Data Science and Mining Team, Laboratoire d'informatique de l'École polytechnique

The DaSciM team is part of the Computer Science Laboratory (LIX) of École Polytechnique. In previous years, we have conducted research in the areas of databases and data mining, more specifically in unsupervised learning (clustering algorithms and validity measures), advanced data management and indexing (P2P systems, distributed indexing, distributed dimensionality reduction), text mining (word disambiguation for classification, and the Graph of Words approach, which we introduced) and ranking algorithms (temporal extensions to PageRank). More recently, we have worked on large-scale graph mining (degeneracy-based community detection and evaluation), as well as text mining and retrieval for web advertising/marketing and recommendations.

Moreover, our group has long experience in real-world R&D projects in large-scale data, text and time-series mining. We currently maintain collaborations with industrial partners (including AIRBUS, Google, BNP, Tencent and Tradelab) on machine learning projects.

Data Science and Cloud Computing

Over the last decade we have been moving through the Big Data era, in which numerous services and applications generate huge volumes of data. As a result, traditional data science is adapting in order to examine and process these volumes, and the requirements in terms of algorithmic complexity and hardware resources are gradually increasing.

The huge volumes of data and the high computational capabilities available led to the rise of deep learning. Neural networks currently constitute the state of the art in most data mining fields, as more and more groundbreaking publications capitalize on their success. However, training deep learning models usually requires huge data sets, and running such large, complex jobs on a CPU takes a lot of time. The usual workaround is to use GPUs, which execute many computations in parallel and can therefore complete these jobs in a much shorter time. This trend is still recent and requires hardware upgrades, so it is still developing.

Cloud computing can assist data science by making it possible to keep up with the state of the art and to work with big data without frequent hardware upgrades. These features, along with the shareability that cloud resources offer, are extremely useful in an academic setting, where students have very limited resources.

DaSciM and Microsoft Azure Services

To improve the quality of teaching and allow students to work with real-world data sets and problems, our team augmented the course material with cloud-based support for a couple of use cases.

Use case 1a: Group Lab Projects

One of the most popular types of assignment is the group project. Students are divided into groups and asked to cooperate. Cloud computing gives students access to virtual machines with identical specifications, without having to worry about resources. Group members can easily collaborate on one machine without the need for external services.

Usually, these projects are based either on natural language processing (e.g. using predictive models such as XGBoost [1] or Random Forest [3]) or on graphs (e.g. DeepWalk [4] or node2vec [2]). As such, we mostly use Python with tools like NumPy, SciPy, scikit-learn, Gensim and NetworkX. To increase student engagement, we run in-class Kaggle competitions. In such competitions, groups get access to a portion of the data for training and testing and compete with each other. When the competition finishes, the groups are ranked on a held-out, hidden part of the data. This ranking constitutes a small part of the grade; the rest is based on the actual solution and its documentation.
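The ranking step of such a competition can be sketched in a few lines of Python. This is a minimal sketch, not the platform's actual implementation: in the spirit of Kaggle's public/private leaderboard split, it assumes the labelled test items are randomly divided into a visible part (scored while the competition runs) and a hidden part (used for the final ranking), and it assumes mean squared error as the metric. The function names `split_public_private`, `mse` and `rank_groups` are hypothetical.

```python
import random

def split_public_private(ids, public_fraction=0.3, seed=0):
    """Randomly split test-set ids into a public part (scored during the
    competition) and a private, hidden part (used for the final ranking)."""
    rng = random.Random(seed)  # fixed seed: the split is reproducible
    shuffled = list(ids)
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * public_fraction)
    return set(shuffled[:cut]), set(shuffled[cut:])

def mse(y_true, y_pred, ids):
    """Mean squared error computed only over the given ids (assumed metric)."""
    return sum((y_true[i] - y_pred[i]) ** 2 for i in ids) / len(ids)

def rank_groups(y_true, submissions, ids):
    """Order group names from best (lowest error) to worst on the given ids."""
    scores = {group: mse(y_true, preds, ids) for group, preds in submissions.items()}
    return sorted(scores, key=scores.get)

if __name__ == "__main__":
    # Toy data: 100 test items and two hypothetical groups' predictions.
    y_true = {i: float(i % 5) for i in range(100)}
    submissions = {
        "group_a": {i: y_true[i] + 0.1 for i in range(100)},
        "group_b": {i: y_true[i] + 1.0 for i in range(100)},
    }
    public_ids, private_ids = split_public_private(y_true.keys())
    print(rank_groups(y_true, submissions, public_ids))   # ['group_a', 'group_b']
    print(rank_groups(y_true, submissions, private_ids))  # ['group_a', 'group_b']
```

Fixing the seed keeps the split reproducible, so every group is scored against the same hidden subset, and ranking on data the groups never saw discourages overfitting to the visible leaderboard.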

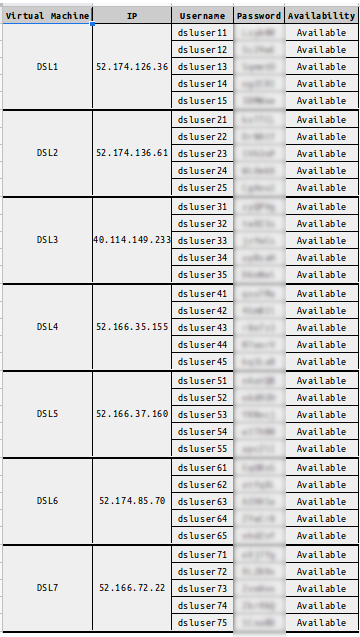

The process we followed in the past was to create a number of virtual machines, each capable of serving up to 4 groups. The hardware specification of each machine depends on the task, but we always prefer the Ubuntu-based Data Science image. As an example, the table that follows is the one we used to distribute the virtual machines among the groups.

Our immediate plan is to replace this process with Azure Lab Services, which was introduced last year. This will allow us to better manage and coordinate such scenarios while also engaging the students with cloud computing.

Use case 1b: Resource-demanding Lab Projects

Because our team works extensively with deep learning, using GPUs is indispensable. In most cases, students lack access to a GPU and are therefore unable to use some of the popular deep learning algorithms. Although we avoid assigning deep learning projects that cannot be executed on a CPU, we encourage students to ask us for access to GPU resources to experiment with. Regarding frameworks, our assignments and teaching are based on Python and either Keras or TensorFlow.

In a recent assignment, students had to solve a multi-target graph regression problem with a deep learning architecture for natural language processing, the Hierarchical Attention Network (HAN) [5]. As usual, the assignment took the form of an in-class Kaggle competition in which students competed with each other. The task required students to use and modify (e.g. by adding layers or connections) the provided Keras-based HAN architecture, which took a couple of hours to train on a CPU.
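For readers unfamiliar with HAN [5], its core mechanism is attention pooling applied at two levels: over word representations to build sentence vectors, then over sentence vectors to build a document vector. The sketch below is a simplified, framework-free illustration in plain Python, not the Keras implementation used in the assignment. It assumes the input vectors already stand in for the hidden states that HAN obtains from its bidirectional GRU encoders (the encoders are omitted), and the helper names are hypothetical. At each level it computes `u_t = tanh(W h_t + b)`, weights `alpha_t = softmax(u_t . context)` with a context vector, and returns the weighted sum of the `h_t`.

```python
import math

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(H, W, b, context):
    """HAN-style attention pooling over the vectors in H.

    u_t = tanh(W h_t + b); alpha = softmax(u_t . context);
    returns (sum_t alpha_t * h_t, alpha).
    """
    u = [[math.tanh(z + bi) for z, bi in zip(matvec(W, h), b)] for h in H]
    alpha = softmax([sum(ui * ci for ui, ci in zip(u_t, context)) for u_t in u])
    dim = len(H[0])
    pooled = [sum(a * h[k] for a, h in zip(alpha, H)) for k in range(dim)]
    return pooled, alpha

def han_document_vector(doc, word_params, sent_params):
    """doc: list of sentences, each a list of word vectors.

    Assumption (simplification): word vectors stand in for the BiGRU hidden
    states of the real HAN, and sentence vectors are pooled directly, with no
    sentence-level encoder. word_params / sent_params are (W, b, context).
    """
    sentence_vectors = [attention_pool(sentence, *word_params)[0] for sentence in doc]
    doc_vector, _ = attention_pool(sentence_vectors, *sent_params)
    return doc_vector

if __name__ == "__main__":
    I = [[1.0, 0.0], [0.0, 1.0]]  # toy, hand-set parameters (normally learned)
    params = (I, [0.0, 0.0], [1.0, 0.0])
    doc = [[[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.5]]]
    print(han_document_vector(doc, params, params))
```

For a multi-target regression task like the one described, the resulting document vector would typically feed a dense output layer with one unit per target, and `W`, `b` and the context vectors would be learned by backpropagation rather than set by hand as in this toy example.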

Use case 2: Resource-demanding Research

Since our team is also actively involved in research on big data, we have to deal with real-world data sets that are far more demanding than expected. As a recent example, we had to handle a data set occupying over 300 GB of disk space. It could not be processed on a single workstation, because it had to fit in memory as a whole, and distributed computing was not an option, because the algorithms we used were not designed for it.

Future steps

In the future, we plan to expand the range of Azure services we use. Azure Lab Services seems quite useful for lab environments such as ours, and Azure Notebooks with a centralized server (such as The Littlest JupyterHub) could improve our lab lectures by eliminating software installation problems. Finally, we also plan to examine the recently released Azure Machine Learning Studio in order to decide whether it fits our teaching environment.

[1] Chen, Tianqi, and Carlos Guestrin. "XGBoost: A scalable tree boosting system." Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. ACM, 2016.

[2] Grover, Aditya, and Jure Leskovec. "node2vec: Scalable feature learning for networks." Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. ACM, 2016.

[3] Liaw, Andy, and Matthew Wiener. "Classification and regression by randomForest." R News 2.3 (2002): 18-22.

[4] Perozzi, Bryan, Rami Al-Rfou, and Steven Skiena. "DeepWalk: Online learning of social representations." Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. ACM, 2014.

[5] Yang, Zichao, et al. "Hierarchical attention networks for document classification." Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. 2016.