How we upgrade 300k ConfigMgr clients in 10 days

Client Health

It all starts with ConfigMgr client health. If clients aren't healthy, getting them to upgrade is hard. Getting and keeping clients healthy is a daily activity that we have people focused on, and it gives us a good foundation to start from.

Early testing

When we roll out a new build, the first thing we do is send it to our test hierarchy, which we refer to as "PPE". We upgrade the hierarchy and then run automated tests against that environment covering various areas, including ConfigMgr client health. We test two scenarios: first, an existing client interacting with an upgraded server side (site, Management Point, Distribution Point, etc.), and then a client that has been upgraded to the new client bits going through basic functionality tests. Those automated tests take about 12 minutes to run. We refer to them as our Quality Control, or "QC", tests.
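As a rough illustration of the two-scenario structure described above, here is a minimal Python sketch. It is not our actual QC tooling: the `Scenario` class, the `run_qc` function, and the placeholder checks are all hypothetical names invented for this example. It only shows the idea of running the same set of checks once per scenario and collecting the failures.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Scenario:
    """One QC scenario. The server side is upgraded in both cases."""
    name: str
    client_upgraded: bool


# The two scenarios from the text: old client vs. new client,
# each talking to an already-upgraded server side.
SCENARIOS = [
    Scenario("existing client, upgraded server", client_upgraded=False),
    Scenario("upgraded client, upgraded server", client_upgraded=True),
]

# A check takes a scenario and reports pass (True) or fail (False).
Check = Callable[[Scenario], bool]


def run_qc(checks: Dict[str, Check]) -> List[str]:
    """Run every check in every scenario and return a list of failures."""
    failures = []
    for scenario in SCENARIOS:
        for check_name, check in checks.items():
            if not check(scenario):
                failures.append(f"{scenario.name}: {check_name}")
    return failures


if __name__ == "__main__":
    # Placeholder checks (hypothetical); real ones would query the client.
    checks = {
        "client reports healthy": lambda s: True,
        "policy retrieval works": lambda s: True,
    }
    print(run_qc(checks) or "all QC checks passed")
```

Keeping the scenarios as data means a third scenario could be added without touching the checks themselves.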

Production testing

Once we upgrade our production (PROD) hierarchy, we use the product's pre-production client functionality to target the new ConfigMgr client at a small subset of actual production machines. These are actual Microsoft employee machines as well as a few test machines. On those test machines we again run our QC tests, which takes about another 12 minutes. Combined with all our other QC tests (run in PPE and PROD), we reach the point of being ready for full client deployment in about 5 hours. Because of everything else we have going on, combined with a little caution and risk management, we usually let things sit for a day post upgrade.
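The timing above can be sketched as a simple gate: promotion waits for whichever is longer, the QC run or the one-day waiting period. This is only an illustration in Python. The function names are hypothetical, and it assumes that the roughly 5 hours of QC are measured from the production upgrade, which the text does not state explicitly.

```python
from datetime import datetime, timedelta

QC_DURATION = timedelta(hours=5)  # "ready for full client deployment in about 5 hours"
SOAK_PERIOD = timedelta(days=1)   # "let things sit for a day post upgrade"


def earliest_promotion(upgraded_at: datetime,
                       qc_duration: timedelta = QC_DURATION,
                       soak: timedelta = SOAK_PERIOD) -> datetime:
    """Earliest time at which both the QC run and the soak period are over."""
    return upgraded_at + max(qc_duration, soak)


def ready_to_promote(upgraded_at: datetime, now: datetime, qc_passed: bool) -> bool:
    """True only if QC passed and the waiting period has elapsed."""
    return qc_passed and now >= earliest_promotion(upgraded_at)


if __name__ == "__main__":
    upgraded = datetime(2024, 1, 1, 9, 0)  # example timestamp, not real data
    print("Earliest promotion:", earliest_promotion(upgraded))
```

With these defaults the one-day wait is always the limiting factor, which matches the point that the delay is a matter of caution rather than test duration.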

6 day push (kind of)

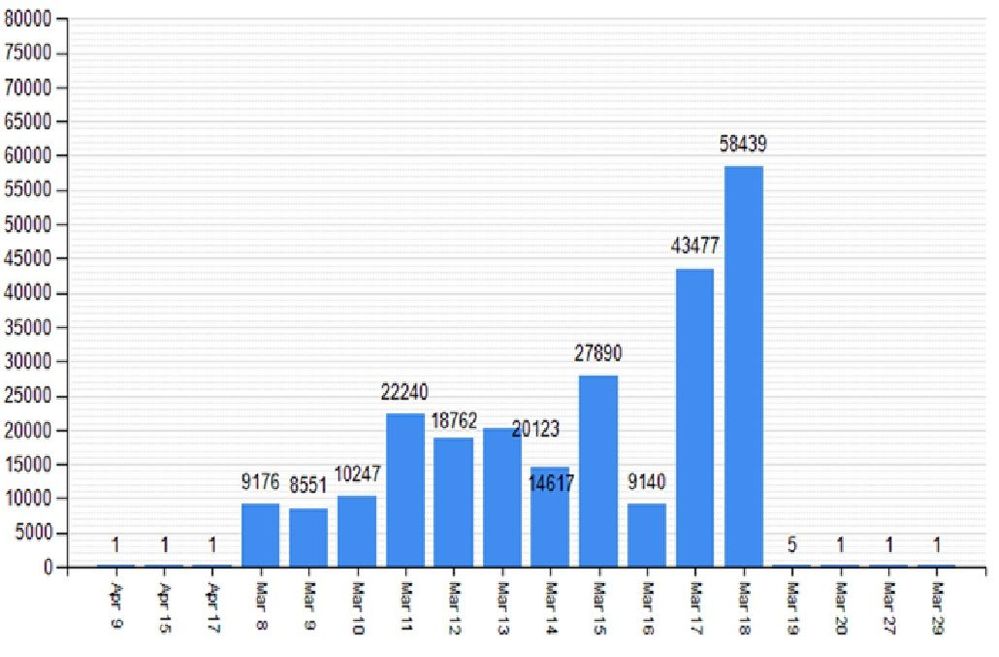

The day after upgrade we check our telemetry, such as the ConfigMgr client status in the ConfigMgr admin console, and if it all looks good then we promote the new client to be our production client. Prior to the hierarchy upgrade we had disabled auto-client upgrades so it is at this point that we enable it again. We have it set to upgrade over 6 days, which we find as reasonable for our ~300,000 clients. We will see a surge of traffic against our Management Point and, lately, our Cloud Management Gateway (CMG) but we are built to expect this and prepared to scale up servers if it becomes necessary. There is a small trick here, however. We used to set to 10 days but didn't reach the numbers we wanted to hit in those 10 days. By setting the system for 6 days we find that we force clients to schedule their upgrades but then machines that are off for weekends or traveling and offline and our "trailing machines" are less after 10 days. Here is an example of what the outcome ends up being for us:

This leaves us with a "long tail" to chase. The last 10-20% of machines often either belong to people who are on vacation or are completely gone but still in our system (being a software development company means we have a lot of test machines in our environment).
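To see why a shorter window can leave fewer trailing machines by day 10, here is a toy model in Python. It is a sketch under loudly stated assumptions, not a description of how ConfigMgr schedules upgrades internally: it assumes each client is scheduled on a day chosen uniformly within the window, that a client upgrades on the first day on or after its scheduled day on which it is online, and that it is online on any given day with a fixed, independent probability. The 70% online probability is an invented illustration value, not a measurement. The model also ignores machines that are gone for good, so it does not reproduce the real long tail.

```python
def daily_scheduled(total_clients: int, window_days: int) -> float:
    """Average number of clients scheduled per day if spread evenly."""
    return total_clients / window_days


def fraction_remaining(window_days: int, check_day: int, p_online: float) -> float:
    """Expected fraction of clients not yet upgraded at the end of check_day.

    Toy model (assumption): scheduled day is uniform over 1..window_days;
    a client upgrades on the first day >= its scheduled day that it is online.
    """
    p_offline = 1.0 - p_online
    total = 0.0
    for scheduled in range(1, window_days + 1):
        if scheduled > check_day:
            total += 1.0  # not scheduled yet, so certainly not upgraded
        else:
            chances = check_day - scheduled + 1  # days it could have upgraded
            total += p_offline ** chances        # offline on every one of them
    return total / window_days


if __name__ == "__main__":
    clients = 300_000
    for window in (6, 10):
        remaining = fraction_remaining(window, check_day=10, p_online=0.7)
        print(f"{window}-day window: about {daily_scheduled(clients, window):,.0f} "
              f"clients scheduled per day, {remaining:.2%} expected to remain after day 10")
```

The trade-off shows up in both numbers: the 6-day window schedules about 50,000 clients per day instead of 30,000, which is the heavier load on the Management Point and CMG, but every client gets at least five chances to be online before day 10 ends.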
