
Best practices for designing a Microsoft Sentinel or Azure Defender for Cloud workspace


Note: a lot has been updated since this article was written. We now have official guidelines in our documentation: Extend Microsoft Sentinel across workspaces and tenants. You may also want to review the Webinar on this topic: MP4, YouTube, Presentation

A workspace design decision tree can be found here, and workspace design samples can be found here.

Executive Summary

When you register the Microsoft.Security Resource Provider (RP) for a subscription and want to start using Microsoft Defender for Cloud or when you want to use Microsoft Sentinel, you are confronted with workspace design choices which will affect your experience going forward.

The top 8 best practices for an optimal Log Analytics workspace design:

- Use as few Log Analytics workspaces as possible; consolidate as much as you can into a “central” workspace

- Avoid bandwidth costs by creating “regional” workspaces so that the sending Azure resource is in the same Azure region as your workspace

- Explore Log Analytics RBAC options like “resource centric” and “table level” RBAC before creating a workspace based on your RBAC requirements

- Consider “Table Level” retention when you need different retention settings for different types of data

- Use ARM templates to deploy your Virtual Machines, including the deployment and configuration of the Log Analytics VM extension. Ensure alignment with Azure Policy assignments to avoid conflicts

- Use Azure Policy to enforce compliance for installing and configuring Log Analytics VM extension. Ensure alignment with your DevOps team if using ARM templates

- Avoid multi-homing, as it can have undesired outcomes. Strive to meet data separation requirements by applying proper RBAC instead

- Be selective in installing Azure monitoring solutions to control ingestion costs

Choosing the right technical design versus the right licensing model

In the on-premises world, a technical design is predominantly CapEx driven. In a pay-as-you-go model, such as Azure, it is primarily OpEx driven. OpEx is more likely to drive a Log Analytics workspace design based on projected costs for data sent and ingested. This is a valid concern, but if wrongly addressed it can have a negative OpEx outcome because of operational complexities when using the data in Microsoft Defender for Cloud or Microsoft Sentinel. These additional OpEx costs are often hidden and are less visible than your monthly bill. This document aims to address those complexities to help you make the right design choice.

Please refer to Designing your Azure Monitor Logs deployment for additional recommended reading.

1. Use as few Log Analytics workspaces as possible

Recommendation: Use 1 or more central (regional) workspace(s)

Having a single workspace is technically the best choice. It provides the following benefits:

- All data resides in one place

- Efficient, fast and easy correlation of your data

- Full support of creating analytics rules for Microsoft Sentinel

- 1 RBAC and delegation model to design

- Simplified dashboard authoring, using Azure Workbooks, avoiding cross-workspace queries

- Easier manageability and deployment of the Azure Monitor VM extension, because you know which resource is sending data to which workspace

- Prevents autonomous workspace sprawl

- 1 Clear licensing model, versus a mix of free and paid workspaces

- Getting insights in costs and consumption is easier with one workspace

Having one single workspace also has the following (current) disadvantages:

- 1 Licensing model - this can be a disadvantage if you do not need long-term storage for specific data types*

- All data share the same retention settings*

- Configuring fine grained RBAC requires more effort

- If data is sent from Virtual Machines outside the Azure data center region where your workspace resides, it will incur costs

- Charge back is harder, versus every business unit having their own workspace

*) Table retention support is on the roadmap

2. Avoid bandwidth costs by creating “Regional” workspaces

Recommendation: Locate your workspace in the same region as your Azure resources

If your Azure resources are deployed outside your Azure workspace region, additional bandwidth costs will negatively affect your monthly Azure bill.

Azure Regions: A region is a set of datacenters deployed within a latency-defined perimeter and connected through a dedicated regional low-latency network.

All inbound (ingress) data transfers to Azure data centers from, for example, on-premises resources or other clouds are free. However, outbound (egress) data transfers from one Azure region to another incur charges.

At the time of writing, there are 54 regions available in 140 countries. Please check this link for the latest updates.

Pricing information for outbound traffic between regions can be found here. For example, sending data from West Europe to North Europe will be charged.
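To make the effect on the monthly bill concrete, the following is a minimal sketch of the arithmetic. The daily volume and the per-GB price are hypothetical placeholders, not actual Azure prices, and the function name is made up for this illustration; use the pricing page for real numbers.

```python
def estimate_monthly_egress_cost(gb_per_day: float, price_per_gb: float, days: int = 30) -> float:
    """Estimate the inter-region transfer cost of sending logs to a workspace in another region."""
    if gb_per_day < 0 or price_per_gb < 0 or days < 0:
        raise ValueError("inputs must be non-negative")
    return round(gb_per_day * price_per_gb * days, 2)


# Hypothetical figures: 50 GB of logs per day at an illustrative price of 0.02 per GB.
cross_region = estimate_monthly_egress_cost(gb_per_day=50, price_per_gb=0.02)

# A workspace in the same region as the sending resources avoids the inter-region charge.
same_region = estimate_monthly_egress_cost(gb_per_day=50, price_per_gb=0.0)

print(f"cross-region: {cross_region}, same region: {same_region}")
```

The point of the sketch is only that the charge scales linearly with the volume sent across regions, which is why a regional workspace removes it.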

The following illustration shows a Log Analytics design based on regional workspaces:

3. Explore Log Analytics RBAC options

Designing the appropriate RBAC model for a Log Analytics workspace before the actual deployment is key. Test-driving your RBAC requirements, mapped to roles and permissions, in a Proof of Concept (PoC) environment provides good operational insight.

Multi-homing your agents (discussed later in this document), in order to separate data, is one of the use cases affecting your workspace design. When using a central workspace, there are two options which will support the requirement that specific data types are not accessible by unauthorized personas:

- Table Level RBAC - allows you to delegate permission based on a specific data type, like Security Events

- Resource Centric RBAC - only provides access to the data if the user has access to the resource, as shown in the screenshot below where the viewer has VM reader access:

Fig. 2 - Log Analytics Resource Centric RBAC - projected by accessing a VM
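The difference between the two options can be pictured with a small toy model. This is a simplified illustration only, not how Azure evaluates permissions; the record fields, resource names and function names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogRecord:
    resource: str  # resource that emitted the record (hypothetical names)
    table: str     # data type, for example "SecurityEvent"


def resource_centric_view(records, readable_resources):
    """Resource-centric idea: a user only sees logs of resources they can read."""
    return [r for r in records if r.resource in readable_resources]


def table_level_view(records, allowed_tables):
    """Table-level idea: a user only sees the data types delegated to them."""
    return [r for r in records if r.table in allowed_tables]


records = [
    LogRecord("vm-web-01", "Perf"),
    LogRecord("vm-web-01", "SecurityEvent"),
    LogRecord("dc-01", "SecurityEvent"),
]

# A helpdesk user with reader access to vm-web-01 only.
helpdesk_by_resource = resource_centric_view(records, {"vm-web-01"})

# A helpdesk user who was delegated the Perf table only.
helpdesk_by_table = table_level_view(records, {"Perf"})

print(len(helpdesk_by_resource), len(helpdesk_by_table))
```

In both cases the domain controller's security events stay out of reach without creating a second workspace.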

4. Consider “Table Level” retention

“Table Level” retention will allow you to apply a different retention setting for specific Log Analytics tables. This eliminates the need to create a separate workspace when you need different retention settings for specific data types. For example, if you need to comply with a regulation which dictates that you need to save security alerts for 2 years, but there is no need to save performance counters longer than 7 days, then Table Level retention is going to solve that challenge.

Please note that Table Level retention is not yet available and is on our backlog.
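As a conceptual sketch of the idea, per-table retention can be thought of as a lookup with a workspace-wide fallback. The table names, the 90-day default and the helper names are assumptions made for this illustration; this does not reflect an actual Azure API.

```python
DEFAULT_RETENTION_DAYS = 90  # hypothetical workspace-wide default

# Hypothetical per-table overrides, following the example in the text.
TABLE_RETENTION_DAYS = {
    "SecurityAlert": 730,  # keep security alerts for 2 years
    "Perf": 7,             # keep performance counters for 7 days
}


def retention_days_for(table: str) -> int:
    """Return the table's own retention, or the workspace default if none is set."""
    return TABLE_RETENTION_DAYS.get(table, DEFAULT_RETENTION_DAYS)


def is_expired(table: str, age_days: int) -> bool:
    """A record is past retention once it is older than the table's setting."""
    return age_days > retention_days_for(table)


print(retention_days_for("SecurityAlert"), retention_days_for("Perf"), retention_days_for("Heartbeat"))
```

With a single workspace-wide setting, both data types would have to be kept for the longest period any regulation demands.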

5. Use ARM templates to deploy your Virtual Machines

Recommendation: Use ARM templates to deploy your Virtual Machines, including the installation and configuration of the Log Analytics VM extension

Part of your Log Analytics workspace design is how your agents are deployed and configured. The recommended agent deployment type for Azure Virtual Machines is using the Log Analytics VM extension. The main reason is that a VM extension is deployed and managed by Azure Resource Manager (ARM) and fully supports a CI/CD pipeline DevOps scenario. When using the Log Analytics Agent not installed through the VM extension, updating the agent - or configuring the Log Analytics workspace settings - requires direct interaction with the Virtual Machine. This is not necessary when using the VM extension. ARM templates - where you declare your deployment - are idempotent, meaning that you can repeatedly deploy the same ARM template without breaking anything.

The following snippet from the resources section of an ARM template shows how to include the Log Analytics VM extension as part of the VM deployment and provide the workspace settings:

```json
{
  "type": "extensions",
  "name": "OMSExtension",
  "apiVersion": "[variables('apiVersion')]",
  "location": "[resourceGroup().location]",
  "dependsOn": [
    "[concat('Microsoft.Compute/virtualMachines/', variables('vmName'))]"
  ],
  "properties": {
    "publisher": "Microsoft.EnterpriseCloud.Monitoring",
    "type": "MicrosoftMonitoringAgent",
    "typeHandlerVersion": "1.0",
    "autoUpgradeMinorVersion": true,
    "settings": {
      "workspaceId": "myWorkSpaceId"
    },
    "protectedSettings": {
      "workspaceKey": "myWorkspaceKey"
    }
  }
}
```

Fig. 3 - ARM template snippet showing how to deploy and configure the Log Analytics VM extension

Please ensure alignment with Azure Policy assignments to avoid conflicts upon deployments.
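If the extension resource is generated by a pipeline rather than written by hand, a small helper can keep it consistent across VM templates. The following is a minimal sketch under that assumption; the helper name and the `workspaceId` and `workspaceKey` template parameters are hypothetical, chosen so that no key is hard-coded in the template.

```python
import json


def build_extension_resource(workspace_id: str, workspace_key: str) -> dict:
    """Build the Log Analytics VM extension resource shown in Fig. 3 as a plain dict."""
    return {
        "type": "extensions",
        "name": "OMSExtension",
        "apiVersion": "[variables('apiVersion')]",
        "location": "[resourceGroup().location]",
        "dependsOn": [
            "[concat('Microsoft.Compute/virtualMachines/', variables('vmName'))]"
        ],
        "properties": {
            "publisher": "Microsoft.EnterpriseCloud.Monitoring",
            "type": "MicrosoftMonitoringAgent",
            "typeHandlerVersion": "1.0",
            "autoUpgradeMinorVersion": True,
            "settings": {"workspaceId": workspace_id},
            "protectedSettings": {"workspaceKey": workspace_key},
        },
    }


# Hypothetical template parameters instead of literal values.
resource = build_extension_resource(
    workspace_id="[parameters('workspaceId')]",
    workspace_key="[parameters('workspaceKey')]",
)

# Sorting keys makes the rendered JSON stable, so repeated runs produce identical output.
rendered = json.dumps(resource, indent=2, sort_keys=True)
print(rendered)
```

Producing the same output for the same input fits the declarative, repeatable nature of ARM deployments described above.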

6. Use Azure Policy to enforce compliance

Recommendation: Use Azure Policy to enforce compliance for installing and configuring Log Analytics VM extension

For enforcing compliance at scale across your tenant(s), Azure Policy is the recommended strategy and is powered by ARM. Its ability to enforce compliance both upon deployment and after a resource has been deployed makes it a powerful solution to prevent and correct non-compliant resources. Azure Policy should be part of your design.

When authoring an Azure Policy definition, you can decide under which conditions the Log Analytics VM extension is deployed. For example, if your design consists of regional workspaces, you can configure the workspace settings based on the resource location so that a VM deployed in West US 2 will be configured to report to a workspace residing in West US 2. The following Azure Policy definition snippet demonstrates this, using a location property:

```json
"policyRule": {
  "if": {
    "allOf": [
      {
        "field": "type",
        "equals": "Microsoft.Compute/virtualMachines"
      },
      {
        "field": "Microsoft.Compute/imagePublisher",
        "equals": "MicrosoftWindowsServer"
      },
      {
        "field": "location",
        "equals": "[parameters('location')]"
      }
    ]
  }
}
```

Fig. 4 - Azure Policy definition snippet to support regional workspaces

Ensure alignment with your DevOps team if using ARM templates to avoid conflicts.
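With regional workspaces, each region typically gets its own assignment of the policy with matching parameter values. The sketch below shows one way to derive those values; the workspace names, the `workspaceName` parameter and the helper names are assumptions for illustration, and only `location` appears in the definition snippet above.

```python
# Hypothetical mapping from Azure region to the regional workspace name.
REGIONAL_WORKSPACES = {
    "westus2": "law-westus2",
    "westeurope": "law-westeurope",
}


def normalize_location(location: str) -> str:
    """Turn a display name such as 'West US 2' into the short form 'westus2'."""
    return location.replace(" ", "").lower()


def assignment_parameters(location: str) -> dict:
    """Build the parameter values for one regional policy assignment."""
    key = normalize_location(location)
    if key not in REGIONAL_WORKSPACES:
        raise KeyError(f"no regional workspace defined for {location!r}")
    return {
        "location": {"value": key},
        "workspaceName": {"value": REGIONAL_WORKSPACES[key]},  # hypothetical parameter
    }


params = assignment_parameters("West US 2")
print(params)
```

Failing loudly for a region without a workspace is a deliberate choice here: it surfaces a gap in the design instead of silently sending data across regions.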

7. Avoid multi-homing, it can have undesired outcomes

Every best practice has exceptions. If you are not concerned about…

- Potential double billing (data could be sent twice)

- Adding complexity to your agent lifecycle management - ARM and Azure Policy do not support multi-homed deployments

- Authoring complex correlation queries

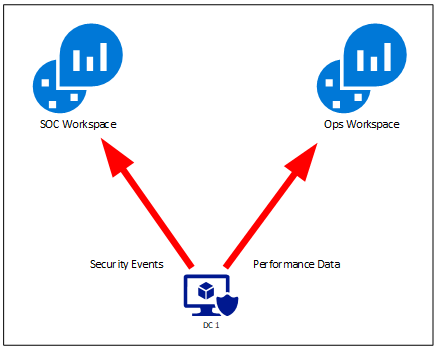

…then multi-homing might be for you. For most use cases, however, it adds unnecessary complexity to your workspace design. The most common reason to implement multi-homing is separation of data: for example, security events are sent to workspace A and performance data is sent to workspace B, as depicted in the illustration below:

Fig. 5 - Multi-homed agent

What appears to be a use case for separating data often originates from the requirement to prevent data from being accessed by an unauthorized party. For example, a Security Operations Center (SOC) might want to prevent the first-line helpdesk from viewing security events for a specific group of computers, like domain controllers.

Please note that multi-homing is not supported using the Log Analytics agent for Linux.
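The double-billing risk mentioned above is easy to quantify with a small sketch. The daily volumes, workspace names and function name are hypothetical; the only assumption about billing is that every workspace charges for the data it ingests.

```python
def billed_gb_per_day(daily_gb_by_type: dict, workspaces: dict) -> float:
    """Sum the GB ingested per day across all workspaces a multi-homed agent reports to."""
    total = 0.0
    for collected_types in workspaces.values():
        total += sum(daily_gb_by_type[data_type] for data_type in collected_types)
    return total


# Hypothetical daily volume produced by one agent.
daily_gb = {"SecurityEvent": 4.0, "Perf": 1.0}

# Clean separation: each data type goes to exactly one workspace.
separated = billed_gb_per_day(daily_gb, {"A": ["SecurityEvent"], "B": ["Perf"]})

# Overlap: both workspaces collect both data types, so everything is ingested twice.
overlapping = billed_gb_per_day(
    daily_gb, {"A": ["SecurityEvent", "Perf"], "B": ["SecurityEvent", "Perf"]}
)

print(separated, overlapping)
```

Any data type collected by more than one workspace is counted once per workspace, which is how a multi-homed design can inflate ingestion costs.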

8. Be selective in installing Azure monitoring solutions

Recommendation: Be selective in installing Azure monitoring solutions to control ingestion costs

One of the most common questions related to cost and billing is “Which resources are responsible for a high monthly bill?”. Answering this question can be challenging, so it is important to understand the factors driving costs. Egress traffic costs were covered in an earlier section. Another factor is the set of solutions that send data to your workspace.

Azure Monitor offers a number of monitoring solutions which provide additional insight into the operation of a particular application or service. These solutions store their data in your workspace and affect ingestion costs. How data collection is configured differs per solution.

For example, Microsoft Defender for Cloud (formerly Azure Security Center) offers the option to configure the log level for Windows-based agents:

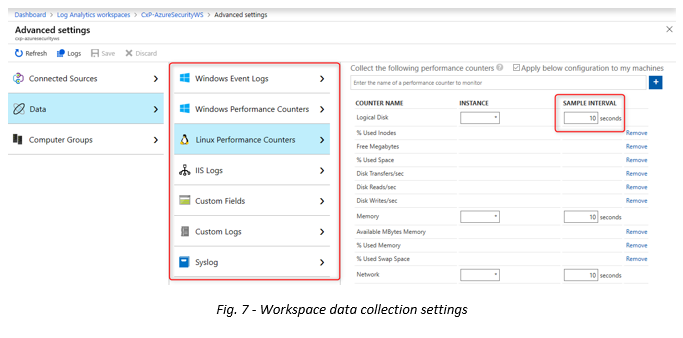

Settings that affect data ingestion can also be found in the workspace advanced settings. These include options for configuring the log collection interval and, for example, the Syslog facilities (not on the screenshot below):

Analyzing workspace costs

Each Log Analytics workspace has the option to explore usage details, as shown in the screenshot below:

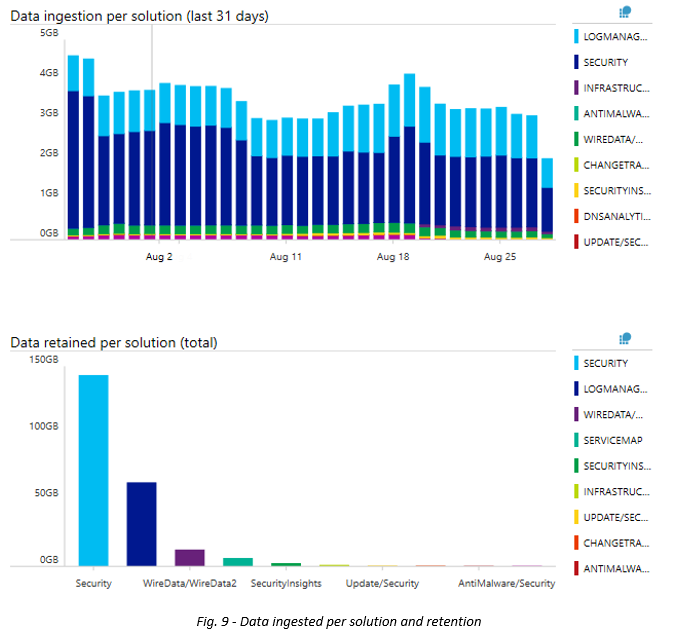

This can be visualized with an out of the box bar chart, based on the data ingestion per solution and data retained per solution:

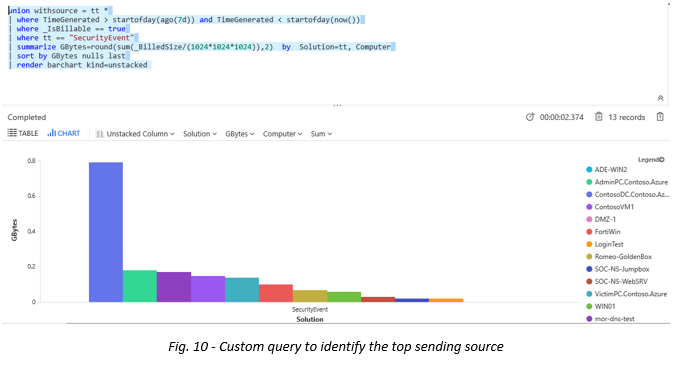

For a more detailed analysis, it is recommended to get acquainted with the workspace query language, the Kusto Query Language (KQL). A number of helpful queries can be found here. These queries can be customized, for example to identify which computer has sent the most Security Events over the last 7 days:

```kusto
union withsource = tt *
| where TimeGenerated > startofday(ago(7d)) and TimeGenerated < startofday(now())
| where _IsBillable == true
| where tt == "SecurityEvent"
| summarize GBytes=round(sum(_BilledSize/(1024*1024*1024)),2) by Solution=tt, Computer
| sort by GBytes nulls last
| render barchart kind=unstacked
```

This allows you to quickly identify the top computers sending data.
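For readers less familiar with KQL, the aggregation in the query above can be mirrored in a few lines of Python. This is an illustrative sketch on made-up sample records, not a replacement for the query; the field names and values are hypothetical, and the time-window filter is left out to keep the sample small.

```python
from collections import defaultdict

BYTES_PER_GB = 1024 ** 3


def top_senders(records, table="SecurityEvent"):
    """Sum billable bytes per computer for one table and rank computers by GB."""
    totals = defaultdict(int)
    for rec in records:
        # Same filters as the query: billable records of the chosen table only.
        if rec["is_billable"] and rec["table"] == table:
            totals[rec["computer"]] += rec["billed_size"]
    ranked = [(computer, round(size / BYTES_PER_GB, 2)) for computer, size in totals.items()]
    return sorted(ranked, key=lambda item: item[1], reverse=True)


# Hypothetical sample records.
sample = [
    {"computer": "web-01", "table": "SecurityEvent", "is_billable": True, "billed_size": BYTES_PER_GB // 2},
    {"computer": "dc-01", "table": "SecurityEvent", "is_billable": True, "billed_size": 3 * BYTES_PER_GB},
    {"computer": "dc-01", "table": "SecurityEvent", "is_billable": True, "billed_size": 1 * BYTES_PER_GB},
    {"computer": "dc-01", "table": "Perf", "is_billable": True, "billed_size": 2 * BYTES_PER_GB},
    {"computer": "web-01", "table": "SecurityEvent", "is_billable": False, "billed_size": 5 * BYTES_PER_GB},
]

ranking = top_senders(sample)
print(ranking)
```

The non-billable record and the `Perf` record are ignored, just as the `_IsBillable` and table filters exclude them in the query.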