
Microsoft Developer Community Blog

Labels

Accessibility

AI

AI Studio

AKS

AMD

annotation

anomaly detection

Anomaly Detector

API Design

App Service

Artificial Intelligence

Azure

AzureAI

Azure AI Search

Azure AI Services

Azure Cache for Redis

Azure Confidential Computing

Azure Data

Azure Databricks

Azure Developer

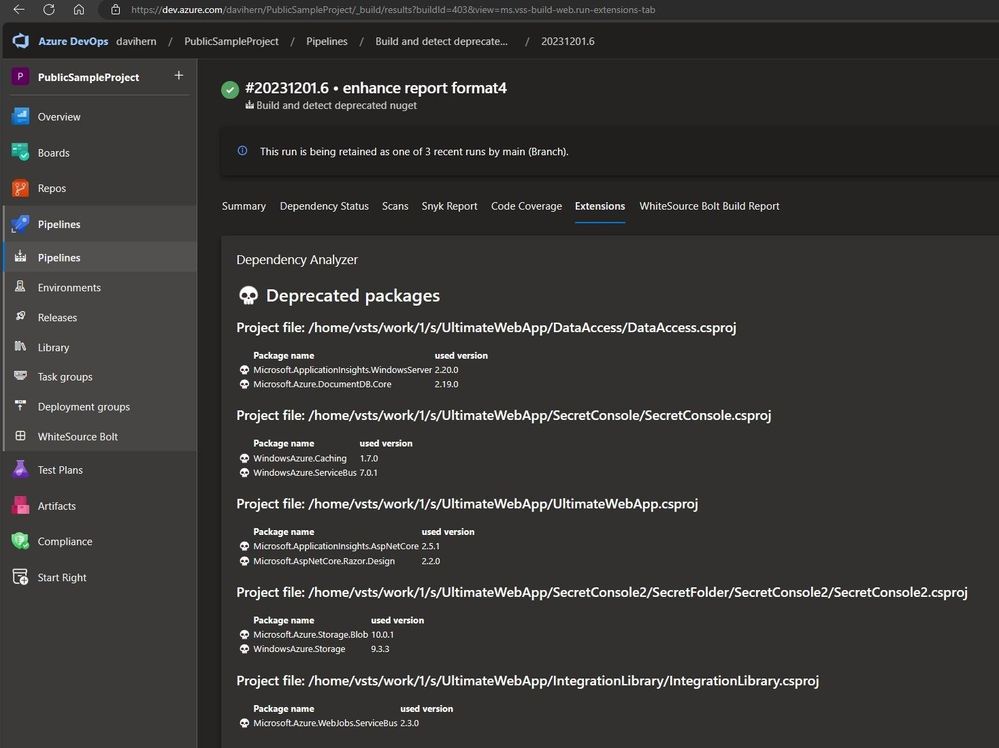

Azure DevOps

Azure Load Testing

Azure Machine Learning

Azure ML

Azure Search

AzureSQL

Azure SQL DB

Azure Synapse

Best Practices

Bruno Capuano

Carlotta Castelluccio

chat bot

CodeStories

ContributorStories

copilot

Deep Learning

Developer

DevOps

Doccano

E2E Solutions

Feast

Feature Store

Functions

Get Started

github

Gwyneth Pena-Siguenza

Gwyneth Peña-Siguenza

Hugging Face

Image Annotation

Insurance

IoT

Keypoint Detection

knowledge mining

Laurent Bugnion

Learning

LLM

LUIS

Machine Learning

Microsoft Build 2021

Microsoft Build 2022

Microsoft Build 2023

Microsoft Dev Box

Microsoft Fabric

Microsoft Ignite 2021

Microsoft Ignite 2023

Missing Value Imputation

ML

MLOps

mvp

Natural Language Processing

NER

Neural Machine Translation

Next Best Question

NLP

NMT

Object Segmentation

ollama

Phi-3

Python

Pytorch

Redis

Resources

scikit-learn

Semantic Kernel

Serverless

Service Fabric

SLM

Software Architecture

tensorflow

Time Series

Tips and Tricks

visual studio

Web Apps

youtube
