- Home

- Azure Data

- Azure Database for PostgreSQL Blog

- Copy data from Azure Blob to Azure Database for PostgreSQL using Azure Data Factory

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Azure Data Factory is a fully managed data integration service that allows you to create data-driven workflows in a code free visual environment in Azure for orchestrating and automating data movement and data transformation. Azure Database for PostgreSQL is now a supported sink destination in Azure Data Factory. Azure Data factory can be leveraged for secure one-time data movement or running continuous data pipelines which loads data into Azure Database for PostgreSQL from disparate data sources running on-premises, in Azure or other cloud providers for analytics and reporting.

In this tutorial, you create a Data Factory pipeline that copies data from Azure Blob Storage to Azure Database for PostgreSQL. The configuration pattern in this tutorial applies to copying from a file-based data store to a relational data store.

Prerequisites

If you don't have an Azure subscription, create a free account before you begin.

Azure Storage account. You use the blob storage as source data store. If you do not have an Azure storage account, see the Create a storage account article for steps to create one.

Azure Database for PostgreSQL. You use the database as sink data store. If you do not have an Azure Database for PostgreSQL, see the Create an Azure Database for PostgreSQL article for steps to create one.

You can provision the prerequisites quickly using this azure-quickstart-template :

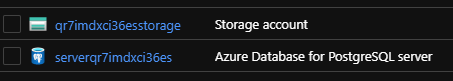

Once you deploy the above template, you should see the following resources in your resource group:

Create a blob and a PostgreSQL table

Now, prepare your Azure Blob and Azure Database for PostgreSQL for the tutorial by performing the following steps:

Create a source blob

1. Launch Notepad. Copy the following text and save it as employee.txt file on your disk.

John, Doe Jane, Doe

2. Use tools such as Azure Storage Explorer to create a container named “adftutorial”, and to upload the “employee.txt” file to the container in a folder named “input”

Create a sink PostgreSQL table

1. Use the following SQL script to create the public.employee table in your Azure Database for PostgreSQL :

CREATE TABLE public.employee

(

ID INT NOT NULL GENERATED ALWAYS AS IDENTITY,

FirstName VARCHAR NOT NULL,

LastName VARCHAR NOT NULL

)

2. Allow Azure services to access Azure Database for PostgreSQL Server. Ensure that Allow access to Azure services setting is turned ON for your Azure Database for PostgreSQL Server so that the Data Factory service can write data to your Azure Database for PostgreSQL Server. Here are the instructions to verify and turn on this setting.

Important: This option configures the firewall to allow all connections from Azure including connections from the subscriptions of other customers. When selecting this option, make sure your login and user permissions limit access to only authorized users.

Create Azure Data Factory

The following template creates a data factory of version 2 with a pipeline that copies data from a folder in an Azure Blob Storage to a table in an Azure Database for PostgreSQL.

Once the template is deployed successfully, you can monitor status of ADF copy activity by running the following commands in PowerShell:

1. Launch Azure PowerShell.

2. Download runmonitor.ps1 to a folder on your machine.

Invoke-WebRequest -Uri https://raw.githubusercontent.com/Azure/azure-quickstart-templates/master/101-data-factory-v2-blob-to-postgresql-copy/scripts/runmonitor.ps1 -OutFile runmonitor.ps1

3. Install the Azure PowerShell module

Install-Module -Name AzureRM -AllowClobber

4. Run the following command to log in to Azure.

Login-AzureRmAccount

5. Run the following command to select the azure subscription in which the data factory exists:

Select-AzureRmSubscription -SubscriptionId "<Subscription Id>"

6. Switch to the folder where you downloaded the script file runmonitor.ps1

7. Run the following command to monitor copy activity after specifying the names of your Azure resource group and the data factory.

.\runmonitor.ps1 -resourceGroupName "<name of your resource group>" -DataFactoryName "<name of your data factory>"

If the Status is Failed, you can check the error message printed out.

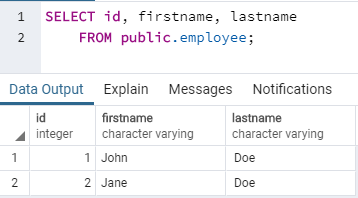

If the Status is Succeeded, you can view the new data ingested in PostgreSQL table:

If you have trouble deploying the ARM Template, please let us know by opening an issue.

Feel free to contribute any updates or bug fixes by creating a pull request.

Thank you!

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.